Large Language Models in Bioinformatics: A New Era of Discovery?

December 19, 2024

Unlocking the Power of Large Language Models in Bioinformatics

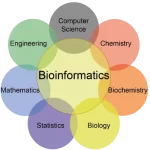

Large Language Models (LLMs), like GPT variants, have rapidly advanced the field of artificial intelligence, enabling groundbreaking applications across industries. Bioinformatics—a discipline that combines biology, computer science, and statistics to analyze complex biological data—is now witnessing the transformative potential of LLMs. A recent study highlights the capabilities and limitations of LLMs in bioinformatics tasks, shedding light on how these models can contribute to this rapidly evolving field.

The Advent of AI in Bioinformatics

LLMs are trained on vast datasets to generate human-like text and solve problems via natural language prompts. This unique ability has already demonstrated value in diverse fields like medical education, computational linguistics, and data-driven research. But how do these models fare in bioinformatics, where precision and complexity often intersect?

The study evaluated LLMs such as GPT-4 and GPT-3.5 across various bioinformatics tasks, including coding region identification, peptide analysis, molecular optimization, and text mining. The findings reveal both opportunities and challenges, marking the beginning of a new era in bioinformatics research powered by AI.

Assessing LLMs for Key Bioinformatics Tasks

The study focused on five core bioinformatics tasks, each critical for advancing biological research and medical innovation:

- Identifying Coding Regions in DNA: LLMs analyze DNA sequences to locate coding regions by identifying start and stop codons, a fundamental step in understanding gene expression.

- Detecting Antimicrobial and Anti-cancer Peptides: These peptides hold promise as therapeutic agents. Accurate identification by LLMs could streamline the drug discovery pipeline.

- Molecular Optimization: By generating and modifying molecular structures, LLMs can improve properties like synthetic accessibility and drug-likeness.

- Gene and Protein Named Entity Recognition: Extracting gene and protein names from scientific literature is crucial for biomedical text mining, but the task is complicated by inconsistent nomenclature.

- Educational Problem Solving: From string algorithms to probability questions, LLMs can address bioinformatics challenges, offering utility in educational contexts.

The researchers converted biological sequences into text prompts compatible with LLMs, enabling the models to generate relevant predictions in a bioinformatics context.

Strengths and Shortcomings of LLMs in Bioinformatics

Strengths:

- Success in Basic Tasks: LLMs performed well on simpler tasks, often matching or exceeding traditional methods. For example, GPT-4 successfully identified coding regions in DNA sequences and generated algorithms for sequence analysis.

- Fine-Tuning Capabilities: Fine-tuned models like GPT-3.5 (Davinci-ft) outperformed specialized models in specific tasks, such as identifying antimicrobial peptides.

- Prompt Engineering: The study demonstrated that carefully designed prompts, especially using techniques like chain-of-thought reasoning, significantly enhanced model performance.

- Versatility: LLMs showcased potential across a wide range of tasks, from functional genomic analysis to educational applications.

Limitations:

- Complex Task Challenges: On tasks requiring deep domain knowledge, like molecular optimization or entity recognition, LLMs sometimes produced errors or incomplete solutions.

- Model Variability: Performance varied across models, with GPT-4 outperforming GPT-3.5 and Llama 2 (70B) lagging in some tasks.

- Conservative Approach: GPT-4 tended to make cautious, small modifications in molecular optimization, which left it less effective than the specialized model Modof at improving the octanol-water partition coefficient (logP).

Applications and Future Potential of LLMs in Bioinformatics

Despite their limitations, LLMs have demonstrated transformative potential in several bioinformatics areas:

- Genome Analysis: Identifying coding regions in DNA sequences can accelerate advancements in functional genomics.

- Drug Discovery: LLMs can aid in drug screening by identifying therapeutic peptides and optimizing molecular designs.

- Biomedical Text Mining: Improved entity recognition capabilities can streamline the extraction of critical information from scientific literature.

- Education and Training: LLMs can serve as valuable tools for teaching bioinformatics concepts and solving complex problems in academic settings.

Challenges and Ethical Considerations

While LLMs offer immense promise, their integration into bioinformatics must address key challenges:

- Training Data Transparency: Lack of visibility into pre-training datasets raises concerns about data bias and reproducibility.

- Rapid Model Evolution: As LLMs are frequently updated, ensuring consistency and reliability in bioinformatics workflows will be crucial.

- Ethical Use: The adoption of AI in biological research necessitates safeguards to prevent misuse and ensure data security.

Conclusion: A New Frontier in Bioinformatics

Large Language Models have opened up exciting possibilities for bioinformatics, enabling researchers to tackle complex problems with unprecedented efficiency. From drug discovery to genome analysis, LLMs are poised to revolutionize the field. However, their successful application will require careful consideration of their limitations, ongoing refinement, and ethical oversight.

As LLMs continue to evolve, they promise to become indispensable tools for advancing our understanding of biology and improving human health, heralding a new era of discovery in bioinformatics.

What are Large Language Models (LLMs) and why are they gaining attention in bioinformatics?

Large Language Models (LLMs) are advanced neural network models, like the GPT variants, that are trained on vast amounts of text data. They can generate human-like text and perform various tasks via a natural language interface. LLMs have attracted considerable interest in bioinformatics because a single model offers a versatile platform for diverse problems: identifying coding regions in DNA, extracting gene and protein names from text, detecting antimicrobial and anti-cancer peptides, optimizing molecules for drug discovery, and solving complex bioinformatics problems. For some of these tasks they are seen as a possible alternative to domain-specific models.

What kind of bioinformatics tasks were evaluated using LLMs in this research?

The study evaluated LLMs on a wide range of bioinformatics tasks, which included:

- Identifying Potential Coding Regions: Locating sections of DNA sequences that code for proteins.

- Identifying Antimicrobial Peptides (AMPs): Determining if a given peptide sequence has antimicrobial properties.

- Identifying Anti-cancer Peptides (ACPs): Evaluating if a peptide sequence has anti-cancer properties.

- Molecule Optimization: Modifying a given molecule to improve properties like lipophilicity, synthetic accessibility, and drug-likeness while preserving its basic structure.

- Gene and Protein Named Entity Recognition: Extracting gene and protein names from scientific literature.

- Educational Bioinformatics Problem Solving: Answering bioinformatics problems that encompass string algorithms, combinatorics, dynamic programming, alignment, phylogeny, probability, and graph algorithms.

FAQs: Large Language Models in Bioinformatics

How did the researchers approach evaluating LLMs for these bioinformatics tasks?

The researchers framed the bioinformatics tasks as natural language processing problems. They converted biological data, such as DNA sequences, protein sequences, and chemical compounds, into text and fed it to the LLMs along with carefully designed prompts. The LLMs then generated predictions from the input text and prompts. Performance was evaluated by comparing the LLM outputs with established baselines and with the known correct results. In some cases, the study fine-tuned the LLMs on domain-specific data to improve performance.
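To make the idea of turning a sequence into a prompt concrete, here is a minimal Python sketch. The function name `build_cds_prompt` and the prompt wording are assumptions made for illustration; they are not the prompts used in the study, and no particular LLM API is implied.

```python
def build_cds_prompt(dna: str, step_by_step: bool = True) -> str:
    """Wrap a DNA sequence in a natural-language prompt.

    The wording below is hypothetical; it only illustrates the general
    pattern of "task instruction + sequence as text + optional reasoning hint".
    """
    # Normalize the input: drop whitespace and use upper case.
    sequence = "".join(dna.split()).upper()

    # Reject characters that are not plausible DNA symbols.
    invalid = set(sequence) - set("ACGTN")
    if invalid:
        raise ValueError(f"unexpected characters in DNA sequence: {sorted(invalid)}")

    lines = [
        "You are assisting with a bioinformatics task.",
        "Identify the potential coding regions (CDS) in the DNA sequence below.",
        f"Sequence: {sequence}",
    ]
    if step_by_step:
        # A chain-of-thought style hint asking for intermediate steps.
        lines.append(
            "Work step by step: list each start codon, find the first in-frame "
            "stop codon after it, then report the longest region."
        )
    return "\n".join(lines)


prompt = build_cds_prompt("atg aaa tga")
print(prompt)
```

The resulting string would then be sent to whichever model is being evaluated; keeping prompt construction in one function makes it easier to compare prompt variants on the same inputs.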

What did the study find about LLMs in identifying potential coding regions?

The study found that LLMs, particularly GPT-4, can identify potential coding regions (CDS) in DNA sequences by recognizing start and stop codons. GPT-4 was also able to identify the longest CDS when the prompt used a step-by-step, chain-of-thought approach. Additionally, GPT-4 was able to provide an effective algorithm for identifying coding regions. Llama 2 (70B), however, performed very poorly on this task. Google Bard suggested existing tools for the task and provided a functional algorithm on request.
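For readers unfamiliar with the underlying task, the following Python sketch shows one simple way to scan for open reading frames using start and stop codons. It is an illustrative baseline, not the algorithm GPT-4 produced in the study, and it makes simplifying assumptions: it checks only the three forward-strand reading frames, treats ATG as the only start codon, and ignores nested start codons inside an open frame.

```python
START = "ATG"
STOPS = {"TAA", "TAG", "TGA"}


def find_orfs(dna: str) -> list[tuple[int, int, str]]:
    """Return (start, end, sequence) for each ORF on the forward strand.

    `start` is the 0-based index of the ATG, `end` is exclusive and
    includes the stop codon.
    """
    seq = dna.upper()
    orfs = []
    for frame in range(3):
        start = None  # index of the currently open start codon, if any
        for i in range(frame, len(seq) - 2, 3):
            codon = seq[i:i + 3]
            if start is None and codon == START:
                start = i
            elif start is not None and codon in STOPS:
                orfs.append((start, i + 3, seq[start:i + 3]))
                start = None  # look for the next ORF in this frame
    return orfs


def longest_orf(dna: str):
    """Return the longest ORF tuple, or None if no ORF is found."""
    orfs = find_orfs(dna)
    return max(orfs, key=lambda o: o[1] - o[0]) if orfs else None


print(find_orfs("CCATGAAATGA"))
print(longest_orf("ATGTAACATGAAACCCTAG"))
```

A production tool would also scan the reverse complement and apply a minimum length threshold, which this sketch leaves out for brevity.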

How well did LLMs perform on peptide identification tasks (AMPs and ACPs) and what methods were used?

LLMs performed well on peptide identification. The researchers fine-tuned a GPT-3.5 model (Davinci-ft) to identify antimicrobial peptides (AMPs) and anti-cancer peptides (ACPs). Compared with other machine-learning models and protein language models, such as AMP-BERT and ESM, the fine-tuned GPT-3.5 model showed superior results, achieving the highest accuracy on the training datasets and strong performance on the test datasets. The model distinguished positive from negative instances well even though the datasets are imbalanced.

How did the LLMs fare when tasked with molecule optimization?

GPT-4 demonstrated good performance in molecule optimization by generating valid SMILES (Simplified Molecular-Input Line-Entry System) strings and improving metrics like synthetic accessibility (SA) and drug-likeness (QED). However, GPT-4 fell short compared to a dedicated molecule optimization model, Modof, when it came to improving the octanol-water partition coefficient (logP). GPT-4 tended to make more conservative modifications, often removing charged groups or small fragments, rather than making more extensive structural changes. The study indicates that GPT-4 has a good grasp of basic chemistry but may need further training to achieve superior performance in certain tasks in this area.

What were the main challenges encountered by LLMs when used for named-entity recognition (NER) of genes and proteins?

The main challenges that LLMs encountered in gene and protein named-entity recognition included:

- Missing entities: LLMs often missed some gene or protein name mentions in sentences.

- Misunderstanding gene names: LLMs sometimes failed to recognize that certain multi-word phrases were a single entity name, treating them as multiple entities.

- Overall performance: GPT-3.5 had poor performance in this task compared to fine-tuned domain-specific models. GPT-4 fared better but still did not perform as well as other models used for NER.

How did the LLMs perform on the educational bioinformatics problem-solving tasks?

LLMs showed mixed performance in this task. GPT-4 performed better than GPT-3.5 across all types of problems with higher success rates. Both models showed better accuracy on combinatorics problems but had difficulty with probability-related and more complex logical problems. GPT-3.5 demonstrated good performance on simpler problems but gave incorrect results for the complex ones. GPT-4 could solve many problems correctly, including the steps for complex problems, but it could make errors on the final answer. This shows the potential of the models for such tasks, but reveals the current limitations for more difficult logical problems.

Glossary of Key Terms

Large Language Model (LLM): A type of artificial intelligence model that is trained on large amounts of text data, enabling it to generate human-like text, translate languages, and perform other language-related tasks.

Bioinformatics: An interdisciplinary field that combines biology, computer science, and statistics to analyze and interpret biological data, such as DNA sequences and protein structures.

Prompt: The input text given to an LLM to initiate a specific response. The quality of the prompt is critical to achieving accurate and relevant results.

Coding Sequence (CDS): A region of DNA or RNA that contains instructions for making a protein, specifically by coding for a sequence of amino acids.

Open Reading Frame (ORF): A sequence of DNA that starts with a start codon (usually ATG) and ends with a stop codon (TAA, TAG, or TGA). This segment has the potential to code for a protein.

Antimicrobial Peptide (AMP): A short chain of amino acids that has the ability to kill or inhibit the growth of microorganisms, such as bacteria and fungi.

Anti-cancer Peptide (ACP): A peptide that is designed to target and kill cancer cells. Often these peptides have mechanisms that target cancer cells and not healthy cells.

Molecular Optimization: The process of modifying a molecule’s structure to enhance certain properties, such as its binding affinity, solubility, or drug-likeness.

SMILES String: A text-based notation for representing chemical structures, often used in computational chemistry and drug discovery.

Named Entity Recognition (NER): A natural language processing task that involves identifying and categorizing named entities in text, such as names of genes, proteins, and other biological entities.

Rosalind: A web-based educational platform used for learning bioinformatics and programming. It provides practice problems in the form of computational challenges.

F1-Score: A metric used to assess the accuracy of a model by considering both its precision and recall scores; a higher F1-score indicates a better balance between these two.

Precision: A metric used to assess how accurate the positive predictions of a model are; a high precision value means the model is accurate when it predicts something to be true.

Recall: A metric used to assess how many of the true positives the model correctly predicts; a high recall value means the model is good at finding all the true positives.
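The three metrics above can be computed in a few lines of Python. This is a minimal sketch that assumes exact-match scoring over sets of entity strings; the function name `precision_recall_f1` and the example gene names are illustrative, and the study's own evaluation script may differ.

```python
def precision_recall_f1(predicted: set[str], gold: set[str]) -> tuple[float, float, float]:
    """Compute exact-match precision, recall, and F1 for two sets of entities."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: a multi-word name is split in two and one entity is missed.
gold = {"tumor necrosis factor alpha", "BRCA1", "p53"}
predicted = {"tumor necrosis factor", "alpha", "BRCA1"}

p, r, f = precision_recall_f1(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Under exact matching, splitting one multi-word gene name into two predictions lowers both precision and recall, which is why the entity-boundary errors described in the FAQ are costly.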

LogP (Octanol-Water Partition Coefficient): A measure of a molecule’s lipophilicity, or its ability to dissolve in fats rather than water. This metric is important in drug development.

Synthetic Accessibility (SA): A metric that quantifies the ease with which a molecule can be synthesized. This is important in drug discovery because molecules need to be practical to produce.

QED (Quantitative Estimate of Drug-likeness): A metric that measures how similar a molecule is to known drugs, based on its physicochemical properties.

LLMs in Bioinformatics Research: A Study Guide

Quiz

Instructions: Answer each question in 2-3 sentences.

- What are the three main stages of training for Large Language Models (LLMs), according to the paper?

- Besides medical education, what are two other areas where the paper indicates that the applicability of LLMs has been explored?

- How does the paper argue that its research goes “deeper” than other works on LLMs in bioinformatics?

- What is a coding sequence (CDS), and why is its identification important in the context of viral sequences?

- What is the primary mechanism through which antimicrobial peptides (AMPs) are described to work?

- What two properties of molecules does the molecule optimization section of the study attempt to balance?

- What makes gene and protein named entity extraction challenging, according to the study?

- What does the Rosalind platform offer in the context of this study, and what does it contain?

- According to the study, what is one advantage that GPT-4 seems to have over Modof in molecule optimization?

- What are the three metrics used to assess gene and protein named entity recognition in the paper?

Quiz Answer Key

- The three main stages are unsupervised pre-training, supervised fine-tuning, and human feedback. These stages allow LLMs to generate fluent and contextually relevant conversations from text-based input.

- The applicability of LLMs has also been explored in areas such as reasoning, machine translation, and question answering tasks. These explorations demonstrate the versatility of LLMs beyond traditional natural language processing.

- The authors claim their work is more in-depth and explores more complicated and typical bioinformatics tasks, while other works focus on preliminary explorations or educational settings for beginners. Their study evaluates more complex, practical scenarios.

- A coding sequence (CDS) is a region of DNA or RNA that contains essential information for protein encoding. Identifying CDS is crucial because it helps in understanding a virus’s biological characteristics, as well as DNA and gene expression.

- Antimicrobial peptides (AMPs) typically work by interfering with vital biological components, such as cell membranes and DNA replication mechanisms. In this way they kill bacteria and other harmful organisms.

- The molecule optimization section attempts to enhance the octanol-water partition coefficient (logP), and simultaneously considers penalties based on synthetic accessibility (SA). This is to make optimized compounds more relevant to drug development.

- Gene and protein named entity extraction is challenging due to the variety of alternative names used in scientific literature and databases, and because new biological entities are continuously being discovered. The diversity of gene and protein nomenclatures can confuse recognition algorithms.

- The Rosalind platform is an educational resource used for learning bioinformatics and programming. The platform provides a problem-solving environment and the study used 105 questions from Rosalind to test the models’ abilities.

- GPT-4 is capable of articulating the modifications it makes to a molecule, and providing rationales for these alterations, while Modof acts as a “black box.” GPT-4 also has an advantage in improving synthetic accessibility and drug-likeness metrics.

- The three metrics used are Precision (P), Recall (R), and F1-Score (F). These metrics help assess the accuracy and effectiveness of the gene and protein named entity recognition.

Essay Questions

Instructions: Answer the following essay questions, drawing on the source material, in a well-organized essay format.

- Discuss the broader implications of the findings in the paper for the field of bioinformatics. How might LLMs reshape future research methodologies and practices in this field?

- The study identifies both strengths and weaknesses of LLMs in the context of bioinformatics tasks. Analyze and discuss these findings, providing concrete examples from the paper.

- Critically evaluate the comparative methods used in this study. How did the study demonstrate the strengths and weaknesses of LLMs in each task? In your response, consider the other language models and tools against which LLMs were compared.

- The study emphasizes the importance of “appropriate prompts” when working with LLMs. Explain this concept in your own terms, and discuss how the study used it across different tasks, focusing on the prompt used for the “identifying coding regions” task.

- The study includes molecule optimization as one assessment of LLM abilities. Why is this inclusion useful, and what does this task contribute to the overall argument of the study?