Quick Guide Tutorial: Challenges in Big Data and Bioinformatics

October 13, 2023

Handling and Analyzing Terabytes of Biological Data

The vast proliferation of data in the modern age has given rise to new terminologies, methodologies, and fields of study. Among these, two prominent fields that have seen significant attention are Big Data and Bioinformatics. This introduction seeks to provide a concise definition of these terms and briefly explore the challenges associated with handling extensive biological datasets.

Big Data: At its core, Big Data refers to datasets that are so voluminous and complex that traditional data-processing application software is insufficient to handle them. Such data can be derived from myriad sources, including business transactions, social media, and sensors used in the Internet of Things (IoT). Big Data is characterized by its high volume, velocity, and variety.

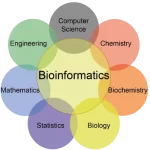

Bioinformatics: Bioinformatics is an interdisciplinary field that leverages computational methods to analyze and interpret biological data. It encompasses the development and application of computational tools and techniques to manage, analyze, and visualize biological data, especially molecular biology data like DNA, RNA, and protein sequences.

Challenges in handling large-scale biological data: Vast biological datasets pose several distinctive challenges:

- Data Volume: The sheer volume of data generated by modern biological experiments, such as next-generation sequencing (NGS), can be overwhelming. Storage becomes a primary concern, as does the time required for data transfer and backup.

- Data Quality: Biological data often comes with noise, errors, or missing values. Pre-processing to clean and standardize the data is crucial.

- Data Complexity: Unlike some other types of data, biological data is highly heterogeneous, encompassing everything from DNA sequences to protein structures to metabolic pathways. This diversity makes integration and comparison challenging.

- Computational Challenges: Many bioinformatics algorithms, especially those used in tasks like genome assembly or phylogenetic tree construction, are computationally intensive, requiring powerful hardware and optimized software.

- Data Security and Privacy: As with medical data, biological data often needs to be handled with care to ensure privacy, especially when dealing with human genetic information.

- Interoperability: With a plethora of bioinformatics tools and databases available, ensuring that they can work seamlessly together is a formidable challenge.

In conclusion, while Big Data and Bioinformatics offer exciting opportunities for advancements in science and medicine, they also present numerous challenges. Addressing these challenges requires a multidisciplinary approach, combining expertise in biology, computer science, statistics, and more.

- What is Big Data in Bioinformatics?

Bioinformatics sits at the intersection of biology and computational science, and as such, it grapples with a vast and ever-growing volume of biological data. The term “Big Data” in bioinformatics primarily refers to the extensive datasets produced by modern biological techniques and technologies.

Data Volume in Modern Biology:

- Genomics: With the advent of next-generation sequencing (NGS) technologies, the cost of sequencing an entire human genome has plummeted, leading to an explosion in genomic data. The Human Genome Project, completed in 2003, took over a decade and cost billions of dollars. Today, by contrast, a whole human genome can be sequenced in days for a tiny fraction of that cost. As a result, thousands of genomes are sequenced every day worldwide, adding up to petabytes of data.

- Proteomics: Proteomics, the study of the entire set of proteins expressed by a genome, also produces vast amounts of data. Mass spectrometry-based proteomics can identify and quantify thousands of proteins in a single experiment. Given the dynamic nature of the proteome, multiple experiments under different conditions are often required, multiplying the data generated.

- Transcriptomics: This field studies the complete set of RNA transcripts produced by the genome under specific conditions. Like genomics and proteomics, modern transcriptomic techniques, such as RNA-seq, produce vast datasets that capture the dynamic nature of gene expression.

- Metabolomics and Phenomics: These fields study the complete set of metabolites and phenotypes, respectively, in a biological organism. Analytical techniques such as nuclear magnetic resonance (NMR) spectroscopy and mass spectrometry generate comprehensive metabolomic datasets, while high-throughput phenotyping platforms produce large volumes of phenotypic measurements.

Significance of Managing and Analyzing This Data:

- Precision Medicine: By analyzing individual genomic data, healthcare professionals can tailor treatments and interventions to the individual patient, with the aim of improving outcomes.

- Understanding Disease Mechanisms: With large-scale biological data, researchers can identify patterns and associations that might be missed in smaller datasets. This understanding can lead to the discovery of novel disease markers or therapeutic targets.

- Drug Discovery and Development: Big Data allows for the identification of potential drug targets and the prediction of drug responses in different populations, streamlining the drug development process.

- Evolutionary and Population Studies: Large datasets enable scientists to trace evolutionary patterns, study genetic diversity, and understand population dynamics at an unprecedented scale.

- Data-driven Hypotheses: Complementing the traditional hypothesis-driven approach, vast biological datasets enable data-driven research, in which patterns and insights derived from the data itself guide scientific inquiry and suggest new hypotheses.

In essence, Big Data in bioinformatics is not just about the sheer volume of data but also about the potential insights and advancements that this data can facilitate. Proper management and sophisticated analysis of this data are paramount to unlocking its full potential and advancing the fields of biology and medicine.

- Challenges in Handling Big Biological Data

a. Data Storage

Modern biological research, particularly in the realms of genomics, proteomics, and other ‘-omics’ fields, produces an unprecedented amount of data. Handling, storing, and accessing this vast amount of information is not a trivial task and presents several challenges.

Scale of Biological Data:

- Magnitude: It’s not uncommon for a single high-throughput experiment, such as next-generation sequencing (NGS), to produce data ranging from gigabytes (GB) to terabytes (TB). For instance, sequencing a human genome can result in around 100 GB to 200 GB of raw data. When considering projects like the 1000 Genomes Project or large-scale population studies, the data quickly scales to petabytes (PB) and beyond.

- Growth Rate: The volume of biological data is growing at an exponential rate. As technologies improve and become more accessible, more data is generated. The rapid decline in sequencing costs means that more genomes are being sequenced than ever before, contributing to the data deluge.
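The 100 GB to 200 GB figure can be reproduced with back-of-the-envelope arithmetic. The Python sketch below assumes a haploid human genome of about 3.1 billion bases, 30x sequencing coverage, and uncompressed FASTQ that stores one byte per base plus one quality byte per base; read headers and compression (gzip usually shrinks FASTQ considerably) are ignored. The function names are illustrative, not taken from any library.

```python
def raw_fastq_bytes(genome_size_bp: int, coverage: int, bytes_per_base: int = 2) -> int:
    """Rough uncompressed FASTQ size: one sequence byte plus one quality byte
    per sequenced base. Read headers are ignored."""
    total_bases = genome_size_bp * coverage
    return total_bases * bytes_per_base


def to_gb(n_bytes: int) -> float:
    # Decimal gigabytes (10**9 bytes), as storage capacity is usually quoted.
    return n_bytes / 10**9


# Assumptions: ~3.1 Gbp haploid human genome, 30x whole-genome coverage.
single_genome = raw_fastq_bytes(3_100_000_000, 30)
print(f"One 30x genome: ~{to_gb(single_genome):.0f} GB uncompressed")

# Scaling to a hypothetical cohort shows how quickly totals reach petabytes.
cohort_size = 10_000
print(f"{cohort_size} genomes: ~{single_genome * cohort_size / 10**15:.2f} PB")
```

Under these assumptions a single genome comes to roughly 186 GB, and a 10,000-genome cohort to almost 2 PB before any alignment or variant files are added.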

Storage Infrastructure Challenges:

- Cloud vs. On-Premises:

  - Cloud Storage: Cloud platforms such as AWS, Google Cloud, and Azure offer scalable storage. However, transferring large datasets to the cloud can be time-consuming and costly. Additionally, there are concerns about data security and privacy, especially when dealing with sensitive genetic data.

  - On-Premises Storage: Local storage solutions offer more control over data and often faster retrieval times. However, they require significant upfront investment in infrastructure. As data scales, expanding storage capacity can become challenging, and hardware failures always carry a risk of data loss.

- Data Retrieval Speed: Fast and efficient data access is crucial, especially when analyzing large datasets. Delays in data retrieval can significantly slow down research and analysis. Factors affecting data retrieval include the type of storage (e.g., solid-state drives vs. hard disk drives), network bandwidth, and the efficiency of data indexing and organization.

- Cost: Both cloud and on-premises storage come with costs. While cloud storage might seem economical initially, costs can rise quickly with increased data transfer and storage needs. On-premises solutions have high upfront costs but might be more economical in the long run for institutions generating massive amounts of data regularly.

- Data Redundancy and Backup: Given the value and irreplaceability of biological data, ensuring data redundancy (keeping multiple copies) and regular backups is essential. This requirement further increases the storage needs and presents challenges in synchronization and data integrity.
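One common way to confirm that backups and redundant copies are still intact is to compare cryptographic checksums. The sketch below, using only Python's standard library, hashes files in 1 MiB chunks so that even multi-gigabyte FASTQ or BAM files never need to fit in memory. The helper names and chunk size are assumptions made for illustration.

```python
import hashlib
import tempfile
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in fixed-size chunks (1 MiB by default)."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # iter() with a sentinel keeps calling read() until it returns b"" (EOF).
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(original: Path, backup: Path) -> bool:
    """True if both files have the same SHA-256 digest."""
    return file_sha256(original) == file_sha256(backup)


if __name__ == "__main__":
    # Demonstration with two small temporary files standing in for real data.
    with tempfile.TemporaryDirectory() as tmp:
        original = Path(tmp) / "sample.fastq"
        backup = Path(tmp) / "sample_backup.fastq"
        original.write_bytes(b"@read1\nACGT\n+\nIIII\n")
        backup.write_bytes(original.read_bytes())
        print(copies_match(original, backup))  # True
```

In practice, checksums are usually recorded when data are first written, stored alongside the files, and re-verified after every transfer or restore.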

In conclusion, while modern biology’s data-intensive nature offers unparalleled insights and discoveries, it also presents significant challenges in data storage. Addressing these challenges requires a combination of technological advancements, efficient data management strategies, and interdisciplinary collaboration.

b. Data Integration

One of the significant challenges in bioinformatics and computational biology is integrating data from various sources. This challenge is particularly pressing given the diverse nature of biological data, spanning from genomic sequences to protein structures and metabolic pathways.

Combining Data from Various Sources:

- Diverse Data Types: Each ‘-omics’ field (like genomics, transcriptomics, proteomics, and metabolomics) provides a different layer of information about the organism. While genomics offers insights into the genetic blueprint, transcriptomics and proteomics provide details about gene expression and protein functions, and metabolomics offers a snapshot of the metabolic state of the cell. Integrating these datasets can provide a holistic view of an organism’s biology.

- Temporal and Spatial Differences: Data might be collected at different time points (e.g., before and after a treatment) or from various tissues or cell types. Integrating such data requires careful alignment to ensure that comparisons are meaningful.

- Variability in Experimental Conditions: Even slight differences in experimental conditions, such as temperature, reagents used, or equipment, can lead to variations in the data. This variability can pose challenges when trying to integrate or compare datasets from different experiments or labs.
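At its simplest, combining two -omics layers means joining measurements on a shared sample identifier and making any mismatches visible. The Python sketch below uses hypothetical transcript and protein values keyed by made-up sample IDs; real integration would also need normalization, batch correction, and careful matching of time points and tissues, as described above.

```python
from typing import Dict, Set, Tuple

# Hypothetical measurements for one gene, keyed by sample ID.
transcript_levels = {"S1": 12.5, "S2": 8.1, "S3": 15.0}
protein_levels = {"S2": 0.42, "S3": 0.77, "S4": 0.35}


def integrate_by_sample(
    layer_a: Dict[str, float], layer_b: Dict[str, float]
) -> Tuple[Dict[str, Tuple[float, float]], Set[str], Set[str]]:
    """Inner-join two layers on sample ID and report unmatched samples."""
    shared = layer_a.keys() & layer_b.keys()
    joined = {s: (layer_a[s], layer_b[s]) for s in sorted(shared)}
    only_a = set(layer_a) - shared  # e.g. a sample with no proteomics run
    only_b = set(layer_b) - shared
    return joined, only_a, only_b


joined, only_rna, only_protein = integrate_by_sample(transcript_levels, protein_levels)
print(joined)        # {'S2': (8.1, 0.42), 'S3': (15.0, 0.77)}
print(only_rna)      # {'S1'}
print(only_protein)  # {'S4'}
```

Reporting the unmatched samples, rather than silently dropping them, makes gaps in coverage between layers explicit before any joint analysis.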

Standardization and Data Format Challenges:

- Lack of Standardization: Different labs or researchers might use various protocols, equipment, or software, leading to inconsistencies in the data. For instance, two labs might use different genome annotations or protein databases, complicating direct comparison.

- Diverse Data Formats: Biological data can come in a plethora of formats. For genomics alone, there are formats like FASTQ for raw sequence data, BAM/SAM for aligned sequences, and VCF for genetic variants. Each ‘-omics’ field has its own set of commonly used formats, and tools might require specific formats as input.

- Metadata and Annotations: Properly annotating the data with relevant metadata (e.g., experimental conditions, sample source) is crucial for data integration. However, the lack of standardized metadata formats or incomplete annotations can hinder data integration efforts.

- Data Transformation: Often, raw data needs to be transformed or processed before integration. This transformation might include normalization, filtering, or other preprocessing steps. Ensuring that these steps are consistent and reproducible across datasets is essential.

- Semantic Differences: Even when data is in a standardized format, semantic differences can arise. For instance, gene names or identifiers might differ between databases or annotation versions, requiring mapping or translation efforts.
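Semantic differences such as renamed gene symbols are often handled with an explicit mapping step before datasets are merged. The Python sketch below normalizes case and whitespace, maps outdated symbols through an alias table, and reports anything it cannot resolve instead of silently dropping it. The two alias entries mirror HGNC's renaming of SEPT7 to SEPTIN7 and MARCH1 to MARCHF1, but both the table and the set of "current" symbols are illustrative; a real pipeline would load them from an authoritative, versioned source.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Illustrative alias table: outdated symbol -> current symbol.
# A real pipeline would load this from an authoritative, versioned source.
ALIASES: Dict[str, str] = {
    "SEPT7": "SEPTIN7",
    "MARCH1": "MARCHF1",
}


def harmonize(
    symbols: Iterable[str], aliases: Dict[str, str], known: Set[str]
) -> Tuple[List[str], List[str]]:
    """Map raw gene symbols to current ones; return (resolved, unresolved)."""
    resolved: List[str] = []
    unresolved: List[str] = []
    for raw in symbols:
        symbol = raw.strip().upper()          # assumes human-style upper-case symbols
        symbol = aliases.get(symbol, symbol)  # translate outdated names
        if symbol in known:
            resolved.append(symbol)
        else:
            unresolved.append(raw)  # keep the original text for reporting
    return resolved, unresolved


# Hypothetical set of current symbols and hypothetical input list.
current = {"SEPTIN7", "MARCHF1", "TP53"}
ok, missing = harmonize(["sept7", "TP53 ", "MARCH1", "FOO1"], ALIASES, current)
print(ok)       # ['SEPTIN7', 'TP53', 'MARCHF1']
print(missing)  # ['FOO1']
```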

To address these challenges, several initiatives and consortia aim to standardize biological data formats and annotations. Tools and platforms are being developed to facilitate data integration, but the rapidly evolving nature of biological research ensures that data integration remains a dynamic and complex challenge.

c. Data Quality and Cleaning

In the realm of bioinformatics, ensuring data quality is paramount. With vast amounts of data being generated, it’s inevitable that inconsistencies, errors, and other quality issues will arise. Addressing these issues is essential for drawing accurate and meaningful conclusions from the data.

Data Inconsistency, Missing Values, and Noise:

- Data Inconsistency: This can result from various sources, such as equipment malfunctions, human errors during data entry, or differences in experimental protocols across labs. For instance, a gene might be annotated differently in two separate databases, leading to inconsistencies when referencing it.

- Missing Values: During data collection, some values might be missing due to reasons like equipment failures, sample degradation, or data corruption. In genomics, certain regions of the genome might be difficult to sequence, leading to gaps or missing data.

- Noise: Biological data, especially data from high-throughput experiments, typically contain some degree of noise. This noise can arise from various sources, including random errors during sequencing, background signals in imaging experiments, or sample contamination.
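In sequencing data, this noise is quantified per base in FASTQ files as Phred quality scores, where a score Q corresponds to an estimated error probability of 10^(-Q/10). The Python sketch below parses FASTQ records and computes each read's mean quality. It assumes the common Phred+33 encoding and unwrapped four-line records, and the filtering threshold of 20 is a hypothetical choice; dedicated QC tools apply richer criteria.

```python
import io
from typing import Iterator, TextIO, Tuple


def read_fastq(handle: TextIO) -> Iterator[Tuple[str, str, str]]:
    """Yield (read_id, sequence, quality) from four-line FASTQ records."""
    while True:
        header = handle.readline().rstrip()
        if not header:  # end of file
            return
        seq = handle.readline().rstrip()
        plus = handle.readline().rstrip()
        qual = handle.readline().rstrip()
        # Basic validation: malformed records are a common source of bad data.
        malformed = not header.startswith("@") or not plus.startswith("+")
        if malformed or len(seq) != len(qual):
            raise ValueError(f"Malformed FASTQ record near {header!r}")
        yield header[1:], seq, qual


def mean_phred(qual: str, offset: int = 33) -> float:
    """Mean Phred score; each character encodes Q = ord(char) - offset."""
    if not qual:
        return 0.0
    return sum(ord(c) - offset for c in qual) / len(qual)


MIN_MEAN_Q = 20  # hypothetical threshold (Q20 means ~1% error per base)

fastq = io.StringIO(
    "@read1\nACGTACGT\n+\nIIIIIIII\n"
    "@read2\nACGTNNGT\n+\n5555!!55\n"
)
for read_id, seq, qual in read_fastq(fastq):
    q = mean_phred(qual)
    print(read_id, q, "keep" if q >= MIN_MEAN_Q else "drop")
# read1 40.0 keep
# read2 15.0 drop
```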

Importance of Preprocessing and Data Validation:

- Ensuring Accuracy: Cleaning and preprocessing data are crucial steps to ensure that subsequent analyses are based on accurate and high-quality data. Erroneous data can lead to misleading results, wasted resources, and incorrect conclusions.

- Improving Statistical Power: Noise and inconsistencies can increase the variability in the data, reducing the statistical power of subsequent analyses. By cleaning the data, one can increase the chances of detecting true patterns and associations.

- Data Imputation: For missing values, various imputation techniques can be applied to estimate the missing data based on the information available. This process, however, needs to be approached with caution to avoid introducing biases.

- Normalization: Especially in the context of high-throughput experiments, data from different samples or batches might need to be normalized to make them comparable. This step ensures that observed differences are due to actual biological variations and not technical artifacts.

- Quality Control Metrics: Before and after preprocessing, it’s crucial to assess data quality using various metrics. For example, in sequencing experiments, metrics like read depth, base quality scores, and alignment rates can provide insights into data quality.

- Validation: It’s often beneficial to validate certain aspects of the data, especially if they are critical to the study’s conclusions. This validation can be done using independent experiments, alternative measurement techniques, or by comparing with established databases or benchmarks.
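Two of the preprocessing steps above, imputation and normalization, can be sketched in a few lines of standard-library Python. Mean imputation fills each missing value with the average of that feature's observed values, and counts-per-million (CPM) scaling removes differences in sequencing depth between samples. Both are deliberately simple stand-ins: mean imputation understates variance, and RNA-seq tools generally use more robust normalization schemes. The matrix layout (rows are samples, columns are features) is an assumption of this sketch.

```python
from statistics import mean
from typing import List, Optional


def impute_feature_means(rows: List[List[Optional[float]]]) -> List[List[float]]:
    """Replace None in each column with that column's mean of observed values."""
    n_cols = len(rows[0])
    col_means: List[float] = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if row[j] is not None]
        if not observed:
            raise ValueError(f"column {j} has no observed values to impute from")
        col_means.append(mean(observed))
    return [[col_means[j] if v is None else v for j, v in enumerate(row)] for row in rows]


def counts_per_million(counts: List[List[int]]) -> List[List[float]]:
    """Scale each sample (row) so that its counts sum to one million."""
    normalized = []
    for sample in counts:
        total = sum(sample)
        if total == 0:
            raise ValueError("a sample with zero total counts cannot be normalized")
        normalized.append([c / total * 1_000_000 for c in sample])
    return normalized


print(impute_feature_means([[1.0, None], [3.0, 4.0], [None, 6.0]]))
# [[1.0, 5.0], [3.0, 4.0], [2.0, 6.0]]

# Hypothetical counts: the second sample was sequenced 10x deeper.
print(counts_per_million([[1, 3], [10, 30]]))
# [[250000.0, 750000.0], [250000.0, 750000.0]]
```

After CPM scaling the two samples become identical, showing that their raw difference was purely a matter of sequencing depth.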

In conclusion, while modern biological techniques offer unprecedented insights into the intricacies of life, they also come with challenges related to data quality. Addressing these challenges through rigorous preprocessing and validation is essential to harness the full potential of big biological data.

d. Computational Challenges

The vast and intricate nature of biological data requires sophisticated computational approaches to manage, analyze, and interpret. As bioinformatics endeavors to derive meaningful insights from this deluge of data, it encounters a series of computational challenges.

High-Performance Computing Needs:

- Intensive Calculations: Many bioinformatics tasks, such as sequence alignment, structural modeling, and phylogenetic analysis, involve computationally intensive algorithms. For instance, aligning a new genome sequence against a reference genome or conducting a molecular dynamics simulation for protein folding necessitates significant computational power.

- Memory Demands: Some bioinformatics algorithms are memory-intensive. For example, assembling a genome from millions to billions of short DNA reads or constructing large-scale phylogenetic trees requires substantial RAM.

- Storage and I/O Operations: The sheer volume of biological data means that reading from and writing to storage devices become critical operations. Efficient storage solutions and fast I/O operations are vital to prevent bottlenecks.
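Genome assembly shows why memory can become the bottleneck. Many short-read assemblers begin by breaking reads into overlapping substrings of length k (k-mers) and counting them, for example to build a de Bruijn graph. The Python sketch below does this with a plain `Counter`; the function name is illustrative. Every distinct k-mer becomes a table entry, and a single sequencing error can create up to k new, spurious k-mers, so the table grows with both genome size and error count. Dedicated k-mer counters use far more compact encodings than Python strings.

```python
from collections import Counter
from typing import Iterable


def count_kmers(reads: Iterable[str], k: int) -> Counter:
    """Count all overlapping k-mers across reads, skipping any that contain N."""
    counts: Counter = Counter()
    for read in reads:
        for i in range(len(read) - k + 1):
            kmer = read[i : i + k]
            if "N" not in kmer:  # N marks an ambiguous base call
                counts[kmer] += 1
    return counts


reads = ["ACGTAC", "CGTACG"]   # two overlapping hypothetical reads
print(count_kmers(reads, 3))   # each of ACG, CGT, GTA, TAC is seen twice

# A single substitution error (G -> T in the first read) adds brand-new k-mers.
noisy = reads + ["ACTTAC"]
print(len(count_kmers(reads, 3)), len(count_kmers(noisy, 3)))  # 4 7
```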

Parallel Processing and Optimization:

- Parallelization: Given the independent nature of many bioinformatics tasks (e.g., analyzing different genes or processing multiple samples), parallel processing becomes a natural solution. By distributing tasks across multiple processors or compute nodes, the overall computation time can be significantly reduced.

- GPU Acceleration: Graphics Processing Units (GPUs) offer a large number of cores optimized for parallel operations. Several bioinformatics applications, especially those related to molecular dynamics simulations or deep learning, have been optimized to leverage the parallel processing capabilities of GPUs.

- Algorithm Optimization: As biological data continues to grow, there’s a constant need to refine and optimize algorithms. Even minor efficiency improvements can lead to significant time savings when processing large datasets.

- Distributed Computing: Some tasks are so computationally demanding that they need to be distributed across multiple machines or even clusters. Frameworks like Hadoop and Spark allow for distributed processing of large datasets, enabling tasks like genome-wide association studies (GWAS) or metagenomic analyses to be conducted on massive scales.

- Scalability: As datasets grow, it’s essential that computational solutions can scale accordingly. This scalability ensures that today’s solutions remain relevant as the volume and complexity of biological data increase.
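The independence of many work units is what makes parallelization straightforward: split the input, process the pieces separately, and combine the partial results. The Python sketch below applies this map-reduce pattern to counting G and C bases with any `concurrent.futures` executor; the function names and default chunk count are assumptions for illustration. GC counting is used only because it is easy to verify; it is far too cheap a task to benefit from parallelism in practice.

```python
from concurrent.futures import Executor
from typing import List


def gc_count(chunk: str) -> int:
    """Work unit: count G/C bases in one chunk, independently of all others."""
    return sum(1 for base in chunk.upper() if base in ("G", "C"))


def split(seq: str, n_chunks: int) -> List[str]:
    """Cut a sequence into at most n_chunks non-overlapping pieces."""
    size = max(1, -(-len(seq) // n_chunks))  # ceiling division
    return [seq[i : i + size] for i in range(0, len(seq), size)]


def gc_fraction_parallel(seq: str, executor: Executor, n_chunks: int = 8) -> float:
    """Map gc_count over the chunks on the executor, then reduce by summing."""
    if not seq:
        return 0.0
    total_gc = sum(executor.map(gc_count, split(seq, n_chunks)))
    return total_gc / len(seq)
```

In a script, passing a `ProcessPoolExecutor` (created under an `if __name__ == "__main__":` guard) spreads the chunks across CPU cores, while a `ThreadPoolExecutor` is convenient for testing. Because each chunk is pickled and sent to a worker process, the pattern pays off only when each unit of work, such as aligning one sample's reads, is much more expensive than that transfer.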

In summary, the computational challenges in bioinformatics are multifaceted, stemming from both the volume and the complexity of the data. Addressing these challenges requires a combination of hardware advancements, algorithmic innovations, and software optimization. As biology continues its transition into a data-intensive science, the importance of computational solutions in bioinformatics will only continue to grow.

- Big Data Analysis in Bioinformatics

a. Algorithmic Challenges

The analysis of big biological data requires algorithms that are both efficient and accurate. As the volume and complexity of biological data grow, the algorithms traditionally used in bioinformatics face new challenges and need to adapt to the demands of modern datasets.

Need for Efficient Algorithms to Process Large Datasets:

- Time Complexity: Traditional algorithms, which might have been sufficient for smaller datasets, can become prohibitively slow when applied to big data. For instance, classic dynamic-programming alignment takes time proportional to the product of the two sequence lengths. That quadratic cost is acceptable for short DNA sequences but infeasible for whole genomes, which can run to billions of bases. Thus, there's a need for algorithms with better time complexities.

- Accuracy vs. Efficiency Trade-off: Often, there’s a trade-off between the accuracy of an algorithm and its computational efficiency. With big data, this trade-off becomes more pronounced. While heuristic methods might provide faster results, ensuring they maintain an acceptable level of accuracy is crucial.

- Streaming Algorithms: With the continuous generation of biological data, there’s an increasing interest in algorithms that can process data in a streaming manner, i.e., analyzing data on-the-fly as it’s generated, without the need for storing the entire dataset.
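The quadratic cost of exact alignment is easy to see in the classic dynamic-programming approach to global alignment (the Needleman-Wunsch algorithm). The Python sketch below computes only the optimal score, with simple illustrative scoring (+1 match, -1 mismatch, -1 per gap). It keeps one table row at a time, which brings memory down to linear, but it still visits every cell of the len(a) × len(b) table.

```python
def global_alignment_score(
    a: str, b: str, match: int = 1, mismatch: int = -1, gap: int = -1
) -> int:
    """Optimal global alignment score (Needleman-Wunsch), score only.

    Every cell of the (len(a)+1) x (len(b)+1) table is computed, so running
    time grows as O(len(a) * len(b)). Only the previous row is kept, so memory
    is O(len(b)).
    """
    # Row 0: the empty prefix of `a` against prefixes of `b` (all gaps).
    prev = [j * gap for j in range(len(b) + 1)]
    for i in range(1, len(a) + 1):
        curr = [i * gap] + [0] * len(b)  # column 0: prefix of `a` against nothing
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            curr[j] = max(
                diag,               # align a[i-1] with b[j-1]
                prev[j] + gap,      # a[i-1] against a gap
                curr[j - 1] + gap,  # b[j-1] against a gap
            )
        prev = curr
    return prev[-1]


print(global_alignment_score("ACGT", "ACGT"))  # 4
print(global_alignment_score("AC", "A"))       # 0
```

For two sequences of about 3 billion bases each, the table would have roughly 9 × 10^18 cells, which is why whole-genome aligners rely on heuristics such as seed-and-extend instead of filling the full table.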

Scalability Concerns:

- Dynamic Nature of Biological Data: Biological data is not static. New sequences are continuously added to databases, and experimental conditions or biological samples can introduce variability. Algorithms need to be scalable to accommodate this ever-growing and dynamic nature of data.

- Parallel and Distributed Computing: To achieve scalability, algorithms must be designed or adapted to leverage parallel and distributed computing infrastructures. This adaptation means decomposing problems in a way that allows independent or semi-independent processing of data chunks.

- Data Structures: Efficient data structures, like suffix trees, Bloom filters, and hash tables, play a crucial role in achieving scalability. These structures allow for faster data retrieval, reduced memory footprint, and more efficient computations.

- Multiscale Analysis: Biological processes operate at various scales, from molecular interactions to whole-organism phenotypes. Algorithms need to be scalable across these different biological scales, enabling integrative analyses that capture the complexity of biological systems.
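A Bloom filter illustrates the memory side of the data-structure trade-off: it answers "have I seen this item?" using a fixed-size bit array, at the cost of occasional false positives (but never false negatives). The Python sketch below is a minimal implementation for strings such as k-mers. Deriving the bit positions from a single SHA-256 digest by double hashing is one common design choice, not the only one. The sizing helper uses the standard formulas m = -n ln(p) / (ln 2)^2 bits and k = (m/n) ln 2 hash functions for n items and a target false-positive rate p.

```python
import hashlib
import math
from typing import Iterator, Tuple


def optimal_params(n_items: int, fp_rate: float) -> Tuple[int, int]:
    """Bit-array size m and hash count k for n items at false-positive rate p."""
    m = math.ceil(-n_items * math.log(fp_rate) / (math.log(2) ** 2))
    k = max(1, round(m / n_items * math.log(2)))
    return m, k


class BloomFilter:
    """Set membership with possible false positives but no false negatives."""

    def __init__(self, n_bits: int, n_hashes: int) -> None:
        self.n_bits = n_bits
        self.n_hashes = n_hashes
        self.bits = bytearray((n_bits + 7) // 8)  # all bits start at 0

    def _positions(self, item: str) -> Iterator[int]:
        # Double hashing: position_i = (h1 + i * h2) mod m, from one digest.
        digest = hashlib.sha256(item.encode()).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1  # force odd, hence non-zero
        for i in range(self.n_hashes):
            yield (h1 + i * h2) % self.n_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


m, k = optimal_params(1_000_000, 0.01)
print(m, k)  # ~9.6 million bits (about 1.2 MB) and 7 hash functions
bloom = BloomFilter(m, k)
for kmer in ("ACGTA", "CGTAC", "GTACG"):
    bloom.add(kmer)
print("CGTAC" in bloom)  # True: added items are always reported as present
```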

In conclusion, the algorithmic challenges posed by big data in bioinformatics are substantial. Addressing these challenges requires a blend of theoretical computer science, practical software engineering, and a deep understanding of biology. The goal is to develop algorithms that not only scale with the data but also provide meaningful and accurate insights into the underlying biological processes.

b. Visualization Challenges

Visualization plays a critical role in bioinformatics, aiding researchers in understanding complex biological data, identifying patterns, and drawing meaningful conclusions. However, the vast scale and intricacy of big biological data introduce several challenges in its visualization.

Importance of Visualizing Big Data for Insights:

- Data Interpretation: Visual representations provide an intuitive means to understand and interpret data, making complex patterns and relationships more discernible.

- Pattern Recognition: Humans are inherently good at recognizing visual patterns. Proper visualization can highlight anomalies, trends, or clusters in the data that might be missed in raw numerical formats.

- Decision Making: Visual insights can guide researchers in making informed decisions, whether it’s selecting a specific gene for further study or identifying potential drug targets.

- Communication: Visualization tools provide an effective means to communicate findings to both scientific peers and non-experts. A well-designed visualization can convey complex ideas in an accessible manner.

Tools and Techniques for Effective Visualization:

- Interactive Visualization: Given the multidimensional nature of biological data, static visuals often fall short. Interactive visualization tools, where users can zoom, pan, or query specific data points, are becoming essential. Tools like the UCSC Genome Browser and Cytoscape let users interactively explore genomic data and biological networks, respectively.

- Heatmaps: These are widely used in bioinformatics, especially for visualizing gene expression data. Heatmaps can represent vast amounts of data in a compact form, highlighting clusters or gradients in the data.

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) or t-Distributed Stochastic Neighbor Embedding (t-SNE) reduce the dimensionality of the data, making it more visually interpretable. These methods are particularly useful for visualizing high-dimensional data like gene expression profiles.

- Genome Browsers: These tools allow researchers to visualize various genomic data types (e.g., DNA sequences, gene annotations, and epigenetic marks) aligned to a reference genome. They provide a contextual view, helping researchers understand how different data layers intersect.

- Network Visualization: Many biological phenomena, like protein-protein interactions or gene regulatory networks, can be represented as networks. Tools like Cytoscape allow for the visualization and analysis of these complex networks.

- Hierarchical Visualization: Techniques like dendrograms or cladograms are used to visualize hierarchical relationships, often seen in phylogenetic studies or hierarchical clustering analyses.

- Scalability in Visualization Tools: As datasets grow, visualization tools must be optimized to handle the increased scale without compromising performance. Techniques like data sampling, aggregation, or multi-level visual representations can be employed to maintain responsiveness.

- Integration of Multiple Data Types: Modern biology often involves multi-omics studies, where data from genomics, proteomics, metabolomics, etc., are collected for the same samples. Integrated visualization tools that can overlay and juxtapose these different data types are essential for comprehensive insights.
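Aggregation is one of the simplest ways to keep visualizations responsive: instead of plotting millions of per-base values, a viewer plots one summary per bin. The Python sketch below averages a long numeric track, such as per-position read depth, into at most a requested number of bins. The function name and the choice of the mean as the summary are assumptions for illustration.

```python
from typing import List, Sequence


def bin_means(values: Sequence[float], n_bins: int) -> List[float]:
    """Average consecutive values into at most n_bins bins for plotting."""
    if n_bins <= 0:
        raise ValueError("n_bins must be positive")
    if not values:
        return []
    size = -(-len(values) // n_bins)  # ceiling division: values per bin
    bins = []
    for start in range(0, len(values), size):
        chunk = values[start : start + size]
        bins.append(sum(chunk) / len(chunk))
    return bins


depth = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]  # hypothetical per-base read depth
print(bin_means(depth, 4))  # [2.0, 5.0, 8.0, 10.0]
```

A mean can hide narrow spikes, so viewers often draw the per-bin maximum (or a min-max band) alongside it when outliers matter.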

In summary, while visualization provides a powerful means to understand and communicate biological data, the sheer scale and complexity of big data in bioinformatics demand innovative and scalable visualization techniques. Proper visualization not only makes the data accessible but also facilitates hypothesis generation and scientific discovery.

- Solutions and Best Practices

a. Data Management Platforms

As the volume and diversity of biological data continue to grow, data management platforms play a crucial role in efficiently organizing, storing, and accessing this information. Here’s an introduction to some popular platforms and databases in the field of bioinformatics, along with their features and benefits.

Popular Platforms and Databases:

- NCBI (National Center for Biotechnology Information): NCBI is a comprehensive resource that hosts a wide range of biological databases, including GenBank (for genomic sequences), PubMed (for scientific literature), and databases for proteins, genes, and more. It provides tools for data retrieval, analysis, and visualization.

- Ensembl: Ensembl is a genome browser and data resource that offers genomic data for a variety of species. It provides a user-friendly interface for exploring genome annotations, gene expression data, and comparative genomics information.

- UCSC Genome Browser: This browser allows users to visualize and explore genomic data for various organisms. It offers a wealth of data tracks, including gene annotations, epigenetic marks, and comparative genomics data. Users can also upload and analyze their data.

- UniProt: UniProt is a central hub for protein sequence and functional information. It provides comprehensive protein data, including sequences, annotations, and cross-references to other databases. It’s a valuable resource for protein-centric research.

- KEGG (Kyoto Encyclopedia of Genes and Genomes): KEGG is a database that integrates genomic, chemical, and functional information. It’s particularly useful for pathway analysis and understanding the biological functions of genes and proteins.
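Many of these resources can also be queried programmatically. NCBI, for example, exposes its databases through the Entrez Programming Utilities (E-utilities). The Python sketch below builds an ESearch request URL for PubMed; the search term is an arbitrary example, and `fetch_ids` (a hypothetical helper name) is defined but not called, since it needs network access. NCBI asks clients to keep request rates low (about three per second without an API key), so bulk queries should be throttled.

```python
import json
import urllib.request
from urllib.parse import urlencode

EUTILS_BASE = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/"


def esearch_url(db: str, term: str, retmax: int = 20) -> str:
    """Build an E-utilities ESearch URL that returns matching IDs as JSON."""
    params = {"db": db, "term": term, "retmax": retmax, "retmode": "json"}
    return EUTILS_BASE + "esearch.fcgi?" + urlencode(params)


def fetch_ids(url: str) -> list:
    """Fetch the URL and return the list of record IDs (requires network access)."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.load(response)["esearchresult"]["idlist"]


url = esearch_url("pubmed", "bioinformatics big data", retmax=5)
print(url)
# https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi?db=pubmed&term=bioinformatics+big+data&retmax=5&retmode=json
```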

Features and Benefits of Integrated Platforms:

- Centralized Data: Integrated platforms consolidate data from various sources, ensuring that researchers have access to comprehensive datasets in one place. This reduces the need to search and navigate multiple databases separately.

- Data Standardization: Many integrated platforms enforce data standardization and curation, ensuring that the data is consistent and reliable. This is crucial for accurate analysis and interpretation.

- Cross-Referencing: Integrated platforms often provide cross-references to other databases, allowing researchers to trace data back to its original source or explore related information easily.

- User-Friendly Interfaces: These platforms typically offer user-friendly web interfaces, query tools, and visualization options, making it easier for researchers to access and explore the data.

- Data Analysis Tools: Some integrated platforms provide built-in analysis tools, such as sequence alignment, gene expression analysis, or pathway enrichment analysis, allowing users to perform analyses directly within the platform.

- Customization and Data Upload: Many platforms allow users to upload and analyze their data alongside the integrated datasets. This flexibility is essential for researchers working with proprietary or unique datasets.

- Community Support: Integrated platforms often have active user communities and support forums, where researchers can seek help, share insights, and collaborate with others in the field.

- Data Accessibility: Most integrated platforms are freely accessible to the scientific community, promoting open access to biological data and fostering collaboration.

- Regular Updates: Integrated platforms are regularly updated with new data and features, ensuring that researchers have access to the latest information and tools.

In conclusion, data management platforms and integrated databases are indispensable tools in bioinformatics, providing researchers with centralized access to a wealth of biological data. These platforms simplify data retrieval, analysis, and visualization, facilitating scientific discovery and advancing our understanding of biology.

b. Cloud Computing in Bioinformatics

Cloud computing has transformed bioinformatics, changing the way researchers manage, analyze, and share biological data. Below, we explore the advantages of cloud-based solutions in bioinformatics and discuss cost considerations and scalability.

Benefits of Cloud-Based Solutions:

- Scalability: Cloud providers offer virtually limitless computing and storage resources. Researchers can easily scale up or down based on their needs, making it ideal for handling large biological datasets without investing in expensive infrastructure.

- Accessibility: Cloud-based platforms provide remote access to computational resources and data, allowing researchers to work from anywhere with an internet connection. This accessibility promotes collaboration among scientists worldwide.

- Cost-Efficiency: While there are costs associated with cloud services, they often prove more cost-effective than building and maintaining on-premises infrastructure. Users pay only for the resources they consume, eliminating the need for upfront hardware investments.

- Data Integration: Cloud environments facilitate the integration of diverse data sources and formats. Researchers can centralize data from various experiments, databases, and sources, making it easier to analyze and compare.

- Data Security and Compliance: Leading cloud providers invest heavily in security measures, including data encryption, access controls, and compliance certifications. These measures can strengthen data security and help meet privacy regulations when handling sensitive biological data, although researchers remain responsible for configuring access to their own data correctly.

- Collaboration: Cloud-based platforms support real-time collaboration, enabling multiple researchers to work on the same project simultaneously. Features like shared data storage, collaborative analysis tools, and version control enhance teamwork.

- High-Performance Computing (HPC): Many cloud providers offer access to powerful HPC resources, allowing researchers to run computationally intensive bioinformatics analyses efficiently. This is particularly valuable for tasks like genome assembly, molecular dynamics simulations, or large-scale data processing.

- Data Backup and Disaster Recovery: Cloud providers typically offer automated data backup and disaster recovery solutions, reducing the risk of data loss due to hardware failures or other unforeseen events.

Cost Considerations and Scalability:

- Pay-as-You-Go Model: Cloud computing follows a pay-as-you-go model, where users are billed based on their actual resource consumption. This model allows researchers to control costs by adjusting resource allocation as needed.

- Cost Optimization: Researchers should optimize their cloud resource usage to minimize costs. This includes using cost calculators, employing auto-scaling strategies, and terminating unused resources when not needed.

- Reserved Instances: Cloud providers offer options for purchasing reserved instances, which can result in cost savings compared to on-demand pricing for long-term projects.

- Resource Monitoring: Utilizing cloud monitoring tools helps track resource usage and identify opportunities for cost reduction. Researchers can set up alerts to prevent unexpected cost overruns.

- Scalability: Cloud platforms provide flexibility to scale up or down in response to changing workloads. Researchers can use autoscaling features to automatically adjust the number of computing resources based on demand.

- Resource Selection: Choosing the right type of cloud instances (e.g., virtual machines optimized for compute, memory, or GPU) based on the specific bioinformatics tasks can optimize performance and costs.
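The trade-off between on-demand and reserved pricing comes down to simple arithmetic on expected usage. The sketch below compares one month of each for a single instance. The hourly rates are made-up placeholders, not any provider's real prices. It also assumes a reservation is billed for every hour of the month whether or not the instance runs.

```python
# Hypothetical prices for one compute instance; real rates vary by provider,
# region, instance type, and commitment term.
ON_DEMAND_RATE = 0.40           # USD per hour, billed only for hours used
RESERVED_EFFECTIVE_RATE = 0.25  # USD per hour, billed for every hour of the term
HOURS_PER_MONTH = 730           # approximate average number of hours in a month


def monthly_costs(hours_used: float) -> dict:
    """Compare one month of on-demand vs reserved cost for a given usage."""
    on_demand = ON_DEMAND_RATE * hours_used
    # A reservation is paid for whether or not the instance is running.
    reserved = RESERVED_EFFECTIVE_RATE * HOURS_PER_MONTH
    cheaper = "reserved" if reserved < on_demand else "on_demand"
    return {"on_demand": round(on_demand, 2), "reserved": round(reserved, 2), "cheaper": cheaper}


def break_even_hours() -> float:
    """Monthly usage above which the reservation becomes the cheaper option."""
    return RESERVED_EFFECTIVE_RATE * HOURS_PER_MONTH / ON_DEMAND_RATE


if __name__ == "__main__":
    for hours in (200, 700):
        print(hours, monthly_costs(hours))
    print("break-even hours:", round(break_even_hours(), 2))
```

With these illustrative rates, the break-even point is 456.25 hours a month, or 62.5% utilization. Below that, on-demand pricing wins; a long-running project that keeps the instance busy most of the month favors the reservation.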

In summary, cloud computing has become a game-changer in bioinformatics, offering cost-effective, scalable, and secure solutions for managing and analyzing large biological datasets. Researchers can leverage these cloud-based platforms to accelerate their research, collaborate more effectively, and access cutting-edge computational resources without the need for substantial upfront investments. Proper cost management and scalability planning are essential to reap the full benefits of cloud-based bioinformatics solutions.

c. Open Source and Collaborative Tools

Open-source tools and collaborative development have played a pivotal role in advancing bioinformatics, especially in handling big biological data. Let’s explore the importance of the open-source community and some popular open-source tools and libraries used in big data bioinformatics.

Role of the Open-Source Community:

- Accessibility: Open-source software is freely available to the scientific community, removing financial barriers to access critical tools. This accessibility promotes widespread adoption and democratizes access to cutting-edge bioinformatics solutions.

- Transparency: Open-source software is transparent, allowing researchers to examine the source code, understand how algorithms work, and validate results. This transparency enhances trust and reproducibility in bioinformatics research.

- Collaboration: Open-source projects often involve contributions from a diverse group of developers, researchers, and bioinformaticians worldwide. This collaborative effort leads to the development of robust and versatile tools.

- Customization: Researchers can modify open-source tools to suit their specific research needs. This adaptability ensures that bioinformatics solutions can be tailored to address unique challenges in diverse biological studies.

- Community Support: Open-source projects typically have active user communities and support forums where users can seek help, share insights, report issues, and collaborate on software improvements.

Popular Open-Source Tools and Libraries for Big Data Bioinformatics:

- Bioconductor: Bioconductor is an open-source project that provides a comprehensive suite of tools, packages, and libraries for the analysis and visualization of high-throughput genomics data. It supports various bioinformatics tasks, including gene expression analysis, genomics, and pathway analysis.

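- SAMtools: SAMtools is a widely used open-source software suite for manipulating sequence alignment data in the SAM, BAM, and CRAM formats. It offers tools for sorting, indexing, filtering, viewing, and converting alignment files. Together with its companion package BCFtools, it also supports pileup generation and variant calling. Read alignment itself is performed by separate aligners such as BWA or Bowtie 2.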

- Bedtools: Bedtools is an open-source suite of utilities for manipulating genomic intervals. It provides operations such as intersecting, merging, and subtracting interval sets and computing coverage. It’s valuable for a wide range of genomic analyses.

- GATK (Genome Analysis Toolkit): Developed by the Broad Institute, GATK is an open-source toolkit for variant discovery in high-throughput sequencing data. It includes best practices workflows for genomics analysis.

- Docker and Singularity: While not tools specific to bioinformatics, containerization platforms like Docker and Singularity have become indispensable for reproducible and portable bioinformatics analysis. They allow researchers to package and share analysis pipelines and dependencies.

- Hadoop and Spark: These open-source big data frameworks are not bioinformatics-specific but have been adapted for large-scale bioinformatics data processing. They enable distributed data processing and analysis on cloud or cluster environments.

- Bioinformatics Libraries in Python and R: Python and R offer a wealth of open-source libraries and packages for bioinformatics analysis. Examples include the general-purpose scientific libraries NumPy and SciPy, the domain-specific Biopython library, and Bioconductor packages in R. These libraries provide tools for data manipulation, statistical analysis, and visualization.

- Bioinformatics Workflows: Workflow management systems like Snakemake and Nextflow are open-source tools that allow researchers to define and execute complex bioinformatics workflows, ensuring reproducibility and scalability.

- Jupyter Notebooks: Jupyter is an open-source platform that supports interactive and collaborative data analysis. Researchers in bioinformatics often use Jupyter Notebooks to document and share their analyses.
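To make "manipulating genomic intervals" concrete, here is a minimal pure-Python sketch of the overlap logic behind an interval intersection like the one Bedtools performs. It is an illustration, not Bedtools' own algorithm. It follows the BED convention of 0-based, half-open coordinates. It uses a simple sorted scan that is fine for small inputs but can be quadratic in the worst case.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (chrom, start, end) using BED-style 0-based, half-open coordinates
Interval = Tuple[str, int, int]


def intersect(a: List[Interval], b: List[Interval]) -> List[Interval]:
    """Report the overlapping segment for every overlapping pair of intervals from a and b."""
    # Index b by chromosome and sort each chromosome's intervals by start position.
    b_by_chrom: Dict[str, List[Tuple[int, int]]] = defaultdict(list)
    for chrom, start, end in b:
        b_by_chrom[chrom].append((start, end))
    for intervals in b_by_chrom.values():
        intervals.sort()

    result: List[Interval] = []
    for chrom, start, end in sorted(a):
        for b_start, b_end in b_by_chrom.get(chrom, []):
            if b_start >= end:
                break  # b is sorted by start, so no later interval can overlap
            if b_end > start:  # half-open intervals overlap iff b_start < end and b_end > start
                result.append((chrom, max(start, b_start), min(end, b_end)))
    return result


if __name__ == "__main__":
    a = [("chr1", 100, 200), ("chr2", 0, 50)]
    b = [("chr1", 150, 250), ("chr1", 0, 120), ("chr2", 50, 60)]
    print(intersect(a, b))
```

In the example, `chr2:0-50` and `chr2:50-60` only touch, so under half-open coordinates they do not overlap and produce no output.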

These open-source tools and libraries represent just a fraction of the bioinformatics ecosystem. The collaborative efforts of the open-source community continue to drive innovation and empower researchers to tackle the challenges posed by big biological data. Open source not only accelerates scientific discovery but also promotes transparency and reproducibility in bioinformatics research.

Case Study 1: Genomic Sequencing and Data Storage

Challenge: A genomics research lab was conducting large-scale whole-genome sequencing experiments. They faced challenges in managing and storing the massive amounts of sequencing data generated, which ranged from terabytes to petabytes.

Solution: The lab opted for a cloud-based storage solution, leveraging the scalability of cloud storage services like Amazon S3 or Google Cloud Storage. They also implemented data compression techniques to reduce storage costs. To process the data, they used cloud-based high-performance computing (HPC) clusters for scalability.

Outcome: The cloud-based storage solution allowed the lab to efficiently store and access their genomic data. It provided the flexibility to scale storage capacity as needed. By using cloud-based HPC, they could process the data in parallel, significantly reducing analysis time. This approach enabled the lab to handle large-scale genomic studies more cost-effectively and accelerate their research.
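The case study does not say which compression method the lab used. As a minimal illustration of the idea, the sketch below gzip-compresses synthetic FASTQ text (sequencing reads are commonly stored as gzip-compressed `.fastq.gz` files) and checks that the round trip is lossless. The reads are randomly generated and their quality strings are constant. Real FASTQ files have varied quality scores, so they will usually compress less than this example.

```python
import gzip
import random


def synthetic_fastq(n_reads: int, read_len: int = 100, seed: int = 0) -> bytes:
    """Build illustrative FASTQ text: random bases and a constant quality string."""
    rng = random.Random(seed)
    records = []
    for i in range(n_reads):
        seq = "".join(rng.choice("ACGT") for _ in range(read_len))
        qual = "I" * read_len
        records.append(f"@read_{i}\n{seq}\n+\n{qual}\n")
    return "".join(records).encode("ascii")


raw = synthetic_fastq(2000)
compressed = gzip.compress(raw)

# gzip is lossless: decompressing returns the original bytes exactly.
assert gzip.decompress(compressed) == raw
ratio = len(raw) / len(compressed)
print(f"{len(raw)} bytes -> {len(compressed)} bytes (ratio {ratio:.1f}x)")
```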

Case Study 2: Integrative Multi-Omics Analysis

Challenge: A research consortium aimed to integrate multi-omics data (genomics, transcriptomics, proteomics, and metabolomics) to study the molecular mechanisms of a complex disease. They faced challenges in harmonizing and analyzing data from different platforms and labs.

Solution: The consortium adopted open-source tools and pipelines for data integration, such as Bioconductor packages in R and Python libraries. They defined data standards and formats for metadata and data annotations. Collaborative platforms were used to facilitate data sharing and analysis.

Outcome: By integrating multi-omics data, the consortium gained a comprehensive view of the disease mechanisms. They identified key biomarkers and pathways associated with the disease, which could lead to potential therapeutic targets. The use of open-source tools and standardized data formats ensured data consistency and reproducibility across different research sites.
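One small but recurring step in harmonizing data from different labs is agreeing on sample identifiers before joining tables. The sketch below applies a shared convention (a single `sample_id` column, trimmed and upper-cased) to two hypothetical per-sample tables. It then keeps only samples measured on both platforms. All column names and values are invented for illustration.

```python
import pandas as pd

# Hypothetical per-sample measurements from two platforms; IDs are written differently.
transcriptomics = pd.DataFrame(
    {"sample_id": ["S01", "S02", "S03"], "GENE_A_mrna": [5.1, 6.3, 4.8]}
)
proteomics = pd.DataFrame(
    {"Sample": ["s01 ", "s02", "s04"], "GENE_A_protein": [1.2, 1.9, 0.7]}
)


def harmonize(df: pd.DataFrame, id_col: str) -> pd.DataFrame:
    """Apply a shared ID convention: one 'sample_id' column, trimmed and upper-case."""
    out = df.rename(columns={id_col: "sample_id"})
    out["sample_id"] = out["sample_id"].str.strip().str.upper()
    return out.set_index("sample_id")


# Inner join keeps only samples present in both tables (S01 and S02 here).
integrated = harmonize(transcriptomics, "sample_id").join(
    harmonize(proteomics, "Sample"), how="inner"
)
print(integrated)
```

An inner join is the conservative choice for paired analyses. An outer join (`how="outer"`) would instead keep every sample and mark missing measurements as NaN.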

Case Study 3: Metagenomics Analysis

Challenge: A microbiome research project involved analyzing metagenomic data from environmental samples, resulting in terabytes of shotgun sequencing data. The team needed to perform taxonomic classification and functional annotation on this data.

Solution: The researchers used cloud-based metagenomics analysis platforms that offered scalable computational resources. They employed open-source metagenomics analysis pipelines like QIIME 2 and MG-RAST. The results were visualized using interactive tools like Krona charts.

Outcome: The cloud-based approach allowed the team to efficiently process and analyze the large metagenomic datasets. They identified diverse microbial communities and functional profiles in the environmental samples. The interactive visualizations helped communicate the findings to both scientific and non-scientific audiences, facilitating the project’s impact and outreach.
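Before taxonomic results can be charted, per-read classifications are typically summarized into relative abundances per taxon. The sketch below shows that summarization step on a hypothetical list of per-read genus labels, with `None` standing for unclassified reads. It is not the output format of QIIME 2, MG-RAST, or Krona.

```python
from collections import Counter
from typing import Dict, Iterable, Optional


def relative_abundance(assignments: Iterable[Optional[str]]) -> Dict[str, float]:
    """Turn per-read taxon labels into relative abundances that sum to 1.

    Reads left unclassified (None) are excluded before normalizing.
    """
    counts = Counter(label for label in assignments if label is not None)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() lists taxa from most to least abundant
    return {taxon: n / total for taxon, n in counts.most_common()}


# Hypothetical classifier output: one genus label per read
reads = ["Bacteroides", "Escherichia", "Bacteroides", None, "Prevotella", "Bacteroides"]
print(relative_abundance(reads))
```

Whether unclassified reads should be dropped or reported as their own category is a design choice. Dropping them, as here, describes only the classified fraction of the community.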

These case studies illustrate how bioinformatics researchers have tackled big data challenges in diverse contexts. By leveraging cloud computing, open-source tools, and collaborative approaches, they have overcome data storage, integration, and analysis hurdles. The outcomes include accelerated research, valuable insights, and enhanced reproducibility in the field of bioinformatics.

Future Trends and Opportunities in Bioinformatics

Predictive Analytics and Its Role in Bioinformatics:

Predictive analytics, a field that uses data, statistical algorithms, and machine learning techniques to identify the likelihood of future outcomes based on historical data, is poised to play a pivotal role in the future of bioinformatics.

1. Disease Prediction and Personalized Medicine:

- Predictive analytics can analyze large-scale genomics, transcriptomics, and clinical data to predict disease susceptibility and progression. This information can support personalized medicine approaches tailored to an individual’s genetic makeup, with the goal of reducing adverse effects and improving treatment outcomes.

2. Drug Discovery and Target Identification:

- Predictive models can identify potential drug candidates by analyzing chemical structures, biological interactions, and historical drug data. This accelerates drug discovery processes, potentially reducing costs and timeframes.

3. Biomarker Discovery:

- Predictive analytics can uncover novel biomarkers associated with diseases, aiding in early diagnosis and treatment monitoring. This can have a significant impact on healthcare and diagnostics.

4. Drug Response Prediction:

- Personalized treatment plans can be developed by predicting how a patient will respond to specific medications based on their genetic and molecular profiles. This minimizes the trial-and-error approach in drug selection.
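As a minimal sketch of disease-susceptibility prediction, the code below fits a logistic regression by plain gradient descent. The cohort is synthetic: the two predictors are hypothetical stand-ins for, say, a genetic risk score and a clinical biomarker. Logistic regression is chosen here only as a simple, interpretable baseline, not as the method any particular study uses. The accuracy printed is measured on the training data, so it is optimistic. A real model would need evaluation on held-out patients, plus calibration checks.

```python
import numpy as np


def add_intercept(X: np.ndarray) -> np.ndarray:
    """Prepend a column of ones so the first weight acts as the intercept."""
    return np.hstack([np.ones((X.shape[0], 1)), X])


def fit_logistic(X: np.ndarray, y: np.ndarray, lr: float = 0.1, n_iter: int = 2000) -> np.ndarray:
    """Fit logistic regression by batch gradient descent on the mean log-loss."""
    X1 = add_intercept(X)
    w = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X1 @ w)))  # predicted probabilities
        w -= lr * (X1.T @ (p - y)) / len(y)  # gradient of the mean log-loss
    return w


def predict_proba(w: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Predicted probability of disease for each row of X."""
    return 1.0 / (1.0 + np.exp(-(add_intercept(X) @ w)))


# Synthetic cohort: two standardized predictors per person and a 0/1 disease label.
rng = np.random.default_rng(42)
n = 500
X = rng.normal(size=(n, 2))
true_w = np.array([-0.5, 1.5, 1.0])  # intercept, predictor 1, predictor 2
risk = 1.0 / (1.0 + np.exp(-(add_intercept(X) @ true_w)))
y = (rng.random(n) < risk).astype(float)

w = fit_logistic(X, y)
train_accuracy = np.mean((predict_proba(w, X) >= 0.5) == y)
print("fitted weights:", np.round(w, 2), "training accuracy:", round(float(train_accuracy), 3))
```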

Future Challenges and Areas of Growth:

While the future of bioinformatics holds great promise, it also presents several challenges and areas of growth:

1. Data Integration and Standardization:

- Integrating diverse omics data (genomics, proteomics, metabolomics, etc.) and clinical data remains a challenge. Standardization efforts and robust data integration platforms are needed.

2. Ethical and Privacy Concerns:

- As predictive analytics becomes more prevalent in healthcare, ensuring the ethical use of patient data and addressing privacy concerns will be crucial.

3. Interpretability and Explainability:

- Making predictive models in bioinformatics interpretable and explainable is vital for gaining trust and acceptance among healthcare professionals and regulatory bodies.

4. Scalable and Cost-Effective Solutions:

- Bioinformatics analyses often require significant computational resources. Scalable, cost-effective cloud solutions and HPC infrastructures will be essential.

5. Cross-Disciplinary Collaboration:

- Encouraging collaboration between bioinformaticians, biologists, clinicians, and data scientists is essential for tackling complex healthcare challenges effectively.

6. Artificial Intelligence and Deep Learning:

- Advanced AI techniques, including deep learning, will continue to evolve and offer new ways to extract insights from complex biological data.

7. Longitudinal Data Analysis:

- Analyzing longitudinal data over time can provide a deeper understanding of disease progression and the impact of treatments.

8. Multi-Omics Integration:

- Developing methods for integrating multiple omics data types to gain a holistic view of biological systems will remain a focus.

In conclusion, predictive analytics in bioinformatics is poised to drive transformative changes in healthcare and life sciences. However, addressing challenges related to data integration, ethics, and interpretation is crucial for realizing the full potential of predictive analytics in improving human health and advancing scientific knowledge.

Conclusion

In the realm of big data bioinformatics, the challenges are substantial, but so are the solutions. Let’s recap the key challenges and the proactive approaches needed to handle the future influx of biological data.

Challenges in Big Data Bioinformatics:

- Data Volume: The massive volume of biological data, spanning genomics, proteomics, and more, presents storage, processing, and analysis challenges.

- Data Integration: Combining data from diverse sources and standardizing formats are essential for comprehensive analysis.

- Data Quality and Cleaning: Inconsistent, noisy, or missing data can lead to erroneous results. Data preprocessing and validation are critical.

- Computational Challenges: High-performance computing, parallel processing, and optimization are required to process large-scale data efficiently.

- Visualization Challenges: Effective visualization is necessary for understanding complex data, but it can be challenging with big data.

- Data Management: Managing and accessing large datasets efficiently is crucial, necessitating scalable solutions.

Solutions and Proactive Approaches:

- Cloud Computing: Leverage cloud-based solutions for scalable storage and computation, reducing infrastructure costs.

- Open Source Tools: Rely on open-source bioinformatics tools and libraries for transparent and collaborative research.

- Predictive Analytics: Utilize predictive analytics for disease prediction, drug discovery, and personalized medicine.

- Data Integration Platforms: Invest in data integration platforms that can harmonize diverse omics data and clinical information.

- Ethical Data Use: Prioritize ethical use and privacy protection when handling patient data.

- Interpretability: Develop interpretable predictive models to gain trust and facilitate clinical adoption.

- Collaboration: Foster cross-disciplinary collaboration between bioinformaticians, biologists, clinicians, and data scientists.

- Standardization: Establish data standards and formats to ensure data consistency and interoperability.

- Education and Training: Invest in training programs to equip researchers with the skills needed to handle big data effectively.

- Longitudinal Analysis: Embrace longitudinal data analysis for deeper insights into disease progression and treatment response.

- Multi-Omics Integration: Develop methods for integrating multiple omics data types to obtain a holistic view of biological systems.

As we stand on the threshold of an era of unprecedented biological data generation, proactive approaches and innovative solutions are essential. By addressing the challenges, embracing new technologies, and fostering collaboration, we can harness the power of big data bioinformatics to advance our understanding of biology, improve healthcare, and drive scientific discovery.

Practice Exercise 1: Dataset – Gene Expression Data

Dataset Description: You have a gene expression dataset containing the expression levels of thousands of genes for multiple samples. Each row represents a gene, and each column represents a sample.

Exercise:

- Calculate the mean expression level for each gene.

- Identify genes that have significantly different expression levels between two groups of samples (e.g., control vs. treatment) using a statistical test.

Solution/Explanation:

- To calculate the mean expression level for each gene, sum the expression values for each gene and divide by the number of samples.

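```python
import pandas as pd

# Load the gene expression data into a DataFrame.
# Assumes the first column holds gene IDs, so it becomes the row index
# and only numeric sample columns remain.
data = pd.read_csv("gene_expression_data.csv", index_col=0)

# Calculate the mean expression for each gene (across the sample columns)
mean_expression = data.mean(axis=1)
```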

- To identify genes with significantly different expression levels between two groups, you can use a t-test or a non-parametric test like the Wilcoxon rank-sum test. Here’s an example using the t-test:

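```python
from scipy.stats import ttest_ind

# Column names for each group; these sample IDs are placeholders,
# replace them with the sample columns in your own file.
control_samples = ["control_1", "control_2", "control_3"]
treatment_samples = ["treatment_1", "treatment_2", "treatment_3"]

# Split the data into two groups (e.g., control and treatment)
control_group = data[control_samples]
treatment_group = data[treatment_samples]

# Perform a t-test for each gene: axis=1 compares the groups row by row (genes are rows);
# the default axis=0 would test each sample column instead.
t_stats, p_values = ttest_ind(control_group, treatment_group, axis=1)

# Select genes with significant differences (e.g., p-value < 0.05)
significant_genes = data.index[p_values < 0.05]
```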
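When thousands of genes are tested at once, a raw cutoff of p < 0.05 is expected to flag roughly 5% of the truly unchanged genes as significant. A common remedy is to control the false discovery rate with the Benjamini-Hochberg procedure. Below is a minimal NumPy sketch. It ignores NaN p-values, which can arise for genes with no variance in either group. Recent SciPy releases (1.11 and later) also provide `scipy.stats.false_discovery_control`, which performs this adjustment.

```python
import numpy as np


def benjamini_hochberg(p_values) -> np.ndarray:
    """Return Benjamini-Hochberg adjusted p-values in the original order (NaNs stay NaN)."""
    p = np.asarray(p_values, dtype=float)
    adjusted = np.full(p.shape, np.nan)
    finite = np.isfinite(p)
    pf = p[finite]
    m = pf.size
    if m == 0:
        return adjusted
    order = np.argsort(pf)
    # Scale the k-th smallest p-value by m / k
    ranked = pf[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity: take the running minimum from the largest rank downward
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    out = np.empty(m)
    out[order] = np.minimum(ranked, 1.0)
    adjusted[finite] = out
    return adjusted


if __name__ == "__main__":
    print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```

In the exercise, `data.index[benjamini_hochberg(p_values) < 0.05]` would select genes at a 5% false discovery rate instead of a 5% per-gene error rate.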

Practice Exercise 2: Dataset – Clinical Data

Dataset Description: You have clinical data from a medical study, including patient demographics, disease diagnoses, and treatment outcomes.

Exercise:

- Calculate the average age of patients in the study.

- Identify the most common disease diagnosis in the dataset.

- Calculate the overall treatment success rate (percentage of patients who had a positive treatment outcome).

Solution/Explanation:

- To calculate the average age of patients, you can use the mean function on the age column.

```python
import pandas as pd

# Load the clinical data into a DataFrame
data = pd.read_csv("clinical_data.csv")

# Calculate the average age
average_age = data["age"].mean()
```

- To identify the most common disease diagnosis, you can use the value_counts() method on the diagnosis column.

```python
# Find the most common diagnosis (value_counts() sorts counts in descending order)
most_common_diagnosis = data["diagnosis"].value_counts().idxmax()
```

- To calculate the treatment success rate, count the number of patients with positive treatment outcomes and divide by the total number of patients.

```python
# Calculate treatment success rate (percentage of patients whose outcome is "positive")
success_rate = (data["treatment_outcome"] == "positive").mean() * 100
```
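To try these calculations without a CSV file, the sketch below runs them on a tiny invented table. It uses the column names the exercise assumes. The values are illustrative only, not study data. The last calculation is an optional extension that breaks the success rate down by diagnosis.

```python
import pandas as pd

# A small made-up table using the column names the exercise assumes.
data = pd.DataFrame(
    {
        "age": [54, 61, 47, 70, 58],
        "diagnosis": ["diabetes", "hypertension", "diabetes", "asthma", "diabetes"],
        "treatment_outcome": ["positive", "negative", "positive", "positive", "negative"],
    }
)

average_age = data["age"].mean()
most_common_diagnosis = data["diagnosis"].value_counts().idxmax()
success_rate = (data["treatment_outcome"] == "positive").mean() * 100

# Optional extension: success rate (%) within each diagnosis group
success_by_diagnosis = (data["treatment_outcome"] == "positive").groupby(data["diagnosis"]).mean() * 100

print(average_age, most_common_diagnosis, success_rate)
print(success_by_diagnosis)
```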

These exercises provide hands-on practice with realistic datasets and test your understanding of data manipulation and analysis, which are common challenges in big data bioinformatics.