Data Science for Beginners: A Comprehensive Guide with a Focus on Bioinformatics

January 11, 2024

Embark on your data science journey with our comprehensive tutorial, exploring the fundamentals, machine learning, and real-world applications in bioinformatics.

I. Introduction to Data Science

A. Definition and Scope of Data Science

Data Science is a multidisciplinary field that combines various techniques, processes, algorithms, and systems to extract valuable insights and knowledge from structured and unstructured data. It encompasses a broad range of skills and methodologies, including statistics, machine learning, data analysis, and domain-specific expertise. The primary objective of data science is to uncover patterns, trends, correlations, and other valuable information that can aid in decision-making, problem-solving, and the development of data-driven strategies.

- Definition of Data Science: Data Science can be defined as the study of extracting meaningful information, knowledge, and insights from large and complex datasets. It involves the application of various scientific methods, processes, algorithms, and systems to analyze, interpret, and visualize data.

- Scope of Data Science: The scope of data science is vast and continues to evolve with advancements in technology and data analytics. Key components of the scope include:

a. Data Collection: Gathering relevant and comprehensive datasets from various sources, including structured databases, unstructured text, sensor data, and more.

b. Data Cleaning and Preprocessing: Ensuring data quality by handling missing values, outliers, and inconsistencies. Preprocessing involves transforming raw data into a format suitable for analysis.

c. Exploratory Data Analysis (EDA): Investigating and visualizing data to identify patterns, trends, and anomalies. EDA helps in understanding the characteristics of the dataset.

d. Statistical Analysis: Applying statistical methods to draw inferences and validate findings. This may involve hypothesis testing, regression analysis, and other statistical techniques.

e. Machine Learning: Developing and deploying algorithms that enable computers to learn patterns from data and make predictions or decisions without explicit programming. Machine learning is a core aspect of data science.

f. Data Visualization: Communicating insights effectively through graphical representations. Visualization tools help in presenting complex information in a clear and understandable manner.

g. Big Data Analytics: Handling and analyzing large volumes of data, often in real time, using distributed computing frameworks. Big data technologies like Hadoop and Spark play a crucial role in this aspect.

h. Domain Expertise: Integrating subject matter expertise into the analysis process to ensure that the results are meaningful and applicable in specific industries or domains.

i. Ethics and Privacy: Considering ethical implications and ensuring the responsible use of data, including privacy concerns and compliance with regulations.

j. Predictive Modeling and Optimization: Building models to make predictions about future events or optimize decision-making processes based on historical data.

As businesses and industries increasingly recognize the importance of data-driven decision-making, the demand for skilled data scientists continues to grow, making data science a dynamic and essential field in the modern era.

B. Key Components of Data Science

Data Science involves a variety of components and processes that collectively contribute to the extraction of meaningful insights from data. These components can be broadly categorized into the following key areas:

- Data Collection:

- Data Sourcing: Gathering data from diverse sources, such as databases, APIs, web scraping, sensors, logs, and more.

- Data Ingestion: Importing and integrating collected data into a storage system for further processing.

- Data Cleaning and Preprocessing:

- Data Cleaning: Identifying and handling missing values, outliers, and errors to ensure data quality.

- Data Transformation: Converting raw data into a suitable format for analysis and modeling.

- Exploratory Data Analysis (EDA):

- Descriptive Statistics: Summarizing and describing key characteristics of the dataset.

- Data Visualization: Creating visual representations to explore patterns, trends, and relationships within the data.

- Statistical Analysis:

- Hypothesis Testing: Formulating and testing hypotheses to make inferences about the population from which the data is sampled.

- Regression Analysis: Examining relationships between variables and predicting outcomes based on statistical models.

- Machine Learning:

- Supervised Learning: Training models on labeled data to make predictions or classifications.

- Unsupervised Learning: Identifying patterns and structures in data without predefined labels.

- Feature Engineering: Selecting and transforming relevant features to enhance model performance.

- Model Evaluation: Assessing the performance of machine learning models using metrics like accuracy, precision, recall, and F1 score.

- Data Visualization:

- Charts and Graphs: Creating visual representations, such as scatter plots, bar charts, and heatmaps, to convey insights effectively.

- Dashboards: Building interactive dashboards for real-time monitoring and decision support.

- Big Data Technologies:

- Hadoop and Spark: Handling and processing large volumes of data using distributed computing frameworks.

- NoSQL Databases: Storing and retrieving unstructured or semi-structured data efficiently.

- Domain Expertise:

- Industry Knowledge: Integrating domain-specific expertise to contextualize findings and make informed business decisions.

- Ethics and Privacy:

- Data Governance: Implementing policies and procedures to ensure ethical data use and compliance with privacy regulations.

- Fairness and Bias Detection: Addressing and mitigating biases in data and models to ensure equitable outcomes.

- Predictive Modeling and Optimization:

- Algorithm Selection: Choosing appropriate algorithms based on the nature of the problem and data.

- Optimization Techniques: Improving processes and decision-making through the application of optimization methods.
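To make the model-evaluation metrics listed above more concrete, here is a minimal sketch using scikit-learn's `sklearn.metrics` functions on a small, made-up set of binary labels (the labels are illustrative assumptions, not real model output). Precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is their harmonic mean.

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Hypothetical ground-truth labels and predictions from a binary classifier
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

# For these labels: TP = 3, TN = 2, FP = 2, FN = 1
accuracy = accuracy_score(y_true, y_pred)    # (TP + TN) / total = 5 / 8
precision = precision_score(y_true, y_pred)  # TP / (TP + FP) = 3 / 5
recall = recall_score(y_true, y_pred)        # TP / (TP + FN) = 3 / 4
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall

print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Accuracy alone can look good on imbalanced data, which is why precision and recall are usually reported alongside it.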

These components work together in a cyclical manner, with data scientists iteratively refining and enhancing their analyses to derive deeper insights and improve decision-making processes. The dynamic nature of data science requires continuous learning and adaptation to stay abreast of new tools, techniques, and developments in the field.

C. Importance of Data Science in Today’s World

Data Science plays a pivotal role in various aspects of contemporary society, business, and research. Its importance stems from the transformative impact it has on decision-making processes, innovation, and problem-solving. Here are key reasons highlighting the significance of Data Science in today’s world:

- Informed Decision Making:

- Data Science empowers organizations to make data-driven decisions by extracting valuable insights from large and complex datasets. This leads to more informed and strategic decision-making processes.

- Business Intelligence and Strategy:

- Organizations leverage data science to gain a competitive edge through the analysis of market trends, customer behavior, and industry dynamics. This helps in formulating effective business strategies and responding to changing market conditions.

- Innovation and Product Development:

- Data Science contributes to innovation by identifying opportunities for improvement and uncovering patterns that can lead to the development of new products and services. It facilitates a culture of continuous improvement and creativity.

- Optimizing Operations and Efficiency:

- By analyzing operational data, organizations can identify inefficiencies, streamline processes, and optimize resource allocation. This results in improved efficiency, reduced costs, and better overall performance.

- Personalization and Customer Experience:

- Data Science enables personalized customer experiences by analyzing customer preferences, behaviors, and feedback. Businesses can tailor their products, services, and marketing strategies to individual customer needs, enhancing overall satisfaction.

- Healthcare and Life Sciences:

- In healthcare, Data Science is used for disease prediction, drug discovery, patient diagnosis, and personalized medicine. It contributes to advancements in medical research and facilitates more accurate and timely healthcare interventions.

- Fraud Detection and Security:

- Data Science plays a crucial role in identifying and preventing fraudulent activities by analyzing patterns and anomalies in transactional data. This is essential in financial services, e-commerce, and various other industries to ensure the security of transactions and systems.

- Urban Planning and Smart Cities:

- Cities utilize data science to analyze urban patterns, traffic flows, and resource utilization for effective urban planning. The concept of smart cities leverages data for improved infrastructure, energy efficiency, and citizen services.

- Scientific Research and Exploration:

- Data Science is instrumental in scientific research, ranging from climate studies and astronomy to genetics and physics. It facilitates data analysis, simulation modeling, and the interpretation of complex scientific phenomena.

- Social Impact and Policy Planning:

- Governments and non-profit organizations use Data Science to address social issues, plan public policies, and allocate resources more efficiently. This contributes to better governance and social development.

- Continuous Learning and Adaptation:

- Data Science promotes a culture of continuous learning and adaptation as new data sources, algorithms, and technologies emerge. This dynamic nature ensures that organizations stay relevant and resilient in the face of evolving challenges.

In summary, Data Science is a driving force behind innovation, efficiency, and progress across various domains, making it an indispensable tool in today’s interconnected and data-rich world.

D. Career Opportunities in Data Science

Data Science has emerged as a rapidly growing field with a wide range of career opportunities across industries. The demand for skilled professionals in this field continues to outpace the supply, making it an attractive and lucrative career path. Here are some key career opportunities in Data Science:

- Data Scientist:

- Data Scientists are responsible for extracting insights from data using statistical analysis, machine learning, and data modeling techniques. They develop predictive models, algorithms, and conduct exploratory data analysis to support decision-making.

- Data Analyst:

- Data Analysts focus on interpreting and analyzing data to provide actionable insights. They work with data visualization tools, conduct statistical analysis, and communicate findings to help organizations make informed decisions.

- Machine Learning Engineer:

- Machine Learning Engineers design, develop, and deploy machine learning models and algorithms. They work on creating systems that can learn and make predictions or decisions without explicit programming.

- Big Data Engineer:

- Big Data Engineers specialize in handling and processing large volumes of data using distributed computing frameworks like Hadoop and Spark. They design and maintain systems for efficient data storage and retrieval.

- Business Intelligence (BI) Developer:

- BI Developers focus on creating tools and systems for collecting, analyzing, and visualizing business data. They often work with BI tools to provide organizations with actionable business intelligence.

- Data Architect:

- Data Architects design and create data systems and structures to ensure efficient data storage, retrieval, and analysis. They play a crucial role in defining data architecture strategies and standards.

- Statistician:

- Statisticians apply statistical methods to analyze and interpret data. They work on designing experiments, collecting and analyzing data, and drawing conclusions that inform decision-making processes.

- Data Engineer:

- Data Engineers build the infrastructure for data generation, transformation, and storage. They design, construct, test, and maintain data architectures (e.g., databases, large-scale processing systems).

- Quantitative Analyst:

- Quantitative Analysts, often found in finance and investment sectors, use mathematical models to analyze financial data and make predictions about market trends. They contribute to risk management and investment strategies.

- Data Science Consultant:

- Data Science Consultants work with various clients to help them solve specific business problems using data-driven approaches. They offer expertise in data analysis, model development, and strategic decision-making.

- Research Scientist (Data Science):

- Research Scientists in Data Science focus on advancing the field through academic research. They contribute to the development of new algorithms, models, and methodologies.

- AI (Artificial Intelligence) Engineer:

- AI Engineers work on developing systems that exhibit intelligent behavior. They may specialize in natural language processing, computer vision, or other AI-related domains.

- IoT (Internet of Things) Data Scientist:

- With the increasing prevalence of IoT devices, professionals in this role focus on analyzing data generated by interconnected devices to derive insights and optimize processes.

- Health Data Scientist:

- Health Data Scientists work in the healthcare sector, applying data science techniques to analyze medical data, predict disease outbreaks, and contribute to personalized medicine.

- Educator/Trainer in Data Science:

- Professionals with expertise in Data Science can pursue careers in education and training, helping the next generation of data scientists develop their skills.

These career opportunities highlight the diverse and interdisciplinary nature of Data Science, offering roles for individuals with backgrounds in mathematics, statistics, computer science, engineering, and other related fields. The field is dynamic, and professionals often find themselves at the forefront of technological advancements and innovation. Continuous learning and staying updated with industry trends are essential for a successful career in Data Science.

II. Getting Started with Data Science

A. Setting up Your Data Science Environment

Setting up a robust data science environment is crucial for conducting effective analyses and building models. The environment should include the necessary tools, programming languages, and libraries for data manipulation, analysis, and visualization. Here’s a step-by-step guide to setting up your data science environment:

- Choose a Programming Language:

- Common choices for data science include Python and R. Python, with libraries like NumPy, pandas, and scikit-learn, is widely used for its versatility and extensive support in the data science community.

- Install Python and/or R:

- If you choose Python, install the Anaconda distribution, which comes with popular data science libraries pre-installed. For R, you can install R and RStudio, a popular integrated development environment (IDE) for R.

- Set Up a Virtual Environment:

- Consider creating a virtual environment to isolate your data science projects. Tools like virtualenv for Python or renv for R allow you to manage dependencies and versions for different projects.

- Example for Python (bash):

pip install virtualenv

virtualenv myenv

source myenv/bin/activate # On Windows: .\myenv\Scripts\activate

# Alternatively, Python's built-in venv module needs no extra install: python -m venv myenv

- Example for R (using RStudio):

install.packages("renv")

library(renv)

renv::init()


- Choose an Integrated Development Environment (IDE):

- Popular IDEs for Python include Jupyter Notebooks, VSCode, and PyCharm. For R, RStudio is a widely used IDE. Choose one that suits your preferences and workflow.

- Install Data Science Libraries:

- For Python, install essential libraries using (bash):

pip install numpy pandas scikit-learn matplotlib seaborn

- For R, install libraries using:

install.packages(c("tidyverse", "caret", "ggplot2"))


- Version Control:

- Set up a version control system like Git to track changes in your code and collaborate with others. Platforms like GitHub, GitLab, or Bitbucket provide remote repositories for your projects.

- Learn Basic Command Line Usage:

- Familiarize yourself with basic command line operations. It will help you navigate directories, manage files, and run commands efficiently.

- Explore Data Science Platforms:

- Platforms like Kaggle, Google Colab, or JupyterHub provide online environments with pre-installed data science tools. They can be useful for learning and experimenting without local installations.

- Document Your Work:

- Use tools like Jupyter Notebooks or R Markdown to document your analyses and code. Good documentation is essential for reproducibility and collaboration.

- Explore Cloud Services:

- Consider using cloud services like AWS, Google Cloud, or Microsoft Azure for scalable computing power and storage. Many data science tools and platforms integrate seamlessly with cloud services.

- Join Data Science Communities:

- Engage with the data science community through forums, social media, and meetups. Platforms like Stack Overflow, Reddit (r/datascience), and LinkedIn have active data science communities.

- Continuous Learning:

- Data science is a dynamic field, so stay updated with the latest developments. Follow blogs, attend webinars, and participate in online courses to enhance your skills.

By following these steps, you’ll establish a solid foundation for your data science environment, allowing you to efficiently work on projects, collaborate with others, and stay connected with the broader data science community.

To install Python and Jupyter Notebooks, you can follow these steps. Note that these instructions assume you’re using a Windows operating system. If you are using macOS or Linux, the steps may be slightly different.

Step 1: Install Python

- Download Python: Visit the official Python website (https://www.python.org/downloads/) and download the latest version of Python.

- Install Python: Run the downloaded installer and follow the installation wizard. Before clicking Install, make sure to check the box that adds Python to PATH (labeled “Add Python to PATH” or “Add python.exe to PATH”, depending on the installer version).

- Verify Installation: Open a command prompt (search for “cmd” or “Command Prompt” in the Start menu) and type the following command to check if Python is installed:

python --version

You should see the Python version number, indicating a successful installation.

Step 2: Install Jupyter Notebooks

- Install Jupyter via pip: Open the command prompt and run the following command to install Jupyter using the Python package manager, pip:

pip install jupyter

- Start Jupyter Notebook: After installation, you can start Jupyter Notebook by typing the following command in the command prompt:

jupyter notebook

This will open a new tab in your web browser with the Jupyter Notebook dashboard.

- Create a New Notebook:

- In the Jupyter Notebook dashboard, click on the “New” button and select “Python 3” to create a new Python notebook.

Now, you have Python and Jupyter Notebooks installed on your system. You can start using Jupyter Notebooks to write and execute Python code in an interactive environment.

Optional: Use Anaconda Distribution

Alternatively, you can use the Anaconda distribution, which is a popular distribution for Python that comes with Jupyter Notebooks and many other data science libraries pre-installed.

- Download Anaconda: Visit the Anaconda website (https://www.anaconda.com/products/individual) and download the latest version of Anaconda for your operating system.

- Install Anaconda: Run the downloaded installer and follow the installation instructions. During installation, you can choose to add Anaconda to your system PATH.

- Open Anaconda Navigator: After installation, you can open Anaconda Navigator from the Start menu and launch Jupyter Notebook from there.

Using Anaconda can be convenient, especially for managing different Python environments and installing additional data science packages.

Now you’re ready to start working with Python and Jupyter Notebooks for your data science projects!
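Once Python and the libraries from the installation steps are in place, a short script can confirm which packages are available and at what version. The sketch below uses the standard library's `importlib.metadata` module (Python 3.8+); the helper name `installed_versions` and the package list are illustrative assumptions that mirror the `pip install` command given earlier.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as used by pip (note: scikit-learn is imported as "sklearn")
packages = ["numpy", "pandas", "scikit-learn", "matplotlib", "seaborn"]


def installed_versions(names):
    """Return a dict mapping each package name to its version, or None if missing."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions(packages).items():
    print(f"{name:<14}{ver or 'NOT INSTALLED'}")
```

Run it in a notebook cell or from the command prompt; any package reported as NOT INSTALLED can be added with `pip install`.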

Let’s provide a brief overview of three popular data science libraries in Python: NumPy, Pandas, and Matplotlib.

1. NumPy:

Purpose:

- Numerical Computing: NumPy is a powerful library for numerical operations in Python. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently.

Key Features:

- Arrays: The fundamental data structure in NumPy is the ndarray (n-dimensional array), which allows for efficient manipulation of large datasets.

- Mathematical Operations: NumPy provides a wide range of mathematical functions for operations like linear algebra, statistical analysis, random number generation, and more.

- Broadcasting: Broadcasting is a powerful feature that allows operations on arrays of different shapes and sizes without the need for explicit loops.

Example:

import numpy as np

# Creating a NumPy array

arr = np.array([1, 2, 3, 4, 5])

# Performing mathematical operations

mean_value = np.mean(arr)
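Broadcasting, listed among the key features, is easiest to see with arrays of different shapes. A minimal sketch (the arrays are arbitrary examples):

```python
import numpy as np

matrix = np.array([[1, 2, 3],
                   [4, 5, 6]])   # shape (2, 3)
row = np.array([10, 20, 30])     # shape (3,)
col = np.array([[100], [200]])   # shape (2, 1)

# The row is "stretched" across both rows of the matrix, with no explicit loop
result = matrix + row            # [[11, 22, 33], [14, 25, 36]]

# The column is stretched across all three columns
col_result = matrix + col        # [[101, 102, 103], [204, 205, 206]]

# A scalar broadcasts to every element
scaled = matrix * 2              # [[2, 4, 6], [8, 10, 12]]
```

Broadcasting works when, comparing shapes from the right, each pair of dimensions is equal or one of them is 1; otherwise NumPy raises a ValueError.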

2. Pandas:

Purpose:

- Data Manipulation and Analysis: Pandas is a data manipulation library that simplifies data analysis tasks. It provides data structures like DataFrame for efficient manipulation and analysis of structured data.

Key Features:

- DataFrame: A two-dimensional, tabular data structure with labeled axes (rows and columns).

- Data Cleaning: Pandas helps in handling missing data, reshaping datasets, merging and joining datasets, and more.

- Data Selection and Indexing: Intuitive methods for selecting and indexing data within DataFrames.

- Time Series Analysis: Pandas provides support for time series data and datetime manipulations.

Example:

import pandas as pd

# Creating a Pandas DataFrame

data = {'Name': ['Alice', 'Bob', 'Charlie'],

'Age': [25, 30, 22]}

df = pd.DataFrame(data)

# Selecting data

average_age = df['Age'].mean()
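The time series support mentioned above can be sketched with a `DatetimeIndex`. The dates and values below are made up for illustration; `pd.date_range`, label-based slicing with `.loc`, and `resample` are standard pandas APIs.

```python
import pandas as pd

# Six days of hypothetical daily measurements
dates = pd.date_range("2024-01-01", periods=6, freq="D")
series = pd.Series([3, 5, 2, 8, 6, 4], index=dates)

# Label-based slicing by date; both endpoints are included
first_three = series.loc["2024-01-01":"2024-01-03"]   # 3, 5, 2

# Group into 2-day bins and sum each bin
two_day_totals = series.resample("2D").sum()          # 8, 10, 10

print(first_three)
print(two_day_totals)
```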

3. Matplotlib:

Purpose:

- Data Visualization: Matplotlib is a comprehensive library for creating static, animated, and interactive visualizations in Python. It provides a wide variety of plotting options for conveying insights from data.

Key Features:

- Plots and Charts: Matplotlib supports various plot types, including line plots, scatter plots, bar plots, histograms, and more.

- Customization: Users can customize the appearance of plots, including colors, labels, titles, and annotations.

- Multiple Subplots: Create multiple subplots within a single figure for side-by-side visualizations.

- Integration with Jupyter Notebooks: Matplotlib seamlessly integrates with Jupyter Notebooks for interactive plotting.

Example:

import matplotlib.pyplot as plt

# Creating a simple line plot

x = [1, 2, 3, 4, 5]

y = [2, 4, 6, 8, 10]

plt.plot(x, y, label='Linear Function')

plt.xlabel('X-axis')

plt.ylabel('Y-axis')

plt.title('Simple Line Plot')

plt.legend()

plt.show()
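The multiple-subplots feature can be sketched with `plt.subplots`, which returns a figure and its axes so each panel is drawn on its own `Axes` object. The data and titles here are arbitrary examples:

```python
import matplotlib.pyplot as plt

x = [1, 2, 3, 4, 5]

# One figure with a row of two subplots
fig, (ax_left, ax_right) = plt.subplots(1, 2, figsize=(8, 3))

ax_left.plot(x, [2, 4, 6, 8, 10], label="Linear Function")
ax_left.set_title("Line Plot")
ax_left.legend()

ax_right.bar(x, [1, 4, 9, 16, 25], color="orange")
ax_right.set_title("Bar Plot of Squares")

fig.tight_layout()  # reduce overlap between subplot titles and labels
plt.show()
```

Working with the returned `Axes` objects (rather than the `plt.*` shortcuts) makes it clear which panel each call affects.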

These libraries form the foundation of many data science and machine learning projects in Python. Combining NumPy for numerical operations, Pandas for data manipulation, and Matplotlib for data visualization provides a powerful toolkit for working with and analyzing datasets.

B. Understanding Data Types and Data Structures

Data types and data structures are fundamental concepts in programming and data science. They determine how data is represented, stored, and manipulated in a programming language. In Python, which is widely used in data science, there are several built-in data types and structures. Let’s explore some of the key ones:

1. Data Types:

a. Numeric Types:

- int: Integer data type (e.g., 5, -10).

- float: Floating-point data type (e.g., 3.14, -0.5).

Example:

integer_var = 10

float_var = 3.14

b. Boolean Type:

- bool: Represents boolean values (True or False).

Example:

is_true = True

is_false = False

c. String Type:

- str: Represents textual data enclosed in single or double quotes.

Example:

text = "Hello, Data Science!"

d. List Type:

- list: Ordered, mutable collection of elements.

Example:

my_list = [1, 2, 3, "apple", True]

e. Tuple Type:

- tuple: Ordered, immutable collection of elements.

Example:

my_tuple = (1, 2, 3, "banana", False)

f. Set Type:

- set: Unordered collection of unique elements.

Example:

my_set = {1, 2, 3, "orange", True} # True equals 1 in Python, so it counts as a duplicate and the set has 4 elements

g. Dictionary Type:

- dict: Mutable collection of key-value pairs with unique keys. Since Python 3.7, dictionaries preserve insertion order.

Example:

my_dict = {"name": "John", "age": 25, "city": "New York"}
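The practical difference between these types shows up when you try to change them. A minimal sketch with arbitrary example values:

```python
# Lists are mutable: elements can be changed, added, and removed
my_list = [1, 2, 3]
my_list.append(4)
my_list[0] = 100                 # my_list is now [100, 2, 3, 4]

# Tuples are immutable: item assignment raises TypeError
my_tuple = (1, 2, 3)
try:
    my_tuple[0] = 100
except TypeError as err:
    print("Cannot modify a tuple:", err)

# Sets keep only unique elements and support fast membership tests
my_set = {1, 2, 2, 3}            # stored as {1, 2, 3}
print(2 in my_set)               # True

# Dictionaries map keys to values; look up or update by key
my_dict = {"name": "John", "age": 25}
my_dict["age"] = 26
print(my_dict["name"], my_dict["age"])   # John 26
```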

2. Data Structures:

a. Arrays (NumPy):

- numpy.ndarray: Multidimensional arrays for numerical computing.

Example:

import numpy as np

my_array = np.array([[1, 2, 3], [4, 5, 6]])

b. DataFrames (Pandas):

- pandas.DataFrame: Two-dimensional, labeled data structure for data manipulation and analysis.

Example:

import pandas as pd

data = {"Name": ["Alice", "Bob", "Charlie"],
        "Age": [25, 30, 22]}

df = pd.DataFrame(data)

c. Series (Pandas):

- pandas.Series: One-dimensional labeled array; each column of a DataFrame is a Series.

Example:

import pandas as pd

ages = pd.Series([25, 30, 22], name="Age")
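The three structures are closely related: selecting one column of a DataFrame gives a Series, and a Series exposes its data as a NumPy array. A minimal sketch reusing the small example data:

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Charlie"],
                   "Age": [25, 30, 22]})

ages = df["Age"]                # one column name -> a Series
print(type(ages).__name__)      # Series
print(ages.max())               # 30

subset = df[["Name", "Age"]]    # a list of column names -> a DataFrame
print(type(subset).__name__)    # DataFrame

values = ages.to_numpy()        # the underlying data as a NumPy ndarray
print(values.shape)             # (3,)
```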

Understanding these data types and structures is crucial for effective data manipulation, analysis, and visualization in data science. They provide the building blocks for organizing and processing information in a way that facilitates meaningful insights and informed decision-making.

C. Basic Data Manipulation and Exploration Techniques

Data manipulation and exploration are essential steps in the data science workflow. They involve tasks such as cleaning, transforming, and summarizing data to gain insights and prepare it for analysis. In Python, the Pandas library is commonly used for these tasks. Let’s explore some basic techniques:

1. Data Loading:

import pandas as pd

# Load a CSV file into a DataFrame

df = pd.read_csv('your_data.csv')

# Display the first few rows of the DataFrame

print(df.head())

2. Data Exploration:

a. Descriptive Statistics:

# Display summary statistics

print(df.describe())

# Count how many times each unique value appears in a column

print(df['column_name'].value_counts())

b. Data Information:

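# Display basic information about the DataFrame (column types, non-null counts, memory usage)

df.info() # info() prints its report directly and returns None, so print() is not needed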

3. Data Selection and Filtering:

# Select specific columns

selected_columns = df[['column1', 'column2']]

# Filter rows based on conditions

filtered_data = df[df['column_name'] > 50]

4. Handling Missing Data:

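# Check for missing values (count per column)

print(df.isnull().sum())

# Option 1: drop rows with missing values

df = df.dropna()

# Option 2: fill missing values in a column instead (here with 0); assigning the result back

# avoids chained inplace=True calls, which recent pandas versions warn about

df['column_name'] = df['column_name'].fillna(0)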
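Dropping and filling are alternatives, and they give different results on the same data. A minimal sketch on a small, made-up DataFrame (the column names `score` and `group` are hypothetical), filling with the column mean as one common choice:

```python
import numpy as np
import pandas as pd

# Made-up data with two missing scores
df = pd.DataFrame({"score": [10.0, np.nan, 30.0, np.nan],
                   "group": ["a", "b", "a", "b"]})

missing_counts = df.isnull().sum()   # score: 2, group: 0

# Alternative 1: drop incomplete rows (2 of the 4 rows remain)
dropped = df.dropna()

# Alternative 2: keep every row and fill the gaps with the column mean (20.0)
filled = df.fillna({"score": df["score"].mean()})

print(dropped)
print(filled)
```

Dropping loses information when many rows are incomplete, while filling keeps the rows but can understate variability; which is appropriate depends on the data.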

5. Data Aggregation:

# Group by a column and calculate mean

grouped_data = df.groupby('category_column')['numeric_column'].mean()

6. Data Visualization:

import matplotlib.pyplot as plt

import seaborn as sns

# Create a histogram

plt.hist(df['numeric_column'], bins=20, color='skyblue', edgecolor='black')

plt.title('Histogram of Numeric Column')

plt.xlabel('Value')

plt.ylabel('Frequency')

plt.show()

# Create a boxplot

sns.boxplot(x='category_column', y='numeric_column', data=df)

plt.title('Boxplot of Numeric Column by Category')

plt.show()

7. Data Transformation:

# Create a new column based on existing columns

df['new_column'] = df['column1'] + df['column2']

# Apply a function to a column

df['column_name'] = df['column_name'].apply(lambda x: x**2)

8. Sorting Data:

# Sort the DataFrame by a column

df_sorted = df.sort_values(by='column_name', ascending=False)

9. Merging DataFrames:

# Merge two DataFrames based on a common column

merged_df = pd.merge(df1, df2, on='common_column', how='inner')
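The `how` argument controls which keys survive a merge. A minimal sketch with two small, hypothetical DataFrames sharing `common_column`:

```python
import pandas as pd

df1 = pd.DataFrame({"common_column": [1, 2, 3], "left_value": ["a", "b", "c"]})
df2 = pd.DataFrame({"common_column": [2, 3, 4], "right_value": ["x", "y", "z"]})

inner = pd.merge(df1, df2, on="common_column", how="inner")  # keys in both: 2, 3
left = pd.merge(df1, df2, on="common_column", how="left")    # all keys of df1: 1, 2, 3
outer = pd.merge(df1, df2, on="common_column", how="outer")  # keys in either: 1, 2, 3, 4

# In the left merge, key 1 has no match in df2, so its right_value is NaN
print(left)
```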

10. Exporting Data:

# Export DataFrame to a CSV file

df.to_csv('output_data.csv', index=False)
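Because the snippets in this section refer to a placeholder file and placeholder column names, here is a self-contained sketch that runs several of the steps together on a tiny, made-up CSV read from memory with `io.StringIO` (the data and column names are assumptions for illustration only):

```python
import io

import pandas as pd

# A tiny made-up CSV kept in memory in place of 'your_data.csv'
csv_text = """category_column,numeric_column
A,10
B,55
A,70
B,
C,90
"""
df = pd.read_csv(io.StringIO(csv_text))

print(df.isnull().sum())      # one missing value in numeric_column
df = df.dropna()              # remove the incomplete row

high = df[df["numeric_column"] > 50]                            # filter rows
means = df.groupby("category_column")["numeric_column"].mean()  # aggregate
ranked = df.sort_values(by="numeric_column", ascending=False)   # sort

print(means)
print(ranked.head())
```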

These basic techniques form the foundation for more advanced data manipulation and analysis tasks in data science. As you become more familiar with these operations, you’ll be better equipped to handle diverse datasets and extract meaningful insights from them.

III. Fundamentals of Statistics for Data Science

A. Descriptive Statistics

Descriptive statistics involve summarizing, organizing, and describing the main features of a dataset. These statistics provide a concise overview of the essential characteristics of the data, allowing data scientists to understand and interpret the information. Here are some key concepts in descriptive statistics:

1. Measures of Central Tendency:

a. Mean:

- The average value of a dataset, calculated by summing all values and dividing by the number of observations.

import numpy as np

data = [1, 2, 3, 4, 5]

mean_value = np.mean(data)

b. Median:

- The middle value of a sorted dataset. If the dataset has an even number of observations, the median is the average of the two middle values.

import numpy as np

data = [1, 2, 3, 4, 5]

median_value = np.median(data)

c. Mode:

- The value(s) that appear most frequently in a dataset.

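from scipy import stats

data = [1, 2, 2, 3, 4, 4, 5]

# keepdims=False (SciPy 1.9+) returns a scalar; with ties (2 and 4 here), SciPy reports only one mode

mode_value = stats.mode(data, keepdims=False).mode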
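Because 2 and 4 both appear twice, this dataset has two modes. SciPy's `mode` reports only one of them; Python's standard library function `statistics.multimode` (Python 3.8+) returns every most-frequent value, in the order they first appear:

```python
import statistics

data = [1, 2, 2, 3, 4, 4, 5]

modes = statistics.multimode(data)
print(modes)   # [2, 4]
```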

2. Measures of Dispersion:

a. Range:

- The difference between the maximum and minimum values in a dataset.

data = [1, 2, 3, 4, 5]

data_range = max(data) - min(data)

b. Variance:

- A measure of the spread of data points around the mean.

import numpy as np

data = [1, 2, 3, 4, 5]

variance_value = np.var(data)

c. Standard Deviation:

- The square root of the variance, providing a more interpretable measure of data spread.

import numpy as np

data = [1, 2, 3, 4, 5]

std_deviation_value = np.std(data)
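NumPy's `np.var` and `np.std` divide by n by default (`ddof=0`), which gives the population variance. When the data are a sample from a larger population, dividing by n - 1 (`ddof=1`) is the usual unbiased variance estimate. pandas' `Series.var()` and `Series.std()` default to `ddof=1`, so the two libraries can report different values for the same data. A minimal sketch:

```python
import numpy as np

data = [1, 2, 3, 4, 5]
# Mean is 3; squared deviations are 4, 1, 0, 1, 4 (sum = 10)

pop_var = np.var(data)               # 10 / 5 = 2.0  (ddof=0, population)
sample_var = np.var(data, ddof=1)    # 10 / 4 = 2.5  (ddof=1, sample)
sample_std = np.std(data, ddof=1)    # sqrt(2.5), about 1.581

print(pop_var, sample_var, sample_std)
```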

3. Measures of Shape:

a. Skewness:

- A measure of the asymmetry of the probability distribution of a real-valued random variable.

from scipy.stats import skew

data = [1, 2, 3, 4, 5]

skewness_value = skew(data)

b. Kurtosis:

- A measure of the “tailedness” of the probability distribution of a real-valued random variable.

from scipy.stats import kurtosis

data = [1, 2, 3, 4, 5]

kurtosis_value = kurtosis(data)
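Because `[1, 2, 3, 4, 5]` is perfectly symmetric, its skewness is 0, which hides what the measure does. A minimal sketch comparing it with a made-up, right-skewed dataset. Note that SciPy's `kurtosis` returns excess kurtosis by default (`fisher=True`), so a normal distribution scores 0 and flat, evenly spread data scores below 0:

```python
from scipy.stats import kurtosis, skew

symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 1, 2, 10]    # made-up data with a long right tail

sym_skew = skew(symmetric)         # 0.0: the data are perfectly symmetric
right_skew = skew(right_skewed)    # positive: the tail extends to the right

sym_kurt = kurtosis(symmetric)     # -1.3: excess kurtosis of this flat data
print(sym_skew, right_skew, sym_kurt)
```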

4. Percentiles:

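- Values below which a given percentage of the observations fall. For example, about 25% of the data lie below the 25th percentile, and the median is the 50th percentile.

import numpy as np

data = [1, 2, 3, 4, 5]

p_25 = np.percentile(data, 25) # 25th percentile (2.0 for this data)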
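NumPy computes percentiles by linear interpolation between data points by default (other definitions are available through the `method` argument in NumPy 1.22 and later). A minimal sketch on a made-up dataset with an even number of values, confirming that the 50th percentile equals the median:

```python
import numpy as np

data = [1, 2, 3, 4, 5, 6, 7, 8]

# Quartiles: the 25th, 50th, and 75th percentiles
q1, median, q3 = np.percentile(data, [25, 50, 75])
print(q1, median, q3)              # 2.75 4.5 6.25

print(median == np.median(data))   # True
```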

Descriptive statistics are essential for gaining a preliminary understanding of a dataset before diving into more complex analyses. They help identify patterns, outliers, and general trends in the data, laying the groundwork for further exploration and modeling in data science.

B. Inferential Statistics

Inferential statistics involves making inferences and predictions about a population based on a sample of data from that population. These statistical techniques allow data scientists to draw conclusions, make predictions, and test hypotheses about broader populations. Here are key concepts in inferential statistics:

1. Sampling:

a. Random Sampling:

- Ensuring that every member of a population has an equal chance of being included in the sample.

b. Stratified Sampling:

- Dividing the population into subgroups (strata) and then randomly sampling from each subgroup.

c. Cluster Sampling:

- Dividing the population into clusters and randomly selecting entire clusters for the sample.
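The three sampling schemes can be sketched with pandas on a small, hypothetical population. The column names, sizes, and `random_state` values are assumptions for illustration; `DataFrameGroupBy.sample` requires pandas 1.1 or later.

```python
import pandas as pd

# Hypothetical population: 12 people in 3 regions (strata) and 4 households (clusters)
population = pd.DataFrame({
    "person_id": range(12),
    "region": ["north"] * 4 + ["south"] * 4 + ["east"] * 4,
    "household": [h for h in range(4) for _ in range(3)],
})

# Simple random sampling: every person has the same chance of selection
simple = population.sample(n=4, random_state=0)

# Stratified sampling: draw 50% of the people within each region
stratified = population.groupby("region").sample(frac=0.5, random_state=0)

# Cluster sampling: randomly pick 2 whole households and keep all of their members
households = pd.Series(population["household"].unique())
chosen = households.sample(n=2, random_state=0)
cluster = population[population["household"].isin(chosen)]
```

Stratification guarantees every region is represented, while cluster sampling trades some precision for the convenience of surveying whole groups at once.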

2. Estimation:

a. Point Estimation:

- Estimating a population parameter using a single value, typically the sample mean or proportion.

b. Interval Estimation (Confidence Intervals):

- Providing a range of values within which the true population parameter is likely to fall, along with a confidence level.

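import numpy as np

import scipy.stats as stats

data = [1, 2, 3, 4, 5]

# For a small sample, base the interval on Student's t distribution with n - 1 degrees of freedom

confidence_interval = stats.t.interval(0.95, len(data) - 1, loc=np.mean(data), scale=stats.sem(data))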

3. Hypothesis Testing:

a. Null Hypothesis (H0):

- A statement that there is no effect or difference (for example, that two population means are equal).

b. Alternative Hypothesis (H1):

- A statement that contradicts the null hypothesis, asserting that an effect or difference exists.

c. Significance Level (α):

- The probability of rejecting the null hypothesis when it is true. Common values include 0.05 and 0.01.

d. p-value:

- The probability of obtaining results at least as extreme as the observed results, assuming the null hypothesis is true. A smaller p-value suggests stronger evidence against the null hypothesis.

from scipy.stats import ttest_ind
group1 = [1, 2, 3, 4, 5]
group2 = [6, 7, 8, 9, 10]
t_stat, p_value = ttest_ind(group1, group2)  # assumes equal variances; pass equal_var=False for Welch's t-test

e. Type I and Type II Errors:

- Type I Error (False Positive): Incorrectly rejecting a true null hypothesis.

- Type II Error (False Negative): Failing to reject a false null hypothesis.
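A simulation makes the link between α and the Type I error rate concrete. If the null hypothesis is true and the test's assumptions hold, a test at α = 0.05 should reject about 5% of the time. This sketch assumes two normal groups with identical means, 30 observations per group, and 2,000 repeated experiments. All of these are arbitrary choices.

```python
import numpy as np
from scipy.stats import ttest_ind

rng = np.random.default_rng(seed=42)
alpha = 0.05
n_experiments = 2000
false_positives = 0

for _ in range(n_experiments):
    # Both groups come from the same distribution, so H0 (equal means) is true
    group_a = rng.normal(loc=0.0, scale=1.0, size=30)
    group_b = rng.normal(loc=0.0, scale=1.0, size=30)
    _, p = ttest_ind(group_a, group_b)
    if p < alpha:
        false_positives += 1  # rejecting a true H0 is a Type I error

type_i_rate = false_positives / n_experiments
print(f"Observed Type I error rate: {type_i_rate:.3f}")  # should be close to 0.05
```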

4. Regression Analysis:

a. Simple Linear Regression:

- Modeling the relationship between a dependent variable and a single independent variable.

from scipy.stats import linregress
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept, r_value, p_value, std_err = linregress(x, y)

b. Multiple Linear Regression:

- Extending simple linear regression to model the relationship between a dependent variable and multiple independent variables.

import statsmodels.api as sm
# Prepend a column of ones for the intercept; with several predictors, x would be a 2-D array (one column per variable)
X = sm.add_constant(x)
model = sm.OLS(y, X).fit()
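In the simple case, the slope and intercept have closed-form least-squares solutions: slope = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and intercept = ȳ − slope · x̄. The sketch below computes them directly with NumPy on the example `x` and `y` values used for `linregress`, then checks the result against that function.

```python
import numpy as np
from scipy.stats import linregress

x = np.array([1, 2, 3, 4, 5], dtype=float)
y = np.array([2, 4, 5, 4, 5], dtype=float)

# Ordinary least-squares estimates for y = intercept + slope * x
x_mean, y_mean = x.mean(), y.mean()
slope = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
intercept = y_mean - slope * x_mean

result = linregress(x, y)
print(slope, intercept)               # slope ≈ 0.6, intercept ≈ 2.2
print(result.slope, result.intercept) # should match the manual values
```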

5. Analysis of Variance (ANOVA):

- A statistical method for comparing means across multiple groups to determine if there are any statistically significant differences.

from scipy.stats import f_oneway
group1 = [1, 2, 3, 4, 5]
group2 = [6, 7, 8, 9, 10]
group3 = [11, 12, 13, 14, 15]
f_stat, p_value = f_oneway(group1, group2, group3)
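The F-statistic that `f_oneway` reports is the ratio of the between-group mean square to the within-group mean square. The sketch below rebuilds it step by step for the three example groups. The p-value is the upper tail of an F distribution with (k − 1, N − k) degrees of freedom, where k is the number of groups and N the total number of observations.

```python
import numpy as np
from scipy.stats import f as f_dist, f_oneway

groups = [np.array([1, 2, 3, 4, 5], dtype=float),
          np.array([6, 7, 8, 9, 10], dtype=float),
          np.array([11, 12, 13, 14, 15], dtype=float)]

all_values = np.concatenate(groups)
grand_mean = all_values.mean()
k = len(groups)            # number of groups
n_total = all_values.size  # total number of observations

# Between-group sum of squares: distance of each group mean from the grand mean
ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
# Within-group sum of squares: spread of observations around their own group mean
ss_within = sum(np.sum((g - g.mean()) ** 2) for g in groups)

ms_between = ss_between / (k - 1)
ms_within = ss_within / (n_total - k)
f_stat = ms_between / ms_within                 # F = 50.0 for these groups
p_value = f_dist.sf(f_stat, k - 1, n_total - k) # upper-tail probability

print(f_stat, p_value)
print(f_oneway(*groups))  # should report the same statistic and p-value
```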

Inferential statistics plays a crucial role in data science by allowing data scientists to make predictions, generalize findings, and draw meaningful conclusions from samples to broader populations. These techniques help in validating hypotheses, identifying patterns, and making informed decisions based on data.

C. Probability Distributions

Probability distributions describe the likelihood of different outcomes in a random experiment or process. In data science, understanding probability distributions is essential for making predictions, estimating uncertainties, and performing statistical analyses. Here are some common probability distributions used in data science:

1. Discrete Probability Distributions:

a. Bernoulli Distribution:

- A distribution for a binary outcome (success/failure) with a probability of success (p).

from scipy.stats import bernoulli
p = 0.3  # probability of success
rv = bernoulli(p)

b. Binomial Distribution:

- Describes the number of successes in a fixed number of independent Bernoulli trials.

from scipy.stats import binom
n = 5  # number of trials
p = 0.3  # probability of success
rv = binom(n, p)

c. Poisson Distribution:

- Models the number of events occurring in a fixed interval of time or space, when events occur independently at a constant average rate.

from scipy.stats import poisson
lambda_ = 2  # average rate of events per interval
rv = poisson(lambda_)
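Each frozen distribution object (`rv`) exposes the same methods: `pmf` for point probabilities, `cdf` for cumulative probabilities, `mean`/`var` for moments, and `rvs` for random draws. The short sketch below uses the binomial and Poisson parameters from above.

```python
from scipy.stats import binom, poisson

rv_binom = binom(5, 0.3)  # 5 trials, success probability 0.3
rv_pois = poisson(2)      # average of 2 events per interval

# Probability of exactly 2 successes in 5 trials: C(5, 2) * 0.3^2 * 0.7^3
print(rv_binom.pmf(2))                  # ≈ 0.3087
# Probability of at most 1 event when the mean rate is 2: e^-2 * (1 + 2)
print(rv_pois.cdf(1))                   # ≈ 0.406
# Theoretical mean and variance of the binomial: n*p and n*p*(1-p)
print(rv_binom.mean(), rv_binom.var())  # ≈ 1.5 and 1.05
# Ten simulated Poisson counts (seeded for reproducibility)
print(rv_pois.rvs(size=10, random_state=0))
```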

2. Continuous Probability Distributions:

a. Normal (Gaussian) Distribution:

- Describes a continuous symmetric distribution with a bell-shaped curve.

from scipy.stats import norm
mean = 0
std_dev = 1
rv = norm(loc=mean, scale=std_dev)

b. Uniform Distribution:

- Assumes all values in a given range are equally likely.

from scipy.stats import uniform
a = 0  # lower limit
b = 1  # upper limit
rv = uniform(loc=a, scale=b - a)  # SciPy's uniform covers [loc, loc + scale]

c. Exponential Distribution:

- Models the time between events in a Poisson process.

from scipy.stats import expon
lambda_ = 0.5  # rate parameter
rv = expon(scale=1 / lambda_)  # SciPy parameterizes the exponential by scale = 1 / rate

d. Log-Normal Distribution:

- Describes a distribution of a random variable whose logarithm is normally distributed.

from scipy.stats import lognorm
sigma = 0.1  # standard deviation of the natural logarithm
rv = lognorm(sigma)  # with the default scale=1 the logarithm has mean 0; use scale=exp(mu) for mean mu

e. Gamma Distribution:

- Generalizes the exponential distribution and models the waiting time until a Poisson process reaches a certain number of events.

from scipy.stats import gamma
k = 2  # shape parameter
theta = 1  # scale parameter
rv = gamma(k, scale=theta)
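Continuous distributions expose `pdf`, `cdf`, `ppf` (the inverse of `cdf`), and `rvs`. The sketch below uses them to check a few familiar facts. It also confirms SciPy's log-normal parameterization: shape `s` is the standard deviation of the logarithm, and `scale` is `exp(mu)`. The values of `mu` and `s` are illustrative.

```python
import numpy as np
from scipy.stats import norm, lognorm, expon

rv_norm = norm(loc=0, scale=1)
# About 95% of a normal distribution lies within ±1.96 standard deviations
print(rv_norm.cdf(1.96) - rv_norm.cdf(-1.96))  # ≈ 0.95
# ppf inverts cdf: the value below which 97.5% of the probability mass lies
print(rv_norm.ppf(0.975))                      # ≈ 1.96

# An exponential distribution with rate 0.5 has mean 1 / rate = 2
print(expon(scale=1 / 0.5).mean())             # ≈ 2.0

# lognorm(s, scale=exp(mu)): the log of the variable is Normal(mu, s)
mu, s = 1.0, 0.1
rv_lognorm = lognorm(s, scale=np.exp(mu))
samples = rv_lognorm.rvs(size=10_000, random_state=0)
print(np.log(samples).mean(), np.log(samples).std())  # ≈ 1.0 and ≈ 0.1
```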

Understanding these probability distributions is crucial for modeling and analyzing different types of data. Whether dealing with discrete or continuous data, probability distributions help data scientists make informed decisions and predictions based on the inherent uncertainty in real-world processes.

D. Hypothesis Testing

Hypothesis testing is a statistical method used to make inferences about population parameters based on sample data. It involves formulating hypotheses, collecting data, and using a statistical test to decide whether to reject the null hypothesis or fail to reject it. Here are the key steps and concepts in hypothesis testing:

1. Key Concepts:

a. Null Hypothesis (H0):

- A statement that there is no effect or difference in the population.

b. Alternative Hypothesis (H1):

- A statement that contradicts the null hypothesis, asserting that an effect or difference exists in the population.

c. Significance Level (α):

- The probability of rejecting the null hypothesis when it is true. Common values include 0.05 and 0.01.

d. P-value:

- The probability of obtaining results at least as extreme as the observed results, assuming the null hypothesis is true. A smaller p-value suggests stronger evidence against the null hypothesis.

2. Steps in Hypothesis Testing:

a. Formulate Hypotheses:

- State the null hypothesis (H0) and alternative hypothesis (H1) based on the research question.

b. Choose Significance Level (α):

- Set the significance level before analyzing the data. It defines the critical region, the set of outcomes extreme enough to reject H0. Common choices are 0.05 and 0.01.

c. Collect and Analyze Data:

- Collect sample data and perform statistical tests to calculate the test statistic and p-value.

d. Make a Decision:

- If the p-value is less than the chosen significance level, reject the null hypothesis in favor of the alternative hypothesis. Otherwise, fail to reject the null hypothesis.

e. Draw Conclusions:

- Interpret the results and draw conclusions about the population based on the sample data.
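The five steps can be walked through in code. The sketch below uses made-up measurements and a one-sample t-test to ask whether the population mean differs from a hypothesized value of 5.0.

```python
from scipy.stats import ttest_1samp

# a. Formulate hypotheses: H0: mu = 5.0, H1: mu != 5.0 (two-sided)
hypothesized_mean = 5.0

# b. Choose the significance level before looking at the data
alpha = 0.05

# c. Collect and analyze data (illustrative measurements)
sample = [5.8, 6.1, 5.4, 6.3, 5.9, 6.0, 5.7, 6.2]
t_stat, p_value = ttest_1samp(sample, popmean=hypothesized_mean)

# d. Make a decision
reject_h0 = p_value < alpha

# e. Draw a conclusion in plain language
if reject_h0:
    print(f"t = {t_stat:.2f}, p = {p_value:.2g}: reject H0; the mean appears to differ from {hypothesized_mean}")
else:
    print(f"t = {t_stat:.2f}, p = {p_value:.2g}: fail to reject H0; not enough evidence of a difference")
```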

3. Common Statistical Tests:

a. Z-Test:

- Used for testing hypotheses about the mean of a population when the population standard deviation is known.

b. T-Test:

- Used for testing hypotheses about the mean of a population when the population standard deviation is unknown.

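from scipy.stats import ttest_1samp, ttest_ind
# One-sample t-test (illustrative data: does the mean differ from 3.0?)
sample_data = [2.9, 3.4, 3.1, 3.8, 3.3]
population_mean = 3.0
t_stat, p_value = ttest_1samp(sample_data, population_mean)
# Independent two-sample t-test
group1 = [1, 2, 3, 4, 5]
group2 = [6, 7, 8, 9, 10]
t_stat, p_value = ttest_ind(group1, group2)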

c. Chi-Square Test:

- Used for testing hypotheses about the independence of categorical variables.

from scipy.stats import chi2_contingency
# Chi-square test for independence on a 2x2 contingency table (illustrative counts)
observed_data = [[30, 10],
                 [20, 40]]
chi2_stat, p_value, dof, expected = chi2_contingency(observed_data)

d. ANOVA (Analysis of Variance):

- Used for testing hypotheses about the means of multiple groups.

from scipy.stats import f_oneway
# One-way ANOVA (group1, group2, group3 as defined earlier)
f_stat, p_value = f_oneway(group1, group2, group3)
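The z-test described above is short to write by hand with `scipy.stats.norm`. This sketch assumes the population standard deviation `sigma` is known, which is the condition for using a z-test. The helper name `one_sample_z_test` and the example numbers are illustrative.

```python
import math
from scipy.stats import norm

def one_sample_z_test(sample, population_mean, sigma):
    """Two-sided one-sample z-test, assuming the population std dev sigma is known."""
    n = len(sample)
    sample_mean = sum(sample) / n
    standard_error = sigma / math.sqrt(n)
    z = (sample_mean - population_mean) / standard_error
    p_value = 2 * norm.sf(abs(z))  # probability of |Z| at least this large under H0
    return z, p_value

# Illustrative data: 9 measurements, H0 mean of 100, known sigma of 15
sample = [112, 108, 95, 121, 103, 117, 99, 110, 106]
z, p = one_sample_z_test(sample, population_mean=100, sigma=15)
print(f"z = {z:.3f}, p = {p:.4f}")
```

Here p is above 0.05, so at α = 0.05 the test would fail to reject H0.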

4. Type I and Type II Errors:

a. Type I Error (False Positive):

- Incorrectly rejecting a true null hypothesis.

b. Type II Error (False Negative):

- Failing to reject a false null hypothesis.

Hypothesis testing is a powerful tool in data science for drawing conclusions from sample data and making informed decisions. It helps in validating hypotheses, comparing groups, and assessing the significance of observed effects in the data.

IV. Introduction to Machine Learning

A. Overview of Machine Learning

Machine Learning (ML) is a field of artificial intelligence (AI) that focuses on the development of algorithms and statistical models that enable computers to perform tasks without explicit programming. The goal of machine learning is to allow computers to learn from data, recognize patterns, and make decisions or predictions. Here is an overview of key concepts and components in machine learning:

1. Types of Machine Learning:

a. Supervised Learning:

- Involves training a model on a labeled dataset, where each input is paired with its corresponding output. The model learns to map inputs to outputs and can make predictions on new, unseen data.

Example algorithms: Linear Regression, Decision Trees, Support Vector Machines, Neural Networks.

b. Unsupervised Learning:

- Involves training a model on an unlabeled dataset, where the algorithm learns patterns and structures in the data without explicit output labels. Common tasks include clustering and dimensionality reduction.

Example algorithms: K-Means Clustering, Hierarchical Clustering, Principal Component Analysis (PCA).

c. Reinforcement Learning:

- Focuses on training agents to make decisions in an environment to maximize a reward signal. The agent learns through trial and error, receiving feedback in the form of rewards or penalties.

Example algorithms: Q-Learning, Deep Reinforcement Learning (e.g., Deep Q Networks – DQN).

d. Semi-Supervised and Self-Supervised Learning:

- Combines aspects of supervised and unsupervised learning. In semi-supervised learning, a model is trained on a dataset that contains both labeled and unlabeled examples. Self-supervised learning involves generating labels from the data itself.

Example algorithms: Label Propagation, Contrastive Learning.

2. Key Components:

a. Features and Labels:

- Features are the input variables or attributes used to make predictions, while labels are the known target outputs that the model learns to predict.

b. Training Data and Testing Data:

- The dataset is typically split into training data used to train the model and testing data used to evaluate the model’s performance on unseen examples.

c. Model:

- The mathematical representation or algorithm that is trained on the training data to make predictions on new data.

d. Loss Function:

- A function that measures the difference between the predicted output and the true output (ground truth). The goal is to minimize this difference during training.

e. Optimization Algorithm:

- An algorithm that adjusts the model’s parameters during training to minimize the loss function. Common optimization algorithms include Gradient Descent and its variants.
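Here is a minimal sketch of how a loss function and an optimizer fit together. It uses batch gradient descent to fit y ≈ w·x + b by minimizing the mean squared error. The synthetic data, learning rate, and iteration count are illustrative assumptions, chosen so that this toy problem converges.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
X = rng.uniform(0, 10, size=100)
y = 3.0 * X + 4.0 + rng.normal(0, 1.0, size=100)  # synthetic data: true slope 3, intercept 4

w, b = 0.0, 0.0        # model parameters, initialized at zero
learning_rate = 0.01

for step in range(5000):
    y_pred = w * X + b
    error = y_pred - y
    loss = np.mean(error ** 2)        # mean squared error loss
    grad_w = 2 * np.mean(error * X)   # partial derivative of the loss w.r.t. w
    grad_b = 2 * np.mean(error)       # partial derivative of the loss w.r.t. b
    w -= learning_rate * grad_w       # step against the gradient
    b -= learning_rate * grad_b

print(f"w = {w:.2f}, b = {b:.2f}, MSE at last step = {loss:.3f}")
```

If the learning rate is too large, the updates overshoot and diverge. If it is too small, convergence takes far more iterations.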

3. Evaluation Metrics:

a. Accuracy:

- The ratio of correctly predicted instances to the total number of instances.

b. Precision, Recall, and F1-Score:

- Metrics that evaluate the performance of a classification model by considering true positives, false positives, and false negatives.

c. Mean Squared Error (MSE):

- A metric commonly used for regression problems, measuring the average squared difference between predicted and true values.
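All of these metrics are simple ratios. The sketch below computes them from first principles on hypothetical labels and predictions: the classification metrics come from the counts of true/false positives and negatives, and the MSE comes from a small regression example.

```python
# Hypothetical binary labels (1 = positive class) and model predictions
y_true = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))  # true positives
tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))  # true negatives
fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))  # false positives
fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))  # false negatives

accuracy = (tp + tn) / len(y_true)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of precision and recall

# Mean squared error for a small regression example
y_actual = [3.0, -0.5, 2.0, 7.0]
y_estimate = [2.5, 0.0, 2.0, 8.0]
mse = sum((a - e) ** 2 for a, e in zip(y_actual, y_estimate)) / len(y_actual)

print(tp, tn, fp, fn)                  # 4 4 1 1
print(accuracy, precision, recall, f1) # all ≈ 0.8
print(mse)                             # 0.375
```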

4. Challenges and Considerations:

a. Overfitting and Underfitting:

- Overfitting occurs when a model fits the training data, including its noise, so closely that it fails to generalize to new data. Underfitting occurs when a model is too simple to capture the underlying patterns.

b. Bias and Variance Tradeoff:

- Balancing the model’s bias (error from simplifying assumptions) and variance (sensitivity to fluctuations in the training data) to minimize overall error on new data.

c. Data Preprocessing:

- Cleaning and transforming raw data into a format suitable for training machine learning models. This includes handling missing values, scaling features, and encoding categorical variables.

d. Hyperparameter Tuning:

- Adjusting the hyperparameters of a machine learning model to optimize its performance. Hyperparameters are parameters that are set before training and are not learned from the data.
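Underfitting and overfitting can be seen by fitting polynomials of increasing degree to a small noisy sample. In this sketch the data are synthetic (a noisy sine wave), an assumption made for illustration. A straight line (degree 1) is too simple for the curve. A degree-10 polynomial fitted through only 15 points can chase the noise. Comparing training and test MSE exposes the difference, though the exact numbers depend on the random seed.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

def true_signal(x):
    return np.sin(2 * np.pi * x)

x_train = rng.uniform(0, 1, size=15)
x_test = rng.uniform(0, 1, size=100)
y_train = true_signal(x_train) + rng.normal(0, 0.2, size=x_train.size)
y_test = true_signal(x_test) + rng.normal(0, 0.2, size=x_test.size)

results = {}
for degree in (1, 3, 10):
    coeffs = np.polyfit(x_train, y_train, deg=degree)  # least-squares polynomial fit
    train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
    test_mse = np.mean((np.polyval(coeffs, x_test) - y_test) ** 2)
    results[degree] = (train_mse, test_mse)
    print(f"degree {degree:2d}: train MSE = {train_mse:.3f}, test MSE = {test_mse:.3f}")
```

Training error can only fall as the degree grows. Test error typically falls at first and then rises once the model starts fitting noise.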

Machine learning applications span a wide range of fields, including image and speech recognition, natural language processing, recommendation systems, autonomous vehicles, and more. The effectiveness of machine learning models depends on the quality of the data, the chosen algorithms, and the careful tuning of parameters. As technology advances, machine learning continues to play a pivotal role in solving complex problems and making intelligent predictions.

B. Types of Machine Learning (Supervised, Unsupervised, Reinforcement Learning)

Machine learning encompasses various approaches to learning patterns from data. The three main types of machine learning are supervised learning, unsupervised learning, and reinforcement learning.

1. Supervised Learning:

In supervised learning, the algorithm is trained on a labeled dataset, meaning that the input data includes both the features (input variables) and the corresponding labels (desired outputs). The goal is for the model to learn a mapping from inputs to outputs so that it can make predictions on new, unseen data.

Key Characteristics:

- The training dataset includes labeled examples.

- The algorithm aims to learn the relationship between inputs and outputs.

- Common tasks include classification and regression.

Examples:

- Classification: Predicting whether an email is spam or not.

- Regression: Predicting the price of a house based on its features.

Algorithms:

- Linear Regression, Decision Trees, Support Vector Machines, Neural Networks.

2. Unsupervised Learning:

In unsupervised learning, the algorithm is trained on an unlabeled dataset, and the goal is to find patterns, structures, or relationships in the data without explicit output labels. Unsupervised learning is often used for clustering, dimensionality reduction, and generative modeling.

Key Characteristics:

- The training dataset does not include labeled outputs.

- The algorithm discovers inherent patterns or structures in the data.

- Common tasks include clustering and dimensionality reduction.

Examples:

- Clustering: Grouping customers based on their purchasing behavior.

- Dimensionality Reduction: Reducing the number of features while preserving important information.

Algorithms:

- K-Means Clustering, Hierarchical Clustering, Principal Component Analysis (PCA), Generative Adversarial Networks (GANs).

3. Reinforcement Learning:

Reinforcement learning involves training agents to make sequential decisions in an environment to maximize a cumulative reward signal. The agent learns through trial and error, receiving feedback in the form of rewards or penalties based on its actions. Reinforcement learning is commonly used in settings where an agent interacts with an environment over time.

Key Characteristics:

- The agent learns by interacting with an environment.

- The agent receives feedback in the form of rewards or penalties.

- The goal is to learn a policy that maximizes cumulative rewards.

Examples:

- Training a computer program to play chess or Go.

- Teaching a robot to navigate a physical environment.

Algorithms:

- Q-Learning, Deep Q Networks (DQN), Policy Gradient Methods.
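Tabular Q-learning can be sketched on a toy problem. The environment below is a hypothetical six-cell corridor: the agent starts in cell 0, can step left or right, and receives a reward of 1 on reaching cell 5, which ends the episode. The learning rate, discount factor, exploration rate, and episode count are illustrative values.

```python
import numpy as np

n_states, n_actions = 6, 2            # corridor cells 0..5; actions: 0 = left, 1 = right
goal = n_states - 1                   # reaching cell 5 ends the episode
alpha, gamma, epsilon = 0.1, 0.9, 0.2 # learning rate, discount factor, exploration rate
rng = np.random.default_rng(seed=0)
Q = np.zeros((n_states, n_actions))   # Q-table: estimated return for each (state, action)

def step(state, action):
    """Toy environment: move one cell; reward 1 and episode end on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(goal, state + 1)
    reward = 1.0 if next_state == goal else 0.0
    return next_state, reward, next_state == goal

for episode in range(1000):
    state, done = 0, False
    while not done:
        # Epsilon-greedy: explore with probability epsilon, otherwise exploit (ties broken randomly)
        if rng.random() < epsilon:
            action = int(rng.integers(n_actions))
        else:
            best_actions = np.flatnonzero(Q[state] == Q[state].max())
            action = int(rng.choice(best_actions))
        next_state, reward, done = step(state, action)
        # Q-learning update: move Q toward reward + discounted best value of the next state
        target = reward if done else reward + gamma * Q[next_state].max()
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state

greedy_policy = Q[:goal].argmax(axis=1)
print("Greedy action per non-goal cell (1 = right):", greedy_policy)
```

After training, the greedy policy should move right in every cell. The discount factor makes the value of moving right shrink by a factor of 0.9 for each extra step away from the goal.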

Understanding the differences between these types of machine learning is crucial for selecting the appropriate approach for a given task. Depending on the nature of the problem and the available data, one may choose between supervised learning for labeled datasets, unsupervised learning for exploration, or reinforcement learning for sequential decision-making problems. Each type has its strengths and applications in different domains.

C. Feature Engineering

Feature engineering is the process of selecting, transforming, or creating features (input variables) to improve the performance of machine learning models. The quality of features significantly impacts a model’s ability to learn patterns from data. Effective feature engineering involves domain knowledge, creativity, and an understanding of the underlying problem. Here are key concepts and techniques in feature engineering:

1. Feature Selection:

a. Filter Methods:

- Select features based on statistical measures, such as correlation or mutual information.

from sklearn.feature_selection import SelectKBest, f_classif
# Select the top k features by ANOVA F-statistic (X: feature matrix, y: class labels)
k = 5  # number of features to keep (illustrative value)
selector = SelectKBest(score_func=f_classif, k=k)
selected_features = selector.fit_transform(X, y)

b. Wrapper Methods:

- Use a machine learning model’s performance as a criterion for feature selection.

from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE
# Recursive Feature Elimination (RFE) with a random forest
k = 5  # number of features to keep (illustrative value)
model = RandomForestClassifier()
selector = RFE(model, n_features_to_select=k)
selected_features = selector.fit_transform(X, y)

c. Embedded Methods:

- Feature selection is integrated into the model training process.

from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
# Select features based on feature importance from a random forest
model = RandomForestClassifier()
selector = SelectFromModel(model)
selected_features = selector.fit_transform(X, y)
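The snippets above assume that a feature matrix `X` and labels `y` are already loaded. For a self-contained sketch, the example below applies the filter method to scikit-learn's built-in iris dataset, keeping the two highest-scoring features. The dataset and the choice of two features are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, f_classif

iris = load_iris()
X, y = iris.data, iris.target
k = 2  # number of features to keep

selector = SelectKBest(score_func=f_classif, k=k)
X_selected = selector.fit_transform(X, y)

# get_support() returns a boolean mask over the original columns
kept = [name for name, keep in zip(iris.feature_names, selector.get_support()) if keep]
print(X_selected.shape)  # (150, 2)
print(kept)              # names of the two columns with the highest F-scores
```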

2. Handling Missing Data:

a. Imputation:

- Fill missing values with appropriate replacements (mean, median, mode) based on the nature of the data.

from sklearn.impute import SimpleImputer
# Impute missing values using the column mean
imputer = SimpleImputer(strategy='mean')
X_imputed = imputer.fit_transform(X)

b. Creating Missingness Indicators:

- Introduce binary indicators to mark missing values, allowing the model to learn the impact of missingness.

import pandas as pd
# Create indicator columns (1 = missing) with a suffix so their names do not clash with the originals
X_with_indicators = pd.concat([X, X.isnull().astype(int).add_suffix('_missing')], axis=1)

3. Handling Categorical Variables:

a. One-Hot Encoding:

- Convert categorical variables into binary vectors.

from sklearn.preprocessing import OneHotEncoder
# One-hot encode a categorical column (the result is a SciPy sparse matrix by default)
encoder = OneHotEncoder()
X_encoded = encoder.fit_transform(X[['categorical_feature']])

b. Label Encoding:

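- Assign integer labels to categories. The integers imply an order, so this suits ordinal categories (or tree-based models) better than nominal categories used in linear models.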

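from sklearn.preprocessing import LabelEncoder
# Label encode a categorical variable. scikit-learn intends LabelEncoder for target labels (y);
# for input features, OrdinalEncoder is the usual choice.
encoder = LabelEncoder()
X['categorical_feature_encoded'] = encoder.fit_transform(X['categorical_feature'])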

4. Feature Scaling:

a. Standardization:

- Scale features to have zero mean and unit variance.

from sklearn.preprocessing import StandardScaler
# Standardize features
scaler = StandardScaler()
X_standardized = scaler.fit_transform(X)

b. Normalization (Min-Max Scaling):

- Scale features to a specific range (e.g., [0, 1]).

from sklearn.preprocessing import MinMaxScaler
# Normalize features to [0, 1]
scaler = MinMaxScaler()
X_normalized = scaler.fit_transform(X)
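Both scalers apply simple column-wise formulas: z = (x − mean) / std for standardization, and (x − min) / (max − min) for min-max normalization. The sketch below computes them by hand on a small illustrative matrix and compares the results with scikit-learn. Note that `StandardScaler` uses the population standard deviation, which is NumPy's default (`ddof=0`). In a real workflow, fit the scaler on the training split only and reuse it on validation and test data, so that statistics from those sets do not leak into training.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Illustrative data: two features on very different scales
X = np.array([[1.0, 200.0],
              [2.0, 300.0],
              [3.0, 400.0],
              [4.0, 800.0]])

# Standardization: subtract each column's mean, divide by its (population) std
X_standardized_manual = (X - X.mean(axis=0)) / X.std(axis=0)
# Min-max normalization: map each column's min to 0 and max to 1
X_normalized_manual = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

print(np.allclose(X_standardized_manual, StandardScaler().fit_transform(X)))  # True
print(np.allclose(X_normalized_manual, MinMaxScaler().fit_transform(X)))      # True
```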

5. Creating Interaction Terms:

- Combine two or more features to create new features that capture interactions or relationships.

# Create an interaction term

X['interaction_term'] = X['feature1'] * X['feature2']

6. Handling Date and Time Features:

- Extract relevant information from date and time features (e.g., day of the week, month, hour).

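# Extract month and day-of-week features from a datetime column
# (the column must have a datetime dtype; convert it first with pd.to_datetime if needed)
X['month'] = X['datetime_column'].dt.month
X['day_of_week'] = X['datetime_column'].dt.dayofweek  # Monday = 0, Sunday = 6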

Feature engineering is an iterative process that involves experimenting with different transformations, selecting the most informative features, and refining the model’s input to enhance predictive performance. It plays a crucial role in improving model accuracy, interpretability, and generalization to new data.

D. Model Evaluation and Selection

Model evaluation and selection are critical steps in the machine learning workflow, ensuring that the trained models perform well on new, unseen data. Various metrics and techniques are employed to assess a model’s performance and choose the most suitable model for a given task. Here are key concepts and methods for model evaluation and selection:

1. Splitting the Dataset:

a. Training Set:

- The portion of the dataset used to train the model.

b. Validation Set:

- A separate portion of the dataset used to tune hyperparameters and evaluate model performance during training.

c. Test Set:

- A completely independent dataset not used during training or hyperparameter tuning, reserved for final model evaluation.

from sklearn.model_selection import train_test_split
# Split into training (80%), validation (10%), and test (10%) sets
X_train, X_temp, y_train, y_temp = train_test_split(X, y, test_size=0.2, random_state=42)
X_val, X_test, y_val, y_test = train_test_split(X_temp, y_temp, test_size=0.5, random_state=42)

2. Cross-Validation:

a. k-Fold Cross-Validation:

- The dataset is divided into k subsets (folds), and the model is trained and evaluated k times, each time using a different fold as the validation set.

from sklearn.model_selection import cross_val_score
# Perform 5-fold cross-validation (model: any scikit-learn estimator)
scores = cross_val_score(model, X_train, y_train, cv=5)
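`cross_val_score` hides the loop behind k-fold cross-validation. Writing it out shows what happens in each fold. The sketch below uses the iris dataset and a logistic regression model, both illustrative choices, and checks that the manual loop matches `cross_val_score` when both use the same `KFold` splitter.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)
kfold = KFold(n_splits=5, shuffle=True, random_state=0)

fold_scores = []
for train_idx, val_idx in kfold.split(X):
    model.fit(X[train_idx], y[train_idx])                    # train on the other k-1 folds
    fold_scores.append(model.score(X[val_idx], y[val_idx]))  # accuracy on the held-out fold

print(np.round(fold_scores, 3), np.mean(fold_scores))

# cross_val_score with the same splitter should reproduce the per-fold scores
auto_scores = cross_val_score(model, X, y, cv=kfold)
print(np.allclose(fold_scores, auto_scores))
```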

3. Common Evaluation Metrics:

a. Classification Metrics:

- Accuracy: The ratio of correctly predicted instances to the total instances.

- Precision: The ratio of true positives to the sum of true positives and false positives.

- Recall (Sensitivity): The ratio of true positives to the sum of true positives and false negatives.

- F1-Score: The harmonic mean of precision and recall.

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score
# Calculate classification metrics (binary labels by default)
accuracy = accuracy_score(y_true, y_pred)
precision = precision_score(y_true, y_pred)
recall = recall_score(y_true, y_pred)
f1 = f1_score(y_true, y_pred)

b. Regression Metrics:

- Mean Squared Error (MSE): The average squared difference between predicted and true values.

- Mean Absolute Error (MAE): The average absolute difference between predicted and true values.

- R-squared (R2): The proportion of the variance in the dependent variable that is predictable from the independent variables.

from sklearn.metrics import mean_squared_error, mean_absolute_error, r2_score
# Calculate regression metrics
mse = mean_squared_error(y_true, y_pred)
mae = mean_absolute_error(y_true, y_pred)
r2 = r2_score(y_true, y_pred)
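The metric snippets above assume `y_true` and `y_pred` already exist. As a self-contained sketch, the example below trains a logistic regression classifier (an illustrative choice) on scikit-learn's built-in breast cancer dataset and reports the metrics on a held-out test set. In this dataset label 1 means benign, so "positive" here refers to the benign class. Placing the scaler inside a pipeline ensures it is fitted on the training data only.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, confusion_matrix, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y)

# The scaler is fitted only on training data because it is part of the pipeline
clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
precision = precision_score(y_test, y_pred)
recall = recall_score(y_test, y_pred)
f1 = f1_score(y_test, y_pred)

print(confusion_matrix(y_test, y_pred))  # rows: true class, columns: predicted class
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```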

4. Receiver Operating Characteristic (ROC) Curve and Area Under the Curve (AUC):

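- Used for binary classification problems to visualize the trade-off between the true positive rate (sensitivity) and the false positive rate across classification thresholds. The AUC summarizes the curve in one number: 1.0 for a perfect ranking, 0.5 for random guessing.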

from sklearn.metrics import roc_curve, auc
# Calculate the ROC curve and AUC; y_scores are predicted probabilities (or decision scores) for the positive class
fpr, tpr, thresholds = roc_curve(y_true, y_scores)
roc_auc = auc(fpr, tpr)

5. Model Selection:

a. Grid Search:

- Systematically search through a predefined hyperparameter space to find the best combination of hyperparameters.

from sklearn.model_selection import GridSearchCV
# Define the hyperparameter grid (illustrative values, assuming model is a RandomForestClassifier)
param_grid = {'n_estimators': [100, 200], 'max_depth': [None, 10]}
# Perform grid search with 5-fold cross-validation; best_params_ holds the best combination found
grid_search = GridSearchCV(model, param_grid, cv=5)
grid_search.fit(X_train, y_train)

b. Randomized Search:

- Randomly samples hyperparameter combinations from predefined distributions.

from scipy.stats import randint
from sklearn.model_selection import RandomizedSearchCV
# Define hyperparameter distributions (illustrative, assuming model is a RandomForestClassifier)
param_distributions = {'n_estimators': randint(50, 300), 'max_depth': [None, 5, 10, 20]}
# Perform randomized search: sample 10 combinations, each scored with 5-fold cross-validation
randomized_search = RandomizedSearchCV(model, param_distributions, n_iter=10, cv=5, random_state=0)
randomized_search.fit(X_train, y_train)

6. Overfitting and Underfitting:

a. Learning Curves:

- Visualize the performance of a model on the training and validation sets as a function of training set size.

import matplotlib.pyplot as plt
from sklearn.model_selection import learning_curve
# Plot learning curves: mean score across the 5 cross-validation folds at each training set size
train_sizes, train_scores, val_scores = learning_curve(model, X, y, cv=5, scoring='accuracy')
plt.plot(train_sizes, train_scores.mean(axis=1), label='Training Score')
plt.plot(train_sizes, val_scores.mean(axis=1), label='Validation Score')
plt.xlabel('Training set size')
plt.ylabel('Accuracy')
plt.legend()
plt.show()

Model evaluation and selection involve a careful balance between training and validation performance, understanding the trade-offs in model complexity, and choosing appropriate evaluation metrics based on the nature of the task. These steps are crucial to building robust and generalizable machine learning models.

V. Data Science in Bioinformatics

A. Overview of Bioinformatics

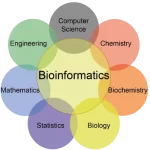

Bioinformatics is a multidisciplinary field that applies computational and statistical techniques to analyze biological data. It plays a crucial role in advancing our understanding of biological processes, genetics, and genomics. Here is an overview of key concepts and applications in bioinformatics:

1. Definition:

Bioinformatics involves the application of computational methods to process, analyze, and interpret biological information, particularly data related to DNA, RNA, proteins, and other biological molecules. It combines elements of biology, computer science, mathematics, and statistics to derive meaningful insights from large and complex biological datasets.

2. Key Areas of Bioinformatics:

a. Genomics:

- Analyzing and interpreting the structure, function, and evolution of genomes. This includes the sequencing and annotation of DNA.

b. Transcriptomics:

- Studying the expression patterns of genes by analyzing RNA transcripts. This provides insights into the dynamic regulation of gene activity.

c. Proteomics:

- Investigating the structure, function, and interactions of proteins. Proteomics involves the study of the entire set of proteins in an organism.

d. Metabolomics:

- Analyzing the complete set of small molecules (metabolites) within a biological sample. Metabolomics provides insights into cellular processes and metabolic pathways.

e. Structural Bioinformatics:

- Predicting and analyzing the three-dimensional structures of biological macromolecules, such as proteins and nucleic acids.

f. Systems Biology:

- Integrating and analyzing data from various biological levels to understand complex biological systems as a whole.

3. Data Sources in Bioinformatics:

a. DNA Sequencing Data:

- High-throughput technologies generate DNA sequences, allowing researchers to study genetic variations, mutations, and the structure of genomes.

b. RNA Sequencing Data:

- Provides information about the transcriptome, helping researchers understand gene expression levels and alternative splicing events.

c. Protein Structure Data:

- Information about the three-dimensional structure of proteins, essential for understanding their function and interactions.

d. Biological Databases:

- Repositories of biological data, including genetic sequences, protein structures, and functional annotations. Examples include GenBank, UniProt, and the Protein Data Bank (PDB).
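Sequence data from repositories like these is commonly exchanged in FASTA format: a `>` header line followed by one or more sequence lines. The sketch below is a minimal parser without validation, plus a GC-content calculation, a basic summary statistic for DNA. For real work, a maintained library such as Biopython (`Bio.SeqIO`) is the safer choice. The records below are hypothetical.

```python
from io import StringIO

def read_fasta(handle):
    """Parse FASTA text into a {header: sequence} dict (minimal sketch, no validation)."""
    records, header, chunks = {}, None, []
    for line in handle:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                records[header] = "".join(chunks)  # store the previous record
            header, chunks = line[1:], []
        else:
            chunks.append(line.upper())  # sequences may span several lines
    if header is not None:
        records[header] = "".join(chunks)
    return records

def gc_content(sequence):
    """Fraction of G and C bases in a DNA sequence."""
    if not sequence:
        return 0.0
    return (sequence.count("G") + sequence.count("C")) / len(sequence)

# Hypothetical two-record FASTA file held in memory
fasta_text = """>seq1 example
ATGCGCGATT
AGC
>seq2 example
ATATATATGC
"""
records = read_fasta(StringIO(fasta_text))
for name, seq in records.items():
    print(name, len(seq), round(gc_content(seq), 3))
```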

4. Tools and Techniques in Bioinformatics:

a. Sequence Alignment:

- Comparing and aligning biological sequences (DNA, RNA, proteins) to identify similarities and differences.

b. Homology Modeling:

- Predicting the three-dimensional structure of a protein based on its sequence and the known structures of related proteins.

c. Functional Annotation:

- Assigning biological functions to genes, proteins, or other biomolecules. This involves predicting the roles of genes or proteins based on their sequences or structures.

d. Pathway Analysis:

- Studying and analyzing biological pathways to understand the interactions and relationships among genes, proteins, and metabolites.

e. Machine Learning in Bioinformatics:

- Applying machine learning algorithms to analyze biological data, predict biological functions, and discover patterns in large datasets.
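Sequence alignment is a classic dynamic-programming problem. The sketch below computes a Needleman-Wunsch global alignment score with a linear gap penalty. The scoring values (match +1, mismatch −1, gap −2) are illustrative, not a standard substitution matrix, and the function returns only the score without tracing back the alignment itself.

```python
def global_alignment_score(seq_a, seq_b, match=1, mismatch=-1, gap=-2):
    """Needleman-Wunsch global alignment score with a linear gap penalty."""
    rows, cols = len(seq_a) + 1, len(seq_b) + 1
    # score[i][j] = best score for aligning seq_a[:i] with seq_b[:j]
    score = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        score[i][0] = i * gap  # prefix of seq_a aligned entirely against gaps
    for j in range(1, cols):
        score[0][j] = j * gap  # prefix of seq_b aligned entirely against gaps
    for i in range(1, rows):
        for j in range(1, cols):
            pair = match if seq_a[i - 1] == seq_b[j - 1] else mismatch
            diagonal = score[i - 1][j - 1] + pair  # align the two characters
            up = score[i - 1][j] + gap             # gap in seq_b
            left = score[i][j - 1] + gap           # gap in seq_a
            score[i][j] = max(diagonal, up, left)
    return score[-1][-1]

print(global_alignment_score("GATTACA", "GATTACA"))  # 7: identical sequences, all matches
print(global_alignment_score("GATTACA", "GATACA"))   # 4: six matches and one gap (6 - 2)
```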

5. Challenges in Bioinformatics:

a. Data Integration:

- Integrating data from multiple sources and platforms to gain a comprehensive understanding of biological systems.

b. Computational Complexity:

- Large-scale biological datasets pose computational challenges, requiring advanced algorithms and high-performance computing.

c. Biological Interpretation:

- Translating computational findings into meaningful biological insights and experimental validations.

d. Ethical and Privacy Considerations:

- Handling sensitive genetic and health-related data while ensuring privacy and ethical use.

6. Applications of Bioinformatics:

a. Disease Research and Diagnosis:

- Identifying genetic factors associated with diseases, predicting disease risk, and developing diagnostic tools.

b. Drug Discovery and Development:

- Analyzing biological data to discover potential drug targets, understand drug interactions, and optimize drug design.

c. Precision Medicine:

- Tailoring medical treatments based on an individual’s genetic makeup for personalized and effective interventions.

d. Agricultural Biotechnology:

- Improving crop yields, developing genetically modified organisms, and studying plant genetics for sustainable agriculture.

e. Comparative Genomics:

- Comparing genomes across species to understand evolutionary relationships and identify conserved genetic elements.

Bioinformatics continues to evolve with advancements in technology, contributing significantly to our understanding of life sciences and playing a pivotal role in various fields, including medicine, agriculture, and environmental science. The integration of data science techniques and computational tools has become indispensable for addressing complex biological questions and driving scientific discoveries in the genomic era.

B. Applications of Data Science in Bioinformatics

Data science plays a crucial role in bioinformatics by leveraging computational and statistical methods to analyze, interpret, and derive insights from biological data. Here are some key applications of data science in bioinformatics:

1. Genomic Data Analysis:

a. DNA Sequencing:

- Analyzing large-scale DNA sequencing data to identify genetic variations, mutations, and structural variations in the genome.

b. Variant Calling:

- Detecting and characterizing genetic variants, such as single nucleotide polymorphisms (SNPs) and insertions/deletions (indels), from genomic data.

c. Genome Assembly:

- Reconstructing the complete genome sequence from short DNA sequencing reads, addressing challenges related to repeat regions and structural variations.

d. Comparative Genomics:

- Comparing genomes across different species to understand evolutionary relationships, identify conserved regions, and study genome evolution.
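Many short-read genome assemblers are built on k-mers and de Bruijn graphs. The minimal sketch below shows the first two steps: counting overlapping k-mers, then linking each k-mer's (k−1)-mer prefix to its (k−1)-mer suffix. It omits error correction, reverse complements, and the graph traversal that produces contigs. The reads are hypothetical.

```python
from collections import Counter, defaultdict

def count_kmers(sequence, k):
    """Count every overlapping substring of length k."""
    return Counter(sequence[i:i + k] for i in range(len(sequence) - k + 1))

def de_bruijn_edges(reads, k):
    """Map each (k-1)-mer prefix to the set of (k-1)-mer suffixes that follow it."""
    kmers = set()
    for read in reads:
        kmers.update(count_kmers(read, k))  # distinct k-mers across all reads
    graph = defaultdict(set)
    for kmer in kmers:
        graph[kmer[:-1]].add(kmer[1:])      # edge: prefix node -> suffix node
    return graph

reads = ["ATGGCGT", "GGCGTGC", "CGTGCAA"]  # hypothetical overlapping short reads
print(count_kmers("ATGGCGT", 3))
for node, successors in sorted(de_bruijn_edges(reads, 3).items()):
    print(node, "->", sorted(successors))
```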

2. Transcriptomic Data Analysis:

a. RNA Sequencing (RNA-Seq):

- Analyzing transcriptomic data to quantify gene expression levels, identify alternative splicing events, and discover novel transcripts.

b. Differential Gene Expression Analysis:

- Identifying genes that are differentially expressed between different conditions or experimental groups, providing insights into biological processes.

c. Functional Annotation:

- Annotating genes based on their functions, pathways, and biological roles to understand the functional implications of gene expression.
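Differential expression can be illustrated on a toy expression matrix. This sketch assumes already-normalized expression values for four hypothetical genes under two conditions. For each gene it computes a log2 fold change and a Welch t-test, then applies a Benjamini-Hochberg correction to control the false discovery rate across genes. Dedicated RNA-seq tools model raw counts with more appropriate distributions, so treat this only as an illustration of the idea.

```python
import numpy as np
from scipy.stats import ttest_ind

rng = np.random.default_rng(seed=7)
genes = ["geneA", "geneB", "geneC", "geneD"]  # hypothetical gene names

# Synthetic normalized expression: one row per gene, five replicates per condition.
# geneA is simulated as about 4x higher when treated; the others change little or not at all.
control = rng.normal(loc=[[100], [50], [80], [20]], scale=5, size=(4, 5))
treated = rng.normal(loc=[[400], [50], [85], [20]], scale=5, size=(4, 5))

# Effect size: log2 of the ratio of mean expression (treated / control)
log2_fc = np.log2(treated.mean(axis=1) / control.mean(axis=1))

# Welch's t-test for each gene (row), not assuming equal variances
_, p_values = ttest_ind(treated, control, axis=1, equal_var=False)

# Benjamini-Hochberg: p_(i) * m / i on sorted p-values, made monotone and capped at 1
m = len(p_values)
order = np.argsort(p_values)
ranked = p_values[order] * m / np.arange(1, m + 1)
adjusted_sorted = np.minimum.accumulate(ranked[::-1])[::-1]
p_adjusted = np.empty(m)
p_adjusted[order] = np.minimum(adjusted_sorted, 1.0)

for gene, fc, q in zip(genes, log2_fc, p_adjusted):
    print(f"{gene}: log2FC = {fc:+.2f}, BH-adjusted p = {q:.3g}")
```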

3. Proteomic Data Analysis:

a. Protein Structure Prediction:

- Predicting the three-dimensional structure of proteins using computational methods, contributing to the understanding of protein function.

b. Protein-Protein Interaction Analysis:

- Identifying and characterizing interactions between proteins to understand cellular processes and signaling pathways.

c. Mass Spectrometry Data Analysis:

- Analyzing mass spectrometry data to identify and quantify proteins, peptides, and post-translational modifications.

4. Machine Learning Applications:

a. Disease Prediction and Diagnosis:

- Developing machine learning models to predict disease susceptibility, diagnose diseases based on genetic markers, and stratify patients for personalized treatment.

b. Drug Discovery and Target Identification:

- Applying machine learning algorithms to analyze biological data and predict potential drug targets, accelerating drug discovery and development.

c. Pharmacogenomics:

- Integrating genomic information with drug response data to personalize drug treatments, predicting how individuals will respond to specific medications.

d. Biological Function Prediction:

- Using machine learning to predict the functions of genes, proteins, or other biomolecules based on their sequences, structures, or interactions.

5. Network Analysis:

a. Biological Pathway Analysis:

- Analyzing biological pathways to understand the interactions and relationships among genes, proteins, and metabolites.

b. Gene Regulatory Network Inference:

- Inferring regulatory relationships between genes to understand how genes are controlled and coordinated in cellular processes.

c. Disease-Drug Interaction Networks:

- Constructing networks that represent interactions between diseases and drugs, facilitating the identification of potential drug repurposing opportunities.

6. Personalized Medicine:

a. Genomic Profiling for Treatment Selection:

- Using genomic information to guide the selection of targeted therapies and treatment strategies for individual patients.

b. Risk Prediction Models:

- Developing models to predict an individual’s risk of developing certain diseases based on their genetic and clinical information.

c. Clinical Decision Support Systems:

- Integrating bioinformatics data into clinical decision support systems to assist healthcare professionals in making personalized and evidence-based treatment decisions.

7. Metagenomics:

a. Microbiome Analysis:

- Studying the composition and functional potential of microbial communities in different environments, including the human gut microbiome.

b. Functional Metagenomic Analysis:

- Analyzing metagenomic data to understand the functional capabilities of microbial communities and their impact on host health.

The integration of data science techniques in bioinformatics has transformed biological research by enabling researchers to extract meaningful information from large and complex datasets. These applications contribute to advancements in genomics, personalized medicine, drug discovery, and our overall understanding of biological systems. As technology continues to advance, the role of data science in bioinformatics is expected to grow, further accelerating discoveries in the life sciences.

1. Genomic Data Analysis

Genomic data analysis applies computational and statistical techniques to interpret DNA sequencing data and extract meaningful insights from it. This analysis is crucial for understanding genetic variation, identifying genes associated with diseases, and uncovering the functional elements within the genome. Here are the key steps and methods involved in genomic data analysis:

a. DNA Sequencing:

– Definition:

- DNA sequencing is the process of determining the order of nucleotides (adenine, thymine, cytosine, and guanine) in a DNA molecule.

– Methods:

- Next-Generation Sequencing (NGS) platforms such as Illumina and Ion Torrent, together with long-read (third-generation) technologies such as PacBio, have revolutionized DNA sequencing by enabling high-throughput, cost-effective analysis.

b. Variant Calling:

– Definition:

- Variant calling is the process of identifying genetic variations, such as single nucleotide polymorphisms (SNPs) and insertions/deletions (indels), in a sequenced genome.

– Methods:

- Variant calling algorithms compare sequenced reads to a reference genome, identifying differences that may represent genetic variations.

# Example using GATK4 (Genome Analysis Toolkit) HaplotypeCaller
# The BAM must be sorted and indexed; the reference needs .fai and .dict index files
gatk HaplotypeCaller -R reference.fasta -I input.bam -O output.vcf.gz
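At its core, variant calling compares the bases observed at each reference position with the reference base. The sketch below is a deliberately naive pileup-based caller, assuming reads are already aligned without gaps and supplied as (start position, sequence) pairs. The function name and the min_depth and min_fraction thresholds are hypothetical; real callers such as HaplotypeCaller add base-quality models, local reassembly, and genotype likelihoods, so this only illustrates the idea.

```python
from collections import Counter, defaultdict

def call_snvs(reference, reads, min_depth=3, min_fraction=0.5):
    """Naive SNV caller over ungapped alignments (illustrative sketch only).

    reference: reference sequence (str)
    reads: list of (start, sequence) tuples with 0-based start positions
    Returns a list of (position, ref_base, alt_base, depth, alt_fraction).
    """
    pileup = defaultdict(Counter)  # position -> counts of observed bases
    for start, seq in reads:
        for offset, base in enumerate(seq):
            pos = start + offset
            if pos < len(reference):
                pileup[pos][base] += 1

    calls = []
    for pos in sorted(pileup):
        counts = pileup[pos]
        depth = sum(counts.values())
        if depth < min_depth:
            continue  # too few reads to make a call
        ref_base = reference[pos]
        alts = [(n, b) for b, n in counts.items() if b != ref_base]
        if not alts:
            continue
        n_alt, alt_base = max(alts)  # most frequent non-reference base
        fraction = n_alt / depth
        if fraction >= min_fraction:
            calls.append((pos, ref_base, alt_base, depth, fraction))
    return calls

reference = "ACGTACGTAC"
reads = [(0, "ACGTTCG"), (2, "GTTCGTA"), (3, "TTCGTAC"), (1, "CGTACGT")]
print(call_snvs(reference, reads))  # [(4, 'A', 'T', 4, 0.75)]
```

Three of the four reads covering position 4 show T where the reference has A, so the sketch reports a single A>T substitution at 75% allele fraction.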

c. Genome Assembly:

– Definition:

- Genome assembly involves reconstructing the complete DNA sequence of an organism from short DNA sequencing reads.

– Methods:

- De novo assembly methods build a genome without using a reference sequence, while reference-guided assembly aligns reads to a known reference.

# Example using SPAdes for de novo assembly

spades.py -o output_directory -1 forward_reads.fastq -2 reverse_reads.fastq
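SPAdes, like many short-read assemblers, is built around de Bruijn graphs. The toy sketch below shows the basic idea, assuming error-free reads from a single linear sequence in which no (k-1)-mer repeats: reads are split into k-mers, each k-mer becomes an edge between its prefix and suffix (k-1)-mers, and an Eulerian path through the graph spells out the sequence. The function name and example reads are hypothetical.

```python
from collections import defaultdict

def debruijn_assemble(reads, k):
    """Toy de Bruijn graph assembler for error-free reads from one linear sequence.

    Assumes no (k-1)-mer occurs more than once in the genome, so the graph is a
    single unambiguous path; real assemblers must handle errors and repeats.
    """
    # Distinct k-mers: coverage depth is ignored in this sketch
    kmers = {read[i:i + k] for read in reads for i in range(len(read) - k + 1)}

    graph = defaultdict(list)  # (k-1)-mer prefix -> list of (k-1)-mer suffixes
    indegree = defaultdict(int)
    for kmer in sorted(kmers):
        prefix, suffix = kmer[:-1], kmer[1:]
        graph[prefix].append(suffix)
        indegree[suffix] += 1

    # The path starts at the node with one more outgoing than incoming edge
    nodes = sorted(set(graph) | set(indegree))
    start = next(
        (n for n in nodes if len(graph.get(n, [])) - indegree.get(n, 0) == 1),
        nodes[0],
    )

    # Hierholzer's algorithm: follow unused edges until stuck, then backtrack
    remaining = {node: list(succ) for node, succ in graph.items()}
    stack, path = [start], []
    while stack:
        node = stack[-1]
        if remaining.get(node):
            stack.append(remaining[node].pop())
        else:
            path.append(stack.pop())
    path.reverse()

    # Spell the sequence: first node, then the last base of each later node
    return path[0] + "".join(node[-1] for node in path[1:])

reads = ["ATGGCG", "GGCGTG", "CGTGCA"]
print(debruijn_assemble(reads, k=4))  # ATGGCGTGCA
```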

d. Comparative Genomics:

– Definition:

- Comparative genomics compares genomes from different species to identify similarities, differences, and evolutionary relationships.

– Methods:

- Tools like BLAST (Basic Local Alignment Search Tool) are used to compare DNA sequences and identify homologous regions between genomes.

# Example using BLAST

blastn -query query_sequence.fasta -subject reference_genome.fasta -out result.txt
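BLAST uses heuristics (short word seeds that are extended into alignments) to find high-scoring local alignments quickly. The exact dynamic-programming method it approximates is Smith-Waterman local alignment, sketched below for the score only. The match, mismatch, and gap values are illustrative assumptions, not BLAST's defaults, and the gap penalty is linear for simplicity.

```python
def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-2):
    """Best local alignment score between sequences a and b (linear gap penalty)."""
    prev = [0] * (len(b) + 1)  # previous row of the DP matrix
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Scores are floored at 0, which is what makes the alignment local
            curr[j] = max(0, diag, prev[j] + gap, curr[j - 1] + gap)
            best = max(best, curr[j])
        prev = curr
    return best

print(smith_waterman_score("TTACGTAGG", "CCACGTACC"))  # 10
```

The two sequences share the local segment ACGTA (5 matches x 2 points), while their differing flanks are ignored rather than penalized.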

e. Functional Annotation:

– Definition:

- Functional annotation involves assigning biological functions to genes and other genomic elements.

– Methods:

- Tools like ANNOVAR and Ensembl provide functional annotations, including information about gene function, protein domains, and related pathways.

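# Example using ANNOVAR: first download the refGene database for hg38 into humandb/
annotate_variation.pl -downdb -buildver hg38 -webfrom annovar refGene humandb/
# Then run gene-based annotation on variants in ANNOVAR input format (variants.avinput)
annotate_variation.pl -geneanno -dbtype refGene -buildver hg38 variants.avinput humandb/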
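Gene-based annotation, as performed by tools like ANNOVAR, starts from a simple question: which genes overlap each variant? The sketch below answers only that question, assuming 1-based inclusive gene intervals. The gene names and coordinates are hypothetical, and real tools go further by classifying variants as exonic, intronic, or splicing and predicting amino acid changes.

```python
def annotate_variants(variants, genes):
    """Label each variant with the genes whose interval contains it.

    variants: list of (chrom, pos) tuples, 1-based positions
    genes: list of (chrom, start, end, name) tuples, 1-based inclusive intervals
    """
    annotations = []
    for chrom, pos in variants:
        hits = [name for g_chrom, start, end, name in genes
                if g_chrom == chrom and start <= pos <= end]
        annotations.append((chrom, pos, hits or ["intergenic"]))
    return annotations

# Hypothetical gene models and variant positions
genes = [("chr1", 100, 500, "GENE_A"), ("chr1", 400, 900, "GENE_B"),
         ("chr2", 50, 300, "GENE_C")]
variants = [("chr1", 450), ("chr1", 950), ("chr2", 120)]
for row in annotate_variants(variants, genes):
    print(row)
```

A linear scan is fine for a handful of genes; for genome-scale data an interval tree or sorted-interval search keeps lookups fast.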

f. Machine Learning in Genomic Data Analysis:

– Applications:

- Machine learning is increasingly used for tasks such as predicting gene functions, classifying disease-associated variants, and identifying regulatory elements in the genome.

– Methods:

- Random Forest, Support Vector Machines, and Neural Networks are among the machine learning algorithms applied to genomic data.

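# Example using scikit-learn for classification
# Synthetic data stands in for real features (e.g., variant annotations) and labels
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=200, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)
model = RandomForestClassifier(random_state=0)
model.fit(X_train, y_train)
print("Test accuracy:", model.score(X_test, y_test))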
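Classifiers such as the random forest above need numeric feature vectors, and a common way to turn DNA sequences into features is k-mer counting. The sketch below computes normalized k-mer frequencies in a fixed A/C/G/T order so every sequence maps to a vector of the same length (4^k); the function name is hypothetical, and the resulting lists could be passed as X to a scikit-learn model.

```python
from itertools import product

def kmer_features(sequence, k=2):
    """Return normalized k-mer frequencies in a fixed A/C/G/T vocabulary order."""
    vocab = ["".join(p) for p in product("ACGT", repeat=k)]
    index = {kmer: i for i, kmer in enumerate(vocab)}
    counts = [0] * len(vocab)
    total = 0
    for i in range(len(sequence) - k + 1):
        kmer = sequence[i:i + k].upper()
        if kmer in index:  # skip k-mers containing N or other ambiguous symbols
            counts[index[kmer]] += 1
            total += 1
    return [c / total if total else 0.0 for c in counts]

# One feature row per sequence; 2-mers give 16 features
X = [kmer_features(s) for s in ["ACGTACGT", "AAAATTTT"]]
print(len(X[0]), X[0][:4])
```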

g. Visualization and Interpretation:

– Tools:

- Visualization tools like IGV (Integrative Genomics Viewer) and genome browsers help researchers visualize and interpret genomic data, including variants, coverage, and annotations.

# Example launching IGV with a reference and a BAM file
# (the BAM must be indexed, with aligned_reads.bam.bai next to it)
igv.sh -g reference_genome.fasta aligned_reads.bam
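The coverage track a genome browser draws above a BAM file is per-base read depth. The sketch below computes it with a difference array, assuming alignments are already reduced to 0-based, half-open (start, end) intervals; in practice these would come from the BAM itself, for example via `samtools depth`. The function name and intervals are hypothetical.

```python
def coverage(intervals, length):
    """Per-base read depth from 0-based, half-open alignment intervals [start, end)."""
    diff = [0] * (length + 1)
    for start, end in intervals:
        diff[max(start, 0)] += 1          # a read begins here
        diff[min(end, length)] -= 1       # and stops covering here
    depth, running = [], 0
    for pos in range(length):
        running += diff[pos]
        depth.append(running)
    return depth

print(coverage([(0, 5), (2, 8), (4, 10)], 10))  # [1, 1, 2, 2, 3, 2, 2, 2, 1, 1]
```

The difference array records only where reads start and stop, so the cost is linear in the number of reads plus the region length rather than in their product.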

h. Integration with Other Omics Data:

– Definition:

- Integrating genomic data with other omics data, such as transcriptomics and proteomics, provides a more comprehensive understanding of biological processes.

– Methods:

- Integration tools and platforms, like Galaxy and Bioconductor, facilitate the analysis of multi-omics datasets.
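Most multi-omics integration begins by aligning measurements from different assays on a shared identifier, such as a gene ID. The sketch below performs that first step as a simple inner join of two gene-keyed tables; the gene names and values are hypothetical, and platforms like Galaxy and Bioconductor provide far richer normalization and statistical integration on top of this.

```python
def integrate_by_gene(variant_counts, expression):
    """Inner-join two gene-keyed tables into rows of (gene, n_variants, expression)."""
    shared = sorted(set(variant_counts) & set(expression))
    return [(gene, variant_counts[gene], expression[gene]) for gene in shared]

variant_counts = {"GENE_A": 3, "GENE_B": 0, "GENE_D": 5}     # hypothetical genomic summary
expression = {"GENE_A": 12.5, "GENE_B": 80.1, "GENE_C": 4.2}  # hypothetical expression values
for row in integrate_by_gene(variant_counts, expression):
    print(row)
```

Genes measured in only one assay (GENE_C and GENE_D here) drop out of an inner join; whether to keep them with missing values instead is a design decision for each analysis.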

Genomic data analysis is a dynamic and evolving field that leverages computational and statistical approaches to decipher the information embedded in DNA sequences. The integration of these analyses contributes to advancements in genetics, personalized medicine, and our understanding of the genetic basis of diseases.

2. Protein Structure Prediction

Protein structure prediction is a computational process that predicts the three-dimensional arrangement of atoms in a protein from its amino acid sequence. Understanding the spatial arrangement of amino acids in a protein is crucial for deciphering its function, its interactions with other molecules, and its role in various biological processes. Here are the key steps and methods involved in protein structure prediction:

a. Primary Structure:

– Definition:

- The primary structure of a protein refers to the linear sequence of amino acids that constitute the protein chain. It is determined by the sequence of nucleotides in the corresponding gene.

– Methods:

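- The amino acid sequence is usually inferred by translating a sequenced gene or transcript, or determined directly from the protein by mass spectrometry or Edman degradation. It is the input for the prediction steps that follow.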
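Because the primary structure is determined by the gene's nucleotide sequence, it can be obtained by translating a coding sequence codon by codon. The sketch below uses the standard genetic code and assumes the input starts in frame and contains no introns; organelles and some organisms use alternative genetic codes, which this sketch does not handle. The function and table names are hypothetical.

```python
BASES = "TCAG"
# Standard genetic code, with codons enumerated in TCAG order (TTT, TTC, TTA, TTG, TCT, ...)
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    a + b + c: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, a in enumerate(BASES)
    for j, b in enumerate(BASES)
    for k, c in enumerate(BASES)
}

def translate(dna):
    """Translate a coding DNA sequence in frame 1, stopping at the first stop codon."""
    dna = dna.upper()
    protein = []
    for i in range(0, len(dna) - 2, 3):
        aa = CODON_TABLE.get(dna[i:i + 3], "X")  # X for codons with ambiguous bases
        if aa == "*":
            break
        protein.append(aa)
    return "".join(protein)

print(translate("ATGGCCATTGTAATGGGCCGCTGAAAGGGTGCCCGATAG"))  # MAIVMGR
```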

b. Secondary Structure Prediction:

– Definition:

- Secondary structure refers to local folding patterns within a protein chain, including alpha helices, beta sheets, and loops.

– Methods:

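- PSIPRED predicts secondary structure from the amino acid sequence. DSSP (Define Secondary Structure of Proteins) works differently: it assigns secondary structure from the atomic coordinates of a known or modeled 3D structure, so it is typically used to define reference states rather than to make predictions.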

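# Example using PSIPRED's runpsipred wrapper script
# (requires PSI-BLAST and a sequence database, whose paths are configured inside the script)
runpsipred input_sequence.fasta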
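Because DSSP assigns secondary structure from 3D coordinates, its output is commonly used as the reference when evaluating sequence-based predictors such as PSIPRED. A standard summary metric is Q3, the fraction of residues whose three-state label (helix H, strand E, coil C) is predicted correctly. The sketch below assumes one common 8-to-3 state reduction (H, G, I to H; E, B to E; everything else to C); other conventions exist, and the example strings are hypothetical.

```python
# Common 8-to-3 state reduction: helices (H, G, I) -> H, strands (E, B) -> E, all else -> C
DSSP_TO_3 = {"H": "H", "G": "H", "I": "H", "E": "E", "B": "E"}

def reduce_dssp(dssp_states):
    """Map an 8-state DSSP string to 3 states (H, E, C)."""
    return "".join(DSSP_TO_3.get(s, "C") for s in dssp_states)

def q3(predicted, observed):
    """Fraction of residues whose 3-state prediction matches the observed state."""
    if len(predicted) != len(observed):
        raise ValueError("sequences must have the same length")
    matches = sum(p == o for p, o in zip(predicted, observed))
    return matches / len(observed)

observed = reduce_dssp("CHHHHGGTTEEEBSC")  # hypothetical DSSP assignment
predicted = "CCHHHHHCCEEECCC"              # hypothetical 3-state prediction
print(observed, round(q3(predicted, observed), 3))  # CHHHHHHCCEEEECC 0.867
```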

c. Tertiary Structure Prediction:

– Definition:

- Tertiary structure is the overall three-dimensional arrangement of a protein’s secondary structure elements.

– Methods:

- Comparative modeling (homology modeling) and ab initio (de novo) modeling are common approaches. Comparative modeling relies on the availability of a homologous protein structure, while ab initio modeling predicts the structure without relying on templates.

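# Example using MODELLER for comparative modeling (class names as in MODELLER 10)
from modeller import Environ
from modeller.automodel import AutoModel

env = Environ()
env.io.atom_files_directory = ['path/to/templates']  # where the template PDB files live

# alignment.ali aligns the target sequence with the template structure
a = AutoModel(env, alnfile='alignment.ali', knowns='template', sequence='target')
a.starting_model = 1
a.ending_model = 5  # build five candidate models
a.make()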

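# Example using Rosetta's AbinitioRelax application for ab initio modeling
# 3-mer and 9-mer fragment files must be generated beforehand (file names are placeholders)
AbinitioRelax.linuxgccrelease -in:file:fasta input_sequence.fasta -in:file:frag3 frags.3mers -in:file:frag9 frags.9mers -abinitio:relax -nstruct 100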
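Comparative modeling works best when the template is closely related to the target, so sequence identity over the target-template alignment is a quick first check; higher identity generally yields more reliable models. Identity can be defined in several ways; the sketch below assumes one common choice, counting identical residues over alignment columns where neither sequence has a gap ('-'). The function name and aligned sequences are hypothetical.

```python
def percent_identity(aligned_target, aligned_template):
    """Percent identity over columns where neither aligned sequence has a gap ('-')."""
    if len(aligned_target) != len(aligned_template):
        raise ValueError("aligned sequences must have equal length")
    pairs = [(a, b) for a, b in zip(aligned_target, aligned_template)
             if a != "-" and b != "-"]
    if not pairs:
        return 0.0
    identical = sum(a == b for a, b in pairs)
    return 100.0 * identical / len(pairs)

print(percent_identity("MKT-AYIAKQR", "MKTLAYVAKQ-"))
```

Here 9 columns are gap-free and 8 of them match, giving roughly 88.9% identity; dividing by the full alignment length or by the shorter sequence would give different numbers, so it is worth stating which definition a report uses.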

d. Quaternary Structure Prediction:

– Definition:

- Quaternary structure refers to the arrangement of multiple protein subunits in a complex, particularly relevant for proteins that function as complexes.

– Methods:

- Docking simulations predict how subunits fit together, while experimental techniques such as X-ray crystallography and cryo-electron microscopy determine the quaternary structure directly.

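# Example using HADDOCK 2.4 for protein-protein docking
# Run from a directory containing a run.param file that lists the input PDB files and restraints;
# HADDOCK 2.4 is also available as a web server
haddock2.4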

e. Validation and Model Assessment:

– Methods:

- Common quality metrics include Ramachandran plots (backbone dihedral angles), MolProbity scores (steric clashes and geometry), and the root mean square deviation (RMSD) from a reference structure when one is available.

# Example using MolProbity for structure validation

phenix.molprobity model.pdb
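RMSD measures the average distance between paired atoms of a model and a reference: RMSD = sqrt((1/N) * sum of |a_i - b_i|^2 over the N atom pairs). The sketch below assumes the two structures are already optimally superimposed (for example with the Kabsch algorithm) and that atoms are paired one-to-one, typically C-alpha atoms; the function name and coordinates are hypothetical.

```python
import math

def rmsd(coords_a, coords_b):
    """RMSD between two equal-length lists of (x, y, z) coordinates (already superimposed)."""
    if len(coords_a) != len(coords_b) or not coords_a:
        raise ValueError("need two non-empty coordinate lists of equal length")
    squared = sum((xa - xb) ** 2 + (ya - yb) ** 2 + (za - zb) ** 2
                  for (xa, ya, za), (xb, yb, zb) in zip(coords_a, coords_b))
    return math.sqrt(squared / len(coords_a))

model = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.5, 1.0, 0.0), (3.0, 0.0, 2.0)]
print(round(rmsd(model, reference), 3))  # 1.291
```

Skipping the superposition step would inflate the RMSD with rigid-body offsets that say nothing about model quality, which is why alignment comes first in practice.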

f. Visualization and Analysis:

– Tools:

- Visualization tools such as PyMOL, Chimera, and VMD allow researchers to visualize and analyze protein structures.

# Example using PyMOL for visualization

pymol input_structure.pdb

g. Integration with Experimental Data:

– Definition:

- Integrating computational predictions with experimental data, such as NMR spectroscopy or X-ray crystallography, improves the accuracy of protein structure predictions.

– Methods:

- Software like CNS (Crystallography and NMR System) is used to refine models based on experimental data.

# Example using CNS for structure refinement (CNS reads its input script from standard input)
cns < input.inp > output.log
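When a model is refined against X-ray data, agreement with experiment is commonly summarized by the crystallographic R-factor, R = sum(| |Fobs| - k|Fcalc| |) / sum(|Fobs|), where Fobs and Fcalc are observed and model-calculated structure-factor amplitudes and k is a scale factor; R-free is the same quantity computed on reflections held out from refinement. The sketch below assumes a simple ratio-of-sums scale when none is supplied, and the amplitudes are hypothetical; refinement programs such as CNS compute these values internally with more careful scaling.

```python
def r_factor(f_obs, f_calc, scale=None):
    """Crystallographic R = sum(| |Fobs| - k|Fcalc| |) / sum(|Fobs|).

    If no scale factor k is given, a simple ratio-of-sums scale is assumed.
    """
    f_obs = [abs(f) for f in f_obs]
    f_calc = [abs(f) for f in f_calc]
    k = scale if scale is not None else sum(f_obs) / sum(f_calc)
    return sum(abs(fo - k * fc) for fo, fc in zip(f_obs, f_calc)) / sum(f_obs)

f_obs = [120.0, 85.0, 40.0, 210.0]   # hypothetical observed amplitudes
f_calc = [115.0, 90.0, 45.0, 200.0]  # hypothetical model amplitudes
print(round(r_factor(f_obs, f_calc, scale=1.0), 3))  # 0.055
```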

h. Machine Learning in Protein Structure Prediction:

– Applications:

- Machine learning techniques, including deep learning, are increasingly used for aspects of protein structure prediction, such as contact prediction and structure refinement.

– Methods:

- AlphaFold, developed by DeepMind, uses deep learning to predict protein structures directly from amino acid sequences, in many cases with accuracy comparable to experimentally determined structures.

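# Example using AlphaFold 2 through its Docker wrapper, run from the AlphaFold repository
# (requires the downloaded genetic databases in $DOWNLOAD_DIR; a GPU is strongly recommended)
python3 docker/run_docker.py --fasta_paths=target.fasta --max_template_date=2022-01-01 --data_dir=$DOWNLOAD_DIR --output_dir=/path/to/output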
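Contact prediction, one of the tasks mentioned above, is trained against binary maps of residue pairs that are close in space. A widely used definition (for example in CASP) counts two residues as in contact when their C-beta atoms lie within 8 Å. The sketch below assumes C-alpha coordinates as a simplification and skips pairs fewer than six positions apart in sequence, an illustrative choice; the function name and the toy hairpin coordinates are hypothetical.

```python
import math

def contact_map(ca_coords, cutoff=8.0, min_separation=6):
    """Return (i, j) residue pairs whose C-alpha atoms lie within `cutoff` angstroms.

    Pairs closer than `min_separation` positions in sequence are skipped, since
    near neighbours are almost always in contact and carry little information.
    """
    contacts = []
    for i in range(len(ca_coords)):
        for j in range(i + min_separation, len(ca_coords)):
            if math.dist(ca_coords[i], ca_coords[j]) <= cutoff:
                contacts.append((i, j))
    return contacts

# Toy hairpin: residues 0-5 run along x, residues 6-11 run back 5 angstroms away
coords = [(3.8 * i, 0.0, 0.0) for i in range(6)] + \
         [(3.8 * (5 - i), 5.0, 0.0) for i in range(6)]
print(contact_map(coords))
```

In the toy hairpin, only residues facing each other across the two strands (and their immediate diagonal neighbours) fall within the cutoff, which is the kind of long-range pattern contact predictors aim to recover from sequence alone.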

Protein structure prediction is a challenging task, and various computational methods and tools are continually evolving to improve accuracy and efficiency. Integrating different computational approaches and leveraging experimental data enhance the reliability of predicted protein structures, contributing to advancements in structural biology, drug discovery, and our understanding of complex biological systems.

3. Drug Discovery and Development

Drug discovery and development is a complex and multi-stage process that involves the identification, design, testing, and optimization of potential therapeutic compounds to address specific diseases. Data science and computational methods play a crucial role in various stages of this process, enhancing efficiency and reducing the time and costs associated with bringing a new drug to market. Here are the key steps and applications of data science in drug discovery and development:

a. Target Identification and Validation:

– Definition:

- Identifying and validating molecular targets (proteins, genes, or other biological molecules) associated with a particular disease.

– Data Science Applications: