Machine Learning Applications in Genomic Data Analysis

April 22, 2024

Course Description:

This course explores the intersection of machine learning and genomics, focusing on how machine learning algorithms can be applied to analyze and interpret genomic data. Students will learn the fundamentals of machine learning, survey its applications in genomics, and gain hands-on experience analyzing real genomic datasets.

Course Objectives:

- Understand the basics of machine learning and its relevance to genomic data analysis.

- Learn about different machine learning algorithms and their applications in genomics.

- Gain practical skills in using machine learning tools for genomic data analysis.

- Apply machine learning techniques to solve real-world genomics problems.

Introduction to Machine Learning

Basics of machine learning (supervised, unsupervised, and reinforcement learning)

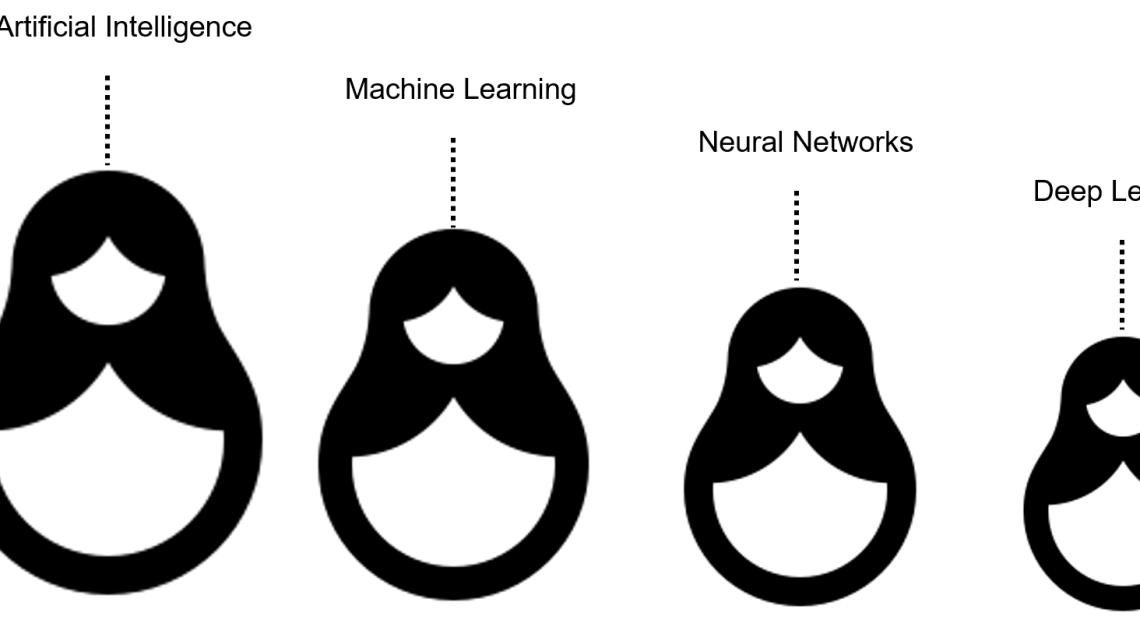

Machine learning is a branch of artificial intelligence (AI) that focuses on developing algorithms and techniques that enable computers to learn from and make predictions or decisions based on data. There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

- Supervised Learning:

- Supervised learning involves training a model on a labeled dataset, where each example is paired with the correct output or label.

- The goal of supervised learning is to learn a mapping from input features to the correct output labels, so that the model can make accurate predictions on new, unseen data.

- Examples of supervised learning algorithms include linear regression, logistic regression, decision trees, random forests, support vector machines (SVMs), and neural networks.

- Unsupervised Learning:

- Unsupervised learning involves training a model on an unlabeled dataset, where the model must find patterns or structure in the data without explicit guidance.

- The goal of unsupervised learning is to learn the underlying structure of the data, such as clustering similar data points together or reducing the dimensionality of the data.

- Examples of unsupervised learning algorithms include k-means clustering, hierarchical clustering, principal component analysis (PCA), and autoencoders.

- Reinforcement Learning:

- Reinforcement learning involves training a model to make sequences of decisions in an environment to achieve a goal.

- The model learns through trial and error, receiving feedback in the form of rewards or penalties for its actions.

- The goal of reinforcement learning is to learn a policy that maximizes the cumulative reward over time.

- Examples of reinforcement learning algorithms include Q-learning, deep Q-networks (DQN), and policy gradient methods.

Each type of machine learning has its strengths and weaknesses, and the choice of algorithm depends on the specific task and the nature of the data. Supervised learning is often used for tasks such as classification and regression, where the goal is to predict an output based on input features. Unsupervised learning is used for tasks such as clustering and dimensionality reduction, where the goal is to discover patterns or structure in the data. Reinforcement learning is used for tasks where the model must learn to make sequential decisions in a dynamic environment, such as game playing or robotic control.
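The reward-driven, trial-and-error loop of reinforcement learning can be sketched with tabular Q-learning. This is a minimal illustration, not a reference implementation: the five-state "chain" environment, the reward of 1 at the right-hand end, and all parameter values (`alpha`, `gamma`, `epsilon`, `seed`) are assumptions chosen only to keep the example small.

```python
import random

def train_q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy chain: states 0..n_states-1, goal at the right end."""
    rng = random.Random(seed)
    goal = n_states - 1
    # q[state][action]; action 0 = move left, action 1 = move right
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore at random, otherwise take the best-known action.
            # Ties are also broken at random so the untrained agent still wanders.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0

            # Q-learning update: move the estimate toward
            # reward + discounted value of the best next action.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train_q_learning()
# Greedy policy for every non-terminal state (1 means "move right").
policy = [max((0, 1), key=lambda a: q_table[s][a]) for s in range(len(q_table) - 1)]
print(policy)
```

The learned policy is read off the table by picking the highest-valued action in each state, which is the "policy that maximizes cumulative reward" described above.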

Overview of machine learning in genomics

Machine learning has become an integral tool in genomics, offering powerful methods for analyzing large-scale genomic data and extracting meaningful insights. Here is an overview of machine learning applications in genomics:

- Genomic sequence analysis:

- Machine learning algorithms can analyze DNA and RNA sequences to predict functional elements, such as coding regions, regulatory elements, and non-coding RNAs.

- Sequence-based models can also be used to predict the effects of genetic variants on gene function and disease risk.

- Gene expression analysis:

- Machine learning can analyze gene expression data, such as RNA-seq and microarray data, to identify patterns of gene expression associated with different biological conditions or disease states.

- Clustering algorithms can group genes with similar expression profiles, while classification algorithms can predict the biological function or disease subtype based on gene expression patterns.

- Variant calling and interpretation:

- Machine learning algorithms can improve the accuracy of variant calling from sequencing data by distinguishing true genetic variants from sequencing errors.

- Machine learning can also predict the functional effects of genetic variants, such as their impact on protein structure or function.

- Drug discovery and personalized medicine:

- Machine learning is used in drug discovery to predict the interactions between drugs and biological molecules, such as proteins or nucleic acids.

- Personalized medicine approaches use machine learning to analyze genomic data and predict the most effective treatments for individual patients based on their genetic makeup.

- Evolutionary genomics:

- Machine learning can analyze genomic data from multiple species to study evolutionary relationships and identify genes or genomic regions under positive selection.

- Phylogenetic tree construction and comparative genomics are areas where machine learning is increasingly applied alongside established statistical methods.

- Structural genomics:

- Machine learning algorithms can predict the three-dimensional structure of proteins based on their amino acid sequences.

- This is useful for understanding protein function and designing new drugs that target specific proteins.

- Epigenomics:

- Machine learning is used to analyze epigenomic data, such as DNA methylation and histone modification patterns, to study gene regulation and disease mechanisms.

Overall, machine learning has made it practical to analyze large and complex genomic datasets, supporting new discoveries about the genetic basis of health and disease.

Genomic Data Types and Sources

Introduction to genomic data (DNA sequences, gene expression data, etc.)

Genomic data refers to the vast amount of information stored in the genetic material of an organism, including DNA sequences, gene expression data, and other types of molecular data. Here is an introduction to some key types of genomic data:

- DNA sequences:

- DNA (deoxyribonucleic acid) is the molecule that carries the genetic instructions for the development, functioning, growth, and reproduction of all known living organisms.

- DNA sequences are composed of four nucleotide bases: adenine (A), cytosine (C), guanine (G), and thymine (T).

- The order of these bases in a DNA sequence encodes the genetic information necessary for the synthesis of proteins and the regulation of gene expression.

- Gene expression data:

- Gene expression refers to the process by which the information encoded in a gene is used to synthesize a functional gene product, such as a protein or RNA molecule.

- Gene expression data measures the levels of gene expression in a cell, tissue, or organism under specific conditions.

- Gene expression data can be obtained using techniques such as RNA sequencing (RNA-seq) or microarray analysis.

- Genetic variants:

- Genetic variants are differences in the DNA sequence that can occur between individuals in a population.

- Common types of genetic variants include single nucleotide polymorphisms (SNPs), which involve a change in a single nucleotide base, and insertions or deletions (indels), which involve the addition or removal of nucleotides in the DNA sequence.

- Epigenomic data:

- Epigenomics is the genome-wide study of epigenetic marks, which influence gene expression or cellular phenotype without changing the underlying DNA sequence.

- Epigenomic data includes information on DNA methylation, histone modifications, and other epigenetic marks that regulate gene expression and cellular function.

- Proteomics data:

- Proteomics is the large-scale study of proteins, including their structures, functions, and interactions.

- Proteomics data includes information on the abundance, localization, and post-translational modifications of proteins in a cell or organism.

- Metagenomic data:

- Metagenomics is the study of genetic material recovered directly from environmental samples, such as soil or water, which contains a mixture of genomes from multiple organisms.

- Metagenomic data can be used to study microbial communities and their genetic diversity, as well as their functional potential.

These are just a few examples of the types of genomic data that are used in biological research to understand the genetic basis of health and disease, evolutionary relationships, and the functioning of biological systems at the molecular level.
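Before a DNA sequence can be passed to most machine learning models, it has to be turned into numbers. The sketch below shows three common encodings: GC content, one-hot vectors, and k-mer counts. The function names and the example sequence are made up for illustration, and the A, C, G, T column order is an arbitrary convention.

```python
from collections import Counter

BASES = "ACGT"  # column order for the one-hot encoding (a convention, not a standard)

def gc_content(seq):
    """Fraction of bases that are G or C."""
    seq = seq.upper()
    return (seq.count("G") + seq.count("C")) / len(seq)

def one_hot(seq):
    """Encode each base as a 4-element vector; unknown bases such as N become all zeros."""
    return [[1 if base == b else 0 for b in BASES] for base in seq.upper()]

def kmer_counts(seq, k):
    """Count every overlapping substring of length k."""
    seq = seq.upper()
    return Counter(seq[i:i + k] for i in range(len(seq) - k + 1))

example = "ATGCGC"  # made-up sequence for illustration
print(gc_content(example))
print(one_hot(example)[:2])
print(kmer_counts(example, 2))
```

One-hot encoding preserves base order and suits sequence models, while k-mer counts discard position and give a fixed-length feature vector that works with classical algorithms.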

Common genomic data sources (public databases, experimental data)

There are several common sources of genomic data, including public databases and experimental data repositories, where researchers can access and contribute genomic information. Here are some of the most widely used genomic data sources:

- GenBank: GenBank is a comprehensive public database maintained by the National Center for Biotechnology Information (NCBI) that contains annotated DNA sequences from a wide range of organisms. It includes sequences submitted by researchers as well as those generated by large-scale sequencing projects.

- Ensembl: Ensembl is a genome browser and database that provides access to a wide range of genomic data for vertebrate and model organism genomes. It includes genome assemblies, gene annotations, and comparative genomics data.

- UCSC Genome Browser: The UCSC Genome Browser is a widely used tool for visualizing and analyzing genomic data. It provides access to a large collection of genome assemblies and annotations, as well as tools for comparative genomics and data integration.

- The Cancer Genome Atlas (TCGA): TCGA is a comprehensive resource that provides genomic, transcriptomic, and clinical data for a large number of cancer samples. It has been instrumental in advancing our understanding of cancer biology and identifying potential therapeutic targets.

- Gene Expression Omnibus (GEO): GEO is a public repository maintained by the NCBI that archives and distributes gene expression data from a variety of experimental platforms. It includes data from both microarray and RNA-seq experiments.

- European Nucleotide Archive (ENA): ENA is a comprehensive public database that archives and distributes nucleotide sequence data, including DNA sequences, RNA sequences, and raw sequencing data. It is maintained by the European Bioinformatics Institute (EMBL-EBI).

- Sequence Read Archive (SRA): SRA is a public archive maintained by the NCBI that stores raw sequencing data from a variety of high-throughput sequencing platforms. It provides a valuable resource for researchers to access and analyze raw sequencing data from a wide range of studies.

- 1000 Genomes Project: The 1000 Genomes Project was an international collaboration that built a comprehensive catalog of human genetic variation. The project generated whole-genome sequencing data for more than 2,500 individuals from diverse populations, providing valuable insights into human genetic diversity.

These are just a few examples of the many public databases and repositories that provide access to genomic data. These resources play a crucial role in advancing genomic research by providing researchers with access to a wealth of data for analysis and interpretation.

Machine Learning Algorithms for Genomic Data Analysis

Supervised learning algorithms (decision trees, random forests, support vector machines)

Supervised learning algorithms are used in machine learning to learn the mapping between input features and output labels from labeled training data. Here are three common supervised learning algorithms:

- Decision Trees:

- Decision trees are a simple and interpretable model that recursively partitions the feature space into regions, based on the value of the input features.

- Each internal node of the tree represents a decision based on a feature, and each leaf node represents a class label or a regression value.

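- Decision trees can handle both numerical and categorical data and are relatively insensitive to outliers in the input features. Support for missing values depends on the implementation; some handle them natively (for example, through surrogate splits), while others require imputation first.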

- However, decision trees can be prone to overfitting, especially with complex datasets.

- Random Forests:

- Random forests are an ensemble learning method that combines multiple decision trees to improve performance and reduce overfitting.

- Each tree in the random forest is trained on a bootstrap sample of the training data (drawn with replacement), and only a random subset of the features is considered at each split.

- Random forests are less prone to overfitting than individual decision trees and can handle high-dimensional data.

- They are widely used for classification and regression tasks and are known for their high accuracy and robustness.

- Support Vector Machines (SVM):

- Support Vector Machines are a powerful supervised learning algorithm used for classification and regression tasks.

- SVMs find the hyperplane that best separates the classes in the feature space, with the largest margin between the classes.

- SVMs can handle high-dimensional data. When the classes are not linearly separable, a kernel function implicitly maps the data into a higher-dimensional space in which a separating hyperplane can be found.

- SVMs are less interpretable compared to decision trees but can provide high accuracy and generalization performance.

These supervised learning algorithms are widely used in various applications, including bioinformatics, healthcare, finance, and many others, for tasks such as classification, regression, and anomaly detection.
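To make the idea of partitioning the feature space concrete, the sketch below finds the single best split for one decision tree node using Gini impurity. Gini is one common splitting criterion, assumed here for illustration; a full tree would apply the same search recursively to each side, and a random forest would repeat it over bootstrap samples and feature subsets. The expression values and labels are invented.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(rows, labels):
    """Return (feature_index, threshold, weighted_gini) for the best single split."""
    best = None
    n = len(rows)
    for feature in range(len(rows[0])):
        values = sorted({row[feature] for row in rows})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (feature, threshold, score)
    return best

# Made-up expression levels of two hypothetical genes in six samples.
rows = [[2.0, 7.0], [3.0, 1.0], [2.5, 6.0], [8.0, 2.0], [9.0, 7.5], [7.5, 1.5]]
labels = ["normal", "normal", "normal", "tumor", "tumor", "tumor"]

feature, threshold, impurity = best_split(rows, labels)
print(feature, threshold, impurity)
```

In this toy data the first gene separates the two classes perfectly, so the search selects it and reports a weighted impurity of zero.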

Unsupervised learning algorithms (clustering, dimensionality reduction)

Unsupervised learning algorithms are used to find patterns or structure in unlabeled data. Two common types of unsupervised learning algorithms are clustering algorithms and dimensionality reduction techniques:

- Clustering Algorithms:

- Clustering algorithms group similar data points together based on their features, without any prior knowledge of class labels.

- K-means clustering is a popular clustering algorithm that partitions the data into k clusters, where each data point belongs to the cluster with the nearest mean.

- Hierarchical clustering builds a hierarchy of clusters by recursively merging or splitting clusters based on a similarity measure.

- Density-based clustering algorithms, such as DBSCAN, group together closely packed data points and identify outliers as noise.

- Dimensionality Reduction Techniques:

- Dimensionality reduction techniques reduce the number of features in a dataset while preserving important information.

- Principal Component Analysis (PCA) is a widely used dimensionality reduction technique that transforms the data into a new coordinate system such that the greatest variance lies on the first axis (principal component), the second greatest variance on the second axis, and so on.

- t-Distributed Stochastic Neighbor Embedding (t-SNE) is another technique used for visualizing high-dimensional data by reducing it to two or three dimensions while preserving the local structure of the data.

Unsupervised learning algorithms are used in various applications, such as customer segmentation, anomaly detection, and exploratory data analysis. They can help uncover hidden patterns in data and provide valuable insights into the underlying structure of the data.
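The k-means procedure described above alternates between two steps: assign each point to its nearest centroid, then move each centroid to the mean of its points. The following sketch implements that loop (Lloyd's algorithm) in plain Python. Using the first `k` points as initial centroids and running a fixed number of iterations are simplifications assumed here; practical implementations use better initialization and a convergence check. The data values are invented.

```python
def kmeans(points, k, iterations=20):
    """Lloyd's algorithm with the first k points as initial centroids (a simplification)."""
    centroids = [list(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        # (squared Euclidean distance).
        for i, p in enumerate(points):
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            assignments[i] = distances.index(min(distances))
        # Update step: move each centroid to the mean of its assigned points.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:
                centroids[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignments, centroids

# Made-up expression values for two hypothetical genes across six samples.
samples = [(1.0, 1.0), (5.0, 5.0), (1.2, 0.8), (5.2, 4.9), (0.9, 1.1), (4.8, 5.1)]
assignments, centroids = kmeans(samples, k=2)
print(assignments)
print(centroids)
```

No labels are supplied at any point; the grouping emerges only from distances between samples, which is what distinguishes clustering from classification.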

Deep learning approaches (convolutional neural networks, recurrent neural networks)

Deep learning approaches are a class of machine learning techniques that use artificial neural networks with multiple layers to extract and learn features from complex data. Two common types of deep learning approaches are convolutional neural networks (CNNs) and recurrent neural networks (RNNs):

- Convolutional Neural Networks (CNNs):

- CNNs were developed primarily for visual data, such as images and videos, but one-dimensional CNNs are also applied to DNA and RNA sequences, where filters act as learned motif detectors.

- They are designed to automatically and adaptively learn spatial hierarchies of features from the input data.

- CNNs consist of multiple layers, including convolutional layers, pooling layers, and fully connected layers.

- Convolutional layers apply filters (kernels) to the input data to extract features, while pooling layers downsample the feature maps to reduce computation.

- CNNs have been highly successful in tasks such as image classification, object detection, and image segmentation.

- Recurrent Neural Networks (RNNs):

- RNNs are designed to model sequential data, such as time series data or natural language text.

- Unlike feedforward neural networks, RNNs have connections that form directed cycles, allowing information to persist over time.

- RNNs can process inputs of variable length and can, in principle, carry information across many time steps.

- However, traditional RNNs suffer from the vanishing gradient problem, which limits their ability to learn long-range dependencies.

- Variants of RNNs, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), have been developed to address the vanishing gradient problem and improve the learning of long-term dependencies.

Deep learning approaches, including CNNs and RNNs, have achieved remarkable success in various applications, such as image and speech recognition, natural language processing, and medical image analysis. They have significantly advanced the field of artificial intelligence and continue to be a focus of research and development in machine learning.
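The filter-then-pool pattern of a convolutional layer can be illustrated on a DNA sequence without any deep learning library. The sketch below is a forward pass only, under stated assumptions: the filter weights are set by hand to reward the motif "TATA", whereas a real CNN would learn its weights during training, and the sequence is made up.

```python
BASES = "ACGT"

def one_hot(seq):
    """Encode each base as a 4-element vector in A, C, G, T order."""
    return [[1.0 if base == b else 0.0 for b in BASES] for base in seq]

def conv1d(encoded, kernel):
    """Slide the kernel along the sequence; each output is a dot product over one window."""
    width = len(kernel)
    outputs = []
    for start in range(len(encoded) - width + 1):
        window = encoded[start:start + width]
        outputs.append(sum(x * w
                           for row, kernel_row in zip(window, kernel)
                           for x, w in zip(row, kernel_row)))
    return outputs

def relu(values):
    """Rectified linear activation: negative responses are set to zero."""
    return [max(0.0, v) for v in values]

# Hand-set filter: +1 where the base matches the motif, -1 otherwise.
# In a trained CNN these weights would be learned, not written by hand.
motif = "TATA"
kernel = [[1.0 if b == base else -1.0 for b in BASES] for base in motif]

sequence = "GGTATACC"  # made-up sequence
activations = relu(conv1d(one_hot(sequence), kernel))
best_position = activations.index(max(activations))  # global max pooling keeps the peak
print(activations, best_position)
```

The filter responds most strongly where the window matches the motif, and max pooling reduces the whole activation track to that peak, which is how a convolutional layer reports "this pattern occurs somewhere in the input."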

Applications of Machine Learning in Genomics

Genomic sequence analysis (sequence alignment, variant calling)

Genomic sequence analysis is a key area of bioinformatics that involves analyzing DNA and RNA sequences to understand their structure, function, and evolution. Two important tasks in genomic sequence analysis are sequence alignment and variant calling:

- Sequence Alignment:

- Sequence alignment is the process of comparing two or more sequences to identify similarities and differences between them.

- Pairwise sequence alignment is used to align two sequences, while multiple sequence alignment is used to align three or more sequences.

- Alignment algorithms find the best alignment under a scoring system. For pairwise alignment, Needleman-Wunsch computes a global alignment and Smith-Waterman a local one; ClustalW and MAFFT are common tools for multiple alignment.

- Sequence alignment is used in various applications, such as identifying conserved regions in sequences, predicting protein structure, and evolutionary analysis.

- Variant Calling:

- Variant calling is the process of identifying genetic variations, such as single nucleotide polymorphisms (SNPs), insertions, deletions, and structural variants, in a genome or a set of genomes.

- Variant calling involves comparing sequencing reads to a reference genome to identify differences between the sample and the reference.

- Variant calling tools, such as GATK, BCFtools (the variant-calling companion to SAMtools), and FreeBayes, use statistical models to differentiate true genetic variants from sequencing errors and artifacts.

- Variant calling is important for understanding genetic diversity, identifying disease-causing mutations, and personalized medicine.

Sequence alignment and variant calling are fundamental tasks in genomic research and are essential for interpreting genomic data. They provide valuable insights into the genetic makeup of organisms, the genetic basis of diseases, and the evolution of species.
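Pairwise global alignment with a scoring system can be sketched with the Needleman-Wunsch dynamic programming algorithm. The scores used here (match +1, mismatch -1, linear gap penalty -1) are illustrative assumptions; real tools typically use substitution matrices and affine gap penalties. The example covers alignment only, not variant calling.

```python
def needleman_wunsch(a, b, match=1, mismatch=-1, gap=-1):
    """Global alignment by dynamic programming; returns (score, aligned_a, aligned_b)."""
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]
    # First row and column: aligning a prefix against nothing costs one gap per base.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap

    def substitution(i, j):
        return match if a[i - 1] == b[j - 1] else mismatch

    # Fill the table: each cell is the best of a diagonal move (match or mismatch),
    # a move down (gap in b), or a move right (gap in a).
    for i in range(1, rows):
        for j in range(1, cols):
            score[i][j] = max(score[i - 1][j - 1] + substitution(i, j),
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)

    # Trace back from the bottom-right corner to recover one optimal alignment.
    aligned_a, aligned_b = [], []
    i, j = len(a), len(b)
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + substitution(i, j):
            aligned_a.append(a[i - 1])
            aligned_b.append(b[j - 1])
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            aligned_a.append(a[i - 1])
            aligned_b.append("-")
            i -= 1
        else:
            aligned_a.append("-")
            aligned_b.append(b[j - 1])
            j -= 1
    return score[len(a)][len(b)], "".join(reversed(aligned_a)), "".join(reversed(aligned_b))

alignment_score, top, bottom = needleman_wunsch("GATTACA", "GATACA")
print(alignment_score)
print(top)
print(bottom)
```

When several alignments share the best score, the traceback returns just one of them, chosen by the order in which the three moves are checked.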