Introduction to Bioinformatics for Computer Science Students

October 8, 2023

This course is designed to provide you with a comprehensive understanding of bioinformatics, starting from the basics of biology and gradually transitioning into computational methods and practical applications. Along the way, you will learn essential programming skills in Perl and Python, which are commonly used in bioinformatics. By the end of this course, you should be well-equipped to apply your computer science knowledge to solve biological problems using bioinformatics tools and techniques.

Module 1: Introduction to Biology and Molecular Biology

Lesson 1: Basics of Biology

Cellular structure and function are fundamental concepts in biology that explain the organization and activities of living organisms at the cellular level. Cells are the basic units of life and perform various functions that are essential for the survival and functioning of an organism. Here’s an overview of cellular structure and function:

Cellular Structure:

- Cell Membrane (Plasma Membrane): The cell membrane is a semi-permeable lipid bilayer that encloses the cell and separates its internal contents from the external environment. It controls the passage of molecules in and out of the cell.

- Cytoplasm: The cytoplasm is the jelly-like substance inside the cell membrane where various cellular organelles are suspended. It contains water, ions, and molecules necessary for cell metabolism.

- Nucleus: The nucleus is the control center of the cell and contains genetic material (DNA) organized into chromosomes. It is where DNA is replicated, and by controlling which genes are expressed, it regulates cell activities such as growth and protein synthesis.

- Endoplasmic Reticulum (ER): The ER is a network of membranes involved in protein and lipid synthesis. Rough ER has ribosomes on its surface and is involved in protein synthesis, while smooth ER is involved in lipid metabolism.

- Ribosomes: Ribosomes are the cellular machinery responsible for protein synthesis. They can be found free in the cytoplasm or attached to the rough ER.

- Golgi Apparatus: The Golgi apparatus processes, modifies, and packages proteins and lipids produced by the ER. It prepares these molecules for transport within or outside the cell.

- Mitochondria: Mitochondria are often referred to as the “powerhouses” of the cell because they produce adenosine triphosphate (ATP), the cell’s energy currency, through cellular respiration.

- Lysosomes: Lysosomes contain enzymes that break down waste materials, cellular debris, and foreign substances. They play a crucial role in cellular digestion and recycling.

- Vacuoles: Vacuoles are membrane-bound sacs that store water, nutrients, or waste products. In plant cells, a large central vacuole helps maintain turgor pressure and store nutrients.

- Cytoskeleton: The cytoskeleton is a network of protein filaments (microtubules, microfilaments, and intermediate filaments) that provides structural support, facilitates cell movement, and aids in intracellular transport.

Cellular Functions:

- Energy Production: Cells generate ATP through processes such as glycolysis in the cytoplasm and aerobic respiration in the mitochondria.

- DNA Replication and Protein Synthesis: Cells replicate their DNA and synthesize proteins using the genetic information stored in the nucleus.

- Transport: The cell membrane regulates the passage of substances in and out of the cell, ensuring a controlled internal environment.

- Metabolism: Cells carry out metabolic reactions, including catabolic (breakdown) and anabolic (synthesis) processes to maintain homeostasis.

- Response to Stimuli: Cells can respond to external and internal stimuli, allowing organisms to adapt to changes in their environment.

- Cell Division: Cells divide through processes like mitosis and meiosis, allowing for growth, repair, and reproduction.

- Waste Elimination: Lysosomes and vacuoles help remove waste materials from the cell.

- Cellular Communication: Cells communicate with each other through chemical signals, enabling coordination within tissues and organ systems.

Understanding cellular structure and function is essential for comprehending the biology of living organisms and how they carry out the processes necessary for life. These processes occur in a highly organized and coordinated manner within cells, contributing to the overall functioning of an organism.

Cell Types:

Cells can be classified into two main categories based on their structural and functional characteristics:

- Prokaryotic Cells: Prokaryotic cells lack a true nucleus and membrane-bound organelles. They are typically smaller and simpler in structure than eukaryotic cells. Bacteria and archaea are examples of prokaryotic organisms.

- Eukaryotic Cells: Eukaryotic cells have a true nucleus that encloses their genetic material and possess membrane-bound organelles. Eukaryotes include a wide range of organisms, such as plants, animals, fungi, and protists.

Specialized Cell Types:

Within multicellular organisms, different cell types have unique structures and functions tailored to their roles in specific tissues and organs. Here are a few examples:

- Neurons: Neurons are specialized cells of the nervous system that transmit electrical signals. They have branched extensions called dendrites, which receive signals, and an axon, which can be very long and carries signals on to other cells.

- Muscle Cells: Muscle cells, or muscle fibers, are specialized for contraction. They contain bundles of myofilaments and are responsible for movement in animals.

- Red Blood Cells (Erythrocytes): Mature red blood cells in mammals lack a nucleus and are filled with hemoglobin, a protein that carries oxygen from the lungs to body tissues.

- White Blood Cells (Leukocytes): White blood cells are essential components of the immune system, defending the body against infections and foreign invaders.

- Adipocytes: Adipocytes are fat cells responsible for storing energy in the form of lipids.

Cells within an organism can vary greatly in terms of size, shape, and function. This diversity is a result of differentiation, where cells undergo specific changes in structure and function during development to serve particular roles within the organism.

Cellular Regulation:

Cells maintain homeostasis, a stable internal environment, through a variety of regulatory mechanisms. This includes feedback loops, signaling pathways, and gene expression regulation. For example, cells can respond to changes in temperature, pH, and nutrient levels to ensure optimal functioning.

Cell Division:

Cell division is a crucial process that allows for growth, repair, and reproduction. In multicellular organisms, it ensures that the body can replace damaged or dying cells and is responsible for the development from a single fertilized egg into a complex organism.

Cell death is regulated as well: apoptosis, or programmed cell death, is a controlled process by which cells die to maintain tissue integrity and eliminate damaged or unnecessary cells.

Cellular Dysfunction:

When cellular processes are disrupted or malfunction, it can lead to various diseases. For example, cancer is often the result of uncontrolled cell division, while genetic disorders can arise from mutations in cellular DNA.

In summary, cellular structure and function are fundamental aspects of biology. Understanding how cells are organized and the roles they play in maintaining life processes is essential for comprehending the biology of organisms and the mechanisms underlying health and disease. Cellular biology is a dynamic field that continues to advance our understanding of life at its most basic level.

Cellular Communication:

Cells communicate with each other through a variety of mechanisms, including chemical signaling. Signaling molecules such as hormones, neurotransmitters, and cytokines are released by one cell and received by specific receptors on the surface of target cells. This communication is crucial for coordinating cellular activities within tissues and organs, regulating growth, and responding to changes in the environment.

Stem Cells:

Stem cells are unique cells with the ability to differentiate into various cell types. They play a vital role in development, tissue repair, and regeneration. There are two primary types of stem cells:

- Embryonic Stem Cells: These are pluripotent stem cells found in early embryos and have the potential to become any cell type in the body.

- Adult (Somatic) Stem Cells: These are found in various tissues throughout an organism’s life and are more limited in their differentiation potential compared to embryonic stem cells. They primarily contribute to tissue repair and maintenance.

Cellular Adaptation:

Cells can adapt to changing conditions and stressors. For example, when exposed to low oxygen levels (hypoxia), cells stabilize hypoxia-inducible factors (HIFs), transcription factors that switch on genes promoting oxygen delivery and cell survival.

Cellular Aging:

Cells undergo aging processes over time, which can result in reduced function and increased vulnerability to damage. Telomere shortening, oxidative stress, and DNA damage are factors associated with cellular aging. Understanding these processes is important in the context of aging-related diseases and longevity research.

Advances in microscopy, genomics, and biotechnology have revolutionized our ability to study cellular structure and function. Techniques like CRISPR-Cas9 gene editing, single-cell RNA sequencing, and super-resolution microscopy have opened up new avenues for research and therapeutic development.

Medical Applications:

Cellular biology has numerous practical applications in medicine, including the development of targeted therapies for diseases like cancer, stem cell-based regenerative medicine, and the study of infectious diseases at the cellular level.

The study of cellular biology also raises ethical questions, particularly in areas like cloning, genetic modification, and stem cell research. These areas require careful ethical and societal considerations.

In conclusion, cellular structure and function are intricate and dynamic processes that underlie all life forms. They involve a complex interplay of molecules, organelles, and regulatory mechanisms, and they are central to our understanding of biology, health, and disease. Ongoing research in cellular biology continues to deepen our knowledge of life’s fundamental processes and holds promise for innovative medical treatments and scientific breakthroughs in the future.

DNA, RNA, and protein

DNA, RNA, and proteins are three crucial biomolecules that play essential roles in the functioning of cells and living organisms. Each of these molecules has distinct structures and functions:

1. DNA (Deoxyribonucleic Acid):

- Structure: DNA is a double-stranded, helical molecule made up of nucleotides. Each nucleotide consists of a sugar (deoxyribose), a phosphate group, and one of four nitrogenous bases: adenine (A), cytosine (C), guanine (G), or thymine (T). The two strands of DNA are held together by hydrogen bonds between complementary base pairs (A-T and C-G).

- Function: DNA serves as the genetic blueprint of an organism. It contains the instructions for the synthesis of proteins and the regulation of cellular processes. DNA is found in the nucleus of eukaryotic cells (with small amounts also in mitochondria and, in plants, chloroplasts) and in the nucleoid region of prokaryotic cells.

- Replication: DNA replication is the process by which a cell copies its DNA before cell division. This ensures that each daughter cell receives an identical set of genetic information.

2. RNA (Ribonucleic Acid):

- Structure: RNA is a single-stranded molecule similar in structure to DNA, but it uses ribose sugar instead of deoxyribose and includes the bases adenine (A), cytosine (C), guanine (G), and uracil (U) instead of thymine (T). RNA comes in various forms, including messenger RNA (mRNA), transfer RNA (tRNA), and ribosomal RNA (rRNA).

- Function: RNA plays several crucial roles in gene expression and protein synthesis.

- mRNA carries the genetic information from DNA to the ribosome for protein synthesis.

- tRNA brings amino acids to the ribosome during protein synthesis.

- rRNA is a component of ribosomes, the cellular machinery responsible for protein synthesis.

- Transcription: Transcription is the process by which an RNA molecule is synthesized from a DNA template. During transcription, a specific segment of DNA is copied into a complementary RNA molecule; for protein-coding genes, this molecule is mRNA.

3. Proteins:

- Structure: Proteins are large, complex molecules made up of amino acids. There are 20 standard amino acids that can be combined in various sequences to form proteins. The sequence of amino acids determines the protein’s unique three-dimensional structure, which is critical for its function.

- Function: Proteins are incredibly diverse in their functions and are involved in nearly every aspect of cellular life. Some key functions of proteins include:

- Enzymes: Catalysts that speed up chemical reactions.

- Structural Proteins: Provide support and maintain cell shape.

- Transport Proteins: Facilitate the movement of molecules across cell membranes.

- Antibodies: Part of the immune system’s defense against pathogens.

- Hormones: Regulate various physiological processes.

- Receptors: Bind to signaling molecules and transmit signals within cells.

- Translation: Translation is the process by which the information encoded in mRNA is used to build a specific protein. It occurs at ribosomes, where tRNA molecules bring the appropriate amino acids to the growing polypeptide chain based on the mRNA sequence.

Together, DNA, RNA, and proteins are intimately linked in the central dogma of molecular biology. DNA contains the genetic information, which is transcribed into mRNA. This mRNA is then translated into proteins, which carry out the majority of cellular functions, making these biomolecules essential components of life.

The genetic code is the set of rules that determines how nucleotide triplets in mRNA, called codons, correspond to specific amino acids during protein synthesis. Because each codon consists of three bases drawn from four possibilities (A, C, G, U), there are 4 × 4 × 4 = 64 possible codons. Of these, 61 specify amino acids (one of them, AUG, codes for methionine and usually also serves as the start signal), and the remaining three (UAA, UAG, and UGA) are stop signals. The genetic code is nearly universal, meaning the same codons typically code for the same amino acids in all organisms.
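To make the codon arithmetic concrete, here is a minimal Python sketch that builds the standard genetic code as a dictionary and counts its codons. The names `CODON_TABLE` and `AMINO_ACIDS` are illustrative, not from any library. The 64-letter string encodes the standard code with one-letter amino acid abbreviations and `*` for a stop codon; the variant codes used by a few organisms and organelles are not modeled.

```python
from collections import Counter
from itertools import product

# Standard genetic code in the conventional "UCAG" order: the first codon
# position changes slowest and the third position changes fastest.
# "*" marks a stop codon; letters are one-letter amino acid codes.
BASES = "UCAG"
AMINO_ACIDS = (
    "FFLLSSSSYY**CC*W"  # codons starting with U
    "LLLLPPPPHHQQRRRR"  # codons starting with C
    "IIIMTTTTNNKKSSRR"  # codons starting with A
    "VVVVAAAADDEEGGGG"  # codons starting with G
)

# product() varies its last position fastest, matching the string layout above.
CODON_TABLE = {
    "".join(codon): aa
    for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}

stop_codons = sorted(c for c, aa in CODON_TABLE.items() if aa == "*")
sense_codons = len(CODON_TABLE) - len(stop_codons)

print(len(CODON_TABLE))    # 64 possible codons
print(sense_codons)        # 61 codons that specify an amino acid
print(stop_codons)         # ['UAA', 'UAG', 'UGA']
print(CODON_TABLE["AUG"])  # M (methionine, also the usual start codon)
# Several amino acids have more than one codon; leucine (L) has six.
print(Counter(CODON_TABLE.values())["L"])  # 6
```

Building the table from a compact string keeps the codon ordering explicit and avoids typing out 64 dictionary entries by hand.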

DNA Replication:

DNA replication is a highly accurate process that occurs before cell division. During replication, the double-stranded DNA molecule unwinds, and each strand serves as a template for the synthesis of a new complementary strand. The result is two identical DNA molecules, each containing one original strand and one newly synthesized strand. This process ensures that genetic information is faithfully passed on to daughter cells.
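The base-pairing logic behind replication can be sketched in a few lines of Python, assuming each strand is a plain string of A, C, G, and T written in the 5′ to 3′ direction. Because the two strands of a DNA molecule run antiparallel, the strand built on a template is its reverse complement. The function names below are hypothetical; the sketch only illustrates semi-conservative copying and ignores the enzymes involved.

```python
# Base-pairing rules for DNA: A pairs with T, C pairs with G.
DNA_PAIRS = str.maketrans("ACGT", "TGCA")

def reverse_complement(strand):
    """Return the strand that pairs with `strand`, also written 5'->3'."""
    # Complement every base, then reverse, because paired strands are antiparallel.
    return strand.upper().translate(DNA_PAIRS)[::-1]

def replicate(top):
    """Semi-conservatively copy a duplex given by its top strand (5'->3').

    Each original strand acts as a template, so each daughter duplex is an
    (original strand, newly synthesized strand) pair.
    """
    bottom = reverse_complement(top)                   # existing partner strand
    daughter_1 = (top, reverse_complement(top))        # old top + new strand
    daughter_2 = (bottom, reverse_complement(bottom))  # old bottom + new strand
    return [daughter_1, daughter_2]

for old, new in replicate("ATGCGT"):
    print(old, new)
# ATGCGT ACGCAT
# ACGCAT ATGCGT
```

Both daughter molecules contain the same two sequences as the parent molecule, and each keeps one original strand paired with one new strand.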

Central Dogma:

The central dogma of molecular biology describes the flow of genetic information within a biological system. It consists of three main processes:

- DNA Replication: The copying of DNA to produce two identical DNA molecules.

- Transcription: The synthesis of RNA (for protein-coding genes, mRNA) from a DNA template.

- Translation: The conversion of mRNA into a functional protein.

The central dogma emphasizes the unidirectional flow of genetic information from DNA to RNA to protein, with rare exceptions such as reverse transcription in retroviruses.
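The DNA-to-RNA step of this flow can be sketched as a string transformation in Python. The sketch assumes DNA strands are strings written 5′ to 3′ and uses two terms not introduced above: the template strand, which RNA polymerase reads, and its partner, the coding strand, whose sequence matches the mRNA except that it has T where the mRNA has U. The function name `transcribe` and the example sequences are illustrative.

```python
# RNA polymerase pairs each template base with a ribonucleotide:
# A -> U, C -> G, G -> C, T -> A.
RNA_PAIRS = str.maketrans("ACGT", "UGCA")

def transcribe(template):
    """Return the mRNA (5'->3') complementary to a DNA template strand (5'->3')."""
    # Pair each base, then reverse, because the mRNA is antiparallel to its template.
    return template.upper().translate(RNA_PAIRS)[::-1]

coding = "ATGGCCTAA"    # illustrative coding-strand sequence
template = "TTAGGCCAT"  # its partner (template) strand, also written 5'->3'
mrna = transcribe(template)
print(mrna)                              # AUGGCCUAA
print(mrna == coding.replace("T", "U"))  # True
```

The final check shows why the coding strand is a convenient shorthand: the mRNA is simply the coding-strand sequence with U substituted for T.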

Regulation of Gene Expression:

Cells can regulate which genes are expressed and to what extent. This regulation is critical for controlling the production of specific proteins in response to changing conditions or developmental requirements. Various regulatory mechanisms, including transcription factors, epigenetic modifications, and microRNAs, play roles in gene expression control.

Mutations:

Mutations are changes in the DNA sequence that can occur naturally or due to external factors like radiation or chemicals. Mutations can have various effects, from being harmless to causing genetic diseases or contributing to the evolution of species. Mutations provide the raw material for genetic diversity.
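Comparing a reference sequence with a variant is one of the simplest ways to locate a mutation computationally. The Python sketch below assumes the two sequences are already aligned and of equal length, so it finds only single-base substitutions (point mutations), not insertions or deletions. The function name and example sequences are made up for illustration.

```python
def point_mutations(reference, variant):
    """List single-base substitutions between two aligned, equal-length sequences.

    Returns (position, reference_base, variant_base) tuples with 1-based positions.
    """
    if len(reference) != len(variant):
        raise ValueError("sequences must be aligned and of equal length")
    return [
        (position, ref_base, alt_base)
        for position, (ref_base, alt_base) in enumerate(zip(reference, variant), start=1)
        if ref_base != alt_base
    ]

print(point_mutations("ATGGAGTAA", "ATGGTGTAA"))  # [(5, 'A', 'T')]
```

Detecting insertions and deletions would require aligning the sequences first, which is why the function refuses inputs of different lengths instead of guessing.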

Protein Folding and Function:

A protein’s structure, determined by its amino acid sequence, is closely linked to its function. Proteins must fold into specific three-dimensional shapes to perform their roles effectively. Misfolded proteins can lead to diseases such as Alzheimer’s and prion diseases.

Enzymes:

Most enzymes are proteins that act as biological catalysts, speeding up chemical reactions in cells. Enzymes play essential roles in metabolism, digestion, and many other cellular processes.

Genomics and Proteomics:

Genomics is the study of an organism’s entire DNA, while proteomics focuses on the entire complement of proteins in an organism. These fields aim to understand how genes and proteins work together to control cellular processes and contribute to health and disease.

Understanding the interactions and functions of DNA, RNA, and proteins is fundamental to biology and has far-reaching implications for fields like medicine, genetics, biotechnology, and evolutionary biology. The study of these biomolecules continues to advance our knowledge of life and our ability to manipulate and harness biological processes for various applications.

DNA Repair:

Cells have mechanisms for repairing damaged DNA. DNA can be damaged by various factors, including radiation, chemicals, and errors in DNA replication. DNA repair mechanisms help maintain the integrity of the genetic code and prevent mutations that could lead to diseases like cancer.

Epigenetics refers to changes in gene expression that do not involve alterations to the DNA sequence itself. Epigenetic modifications, such as DNA methylation and histone modification, can influence whether a gene is turned on or off. Epigenetic changes are critical in development, and they can be influenced by environmental factors, diet, and lifestyle.

RNA Processing:

In eukaryotic cells, mRNA undergoes several modifications before it is ready for translation. These modifications include the addition of a 5′ cap and a poly-A tail, as well as the removal of introns (non-coding regions) through a process called splicing. These modifications enhance the stability and translatability of the mRNA.
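Splicing can be pictured as cutting the introns out of a string and joining the exons that remain. This minimal Python sketch assumes the exon coordinates are already known and given, in order, as 0-based, end-exclusive `(start, end)` pairs. It does not model the 5′ cap or the poly-A tail, and the sequence, coordinates, and function name are made up for illustration.

```python
def splice(pre_mrna, exons):
    """Join the exon segments of a pre-mRNA, dropping the introns between them.

    `exons` is a list of (start, end) pairs, 0-based and end-exclusive
    (Python slice convention), sorted by position.
    """
    return "".join(pre_mrna[start:end] for start, end in exons)

pre_mrna = "AUGAAA" + "GUCCUAG" + "CCCUAA"  # exon 1 + intron + exon 2
mature = splice(pre_mrna, [(0, 6), (13, 19)])
print(mature)  # AUGAAACCCUAA
```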

Post-translational Modifications:

Proteins can undergo post-translational modifications after they are synthesized. These modifications include phosphorylation, glycosylation, acetylation, and others. They can alter a protein’s activity, stability, or localization within the cell.

DNA serves as the basis for genetic variation among individuals in a population. Genetic diversity arises from mutations, genetic recombination, and other processes. This diversity is essential for species’ adaptation to changing environments and for the evolution of new traits over time.

Advances in molecular biology have enabled genetic engineering, which involves the deliberate manipulation of DNA to modify genes or create new genetic sequences. Genetic engineering has applications in agriculture, biotechnology, and medicine, including the production of genetically modified crops, gene therapy, and the development of recombinant proteins.

Protein Folding Diseases:

Misfolding of proteins is associated with various diseases, such as Alzheimer’s, Parkinson’s, and Huntington’s disease. The aggregation of misfolded proteins can lead to the formation of toxic protein aggregates that disrupt cellular function.

Proteomics and Systems Biology:

Proteomics aims to identify and characterize all the proteins in a given biological system. It plays a critical role in understanding complex cellular processes and identifying potential drug targets. Systems biology integrates data from genomics, proteomics, and other disciplines to model and understand the behavior of biological systems as a whole.

Evolutionary Significance:

DNA, RNA, and proteins have evolved over billions of years, and their structures and functions have been shaped by natural selection. The study of these molecules provides insights into the evolutionary history of life on Earth.

In summary, DNA, RNA, and proteins are the molecular building blocks of life. Their structures and functions are interconnected and are central to understanding the biology of living organisms. Advances in molecular biology continue to reveal the intricate details of how these molecules work together to drive the processes of life, and they have practical applications in fields ranging from medicine to biotechnology.

Genetic inheritance

Genetic inheritance refers to the process by which traits or characteristics are passed from one generation to the next through the transmission of genetic information. This genetic information is carried in the form of DNA, which contains the instructions for building and maintaining an organism. Genetic inheritance plays a fundamental role in determining an individual’s traits, including physical characteristics, susceptibility to diseases, and various other aspects of biology.

Key concepts and principles related to genetic inheritance include:

- Genes: Genes are segments of DNA that code for specific proteins or functional RNA molecules. Genes are the units of heredity and carry the instructions for traits.

- Alleles: Alleles are different versions of a gene that can exist at a specific locus (location) on a chromosome. Alleles can be dominant or recessive, influencing how a trait is expressed in an individual.

- Chromosomes: Chromosomes are long DNA molecules packaged with proteins. In diploid organisms they come in pairs, with one chromosome of each pair inherited from each parent. In humans, there are 23 pairs of chromosomes, including one pair of sex chromosomes (XX in females, XY in males).

- Genotype: The genotype refers to an individual’s genetic makeup, which includes the combination of alleles they inherit from their parents.

- Phenotype: The phenotype is the observable expression of an individual’s genotype, including their physical and biochemical traits.

- Mendelian Inheritance: Mendelian inheritance refers to the principles of genetic inheritance first described by Gregor Mendel in the 19th century. These principles include the laws of segregation and independent assortment, which explain how alleles are inherited and how traits are passed from parents to offspring.

- Dominance and Recessiveness: In cases where an individual has two different alleles for a gene (heterozygous), one allele may be dominant, and its trait will be expressed in the phenotype, while the other, recessive allele’s trait will be masked.

- Genetic Crosses: Tools such as Punnett squares are used to predict the possible genotypes and phenotypes of offspring, and their expected proportions, when the genotypes of the two parents are known.

- Incomplete Dominance: In some cases, neither allele is completely dominant, resulting in an intermediate phenotype in heterozygous individuals.

- Codominance: In codominance, both alleles are fully expressed in the phenotype of heterozygous individuals. An example is the ABO blood group system.

- Sex-Linked Inheritance: Some traits are carried on the sex chromosomes (X and Y), leading to sex-linked inheritance patterns. This can result in different inheritance patterns for males and females.

- Polygenic Inheritance: Many traits are influenced by multiple genes and exhibit a continuous range of phenotypes. Examples include height, skin color, and susceptibility to complex diseases like diabetes.

- Environmental Factors: While genetics plays a significant role in determining traits, environmental factors can also influence how genes are expressed. This is known as gene-environment interaction.

- Mutations: Genetic mutations can lead to changes in the DNA sequence and may result in new traits or genetic disorders. Some mutations are inherited, while others occur spontaneously.
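A Punnett square is easy to reproduce in code: each parent passes on one of its two alleles with equal probability (the law of segregation), so enumerating every pairing of parental alleles gives the expected offspring genotypes. The Python sketch below assumes a single gene with complete dominance, writes the dominant allele in uppercase and the recessive allele in lowercase, and uses hypothetical function names. Incomplete dominance or codominance would need a different phenotype rule.

```python
from collections import Counter
from itertools import product

def punnett_square(parent1, parent2):
    """Count offspring genotypes for a single-gene cross, e.g. "Aa" x "Aa"."""
    # Each cell of the square pairs one allele from each parent; sorting puts the
    # uppercase (dominant) allele first so "aA" and "Aa" count as one genotype.
    return Counter("".join(sorted(a + b)) for a, b in product(parent1, parent2))

def phenotype_counts(genotypes):
    """Group genotypes by phenotype, assuming complete dominance."""
    counts = Counter()
    for genotype, n in genotypes.items():
        has_dominant = any(allele.isupper() for allele in genotype)
        counts["dominant" if has_dominant else "recessive"] += n
    return counts

cross = punnett_square("Aa", "Aa")
print(sorted(cross.items()))                    # [('AA', 1), ('Aa', 2), ('aa', 1)]
print(sorted(phenotype_counts(cross).items()))  # [('dominant', 3), ('recessive', 1)]
```

For a cross between two heterozygotes, the familiar 1:2:1 genotype ratio and 3:1 phenotype ratio fall directly out of the enumeration.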

Genetic inheritance is a complex and fascinating area of biology that helps us understand how traits are passed from one generation to the next. It has practical applications in fields such as medical genetics, agriculture, and evolutionary biology, and it continues to be a subject of active research and discovery.

- Pedigree Analysis: Pedigree charts are used to study the inheritance of traits or genetic disorders within families over multiple generations. They can help identify patterns of inheritance, such as autosomal dominant, autosomal recessive, X-linked, or mitochondrial inheritance.

- Genetic Disorders: Genetic inheritance is central to understanding the causes of genetic disorders. These disorders result from mutations or abnormalities in an individual’s DNA. Examples include cystic fibrosis, sickle cell anemia, Huntington’s disease, and Down syndrome.

- Carrier Status: In some genetic disorders, individuals who inherit one normal allele and one disease-causing allele are carriers but do not exhibit the disorder’s symptoms. However, carriers can pass the disease allele to their offspring.

- Genetic Counseling: Genetic counselors provide information and support to individuals and families who may be at risk of inheriting genetic disorders. They help individuals make informed decisions about family planning and healthcare.

- Pharmacogenetics: Understanding genetic variation is essential in personalized medicine. Pharmacogenetics examines how an individual’s genetic makeup influences their response to medications. This information can guide treatment decisions and dosage adjustments.

- Population Genetics: Population genetics studies the distribution of genetic variation within and among populations. It explores topics such as genetic drift, gene flow, and natural selection, providing insights into the evolution and adaptation of species.

- Genome-wide Association Studies (GWAS): GWAS are used to identify genetic variations associated with complex traits or diseases in large populations. These studies have been instrumental in uncovering genetic factors behind conditions like diabetes, heart disease, and cancer.

- Epigenetic Inheritance: In addition to genetic inheritance, some epigenetic modifications can be passed from a cell to its daughter cells and, in certain cases, across generations of organisms. Epigenetic changes can affect gene expression and be influenced by environmental factors.

- Mitochondrial DNA (mtDNA): Mitochondria have their own DNA, separate from nuclear DNA, and in humans they are inherited almost exclusively from the mother. Mutations in mitochondrial DNA can lead to mitochondrial diseases that affect energy production in cells.

- Genome Editing: Advancements in genome editing technologies like CRISPR-Cas9 offer the potential to modify specific genes, correct genetic mutations, or introduce desired genetic changes. This has applications in both research and potential therapeutic interventions.

- Ethical and Legal Considerations: As our understanding of genetic inheritance and the capabilities of genetic technologies grow, ethical and legal issues related to genetic testing, genetic privacy, and gene editing become increasingly important topics of discussion.
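Population genetics usually describes genetic variation in terms of allele frequencies. As a minimal sketch, assuming a single gene with two alleles (A and a) in a diploid population, the frequencies can be computed from genotype counts by tallying alleles: each AA individual carries two copies of A, each Aa individual carries one of each, and each aa individual carries two copies of a. The function name and the counts are illustrative.

```python
def allele_frequencies(n_AA, n_Aa, n_aa):
    """Return (p, q): frequencies of alleles A and a from diploid genotype counts."""
    total_alleles = 2 * (n_AA + n_Aa + n_aa)  # two copies per individual
    p = (2 * n_AA + n_Aa) / total_alleles      # copies of A
    q = (2 * n_aa + n_Aa) / total_alleles      # copies of a
    return p, q

print(allele_frequencies(36, 48, 16))  # (0.6, 0.4)
```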

Understanding genetic inheritance is not only crucial for scientific research and medical applications but also for making informed decisions about family planning, healthcare, and genetic counseling. It is a dynamic field that continues to evolve as new discoveries are made about the complexities of genetics and its role in health and disease.

Lesson 2: Molecular Biology Fundamentals

DNA replication, transcription, and translation

DNA replication, transcription, and translation are fundamental processes in molecular biology that collectively enable the flow of genetic information from DNA to protein. These processes are essential for cell growth, development, and the synthesis of functional proteins. Here’s an overview of each process:

1. DNA Replication:

- Definition: DNA replication is the process by which a cell makes an identical copy of its DNA, ensuring that genetic information is faithfully passed from a parent cell to its daughter cells during cell division.

- Key Features:

- In eukaryotic cells, DNA replication occurs during the S (synthesis) phase of the cell cycle.

- It involves the separation of the two DNA strands and the synthesis of complementary strands.

- DNA replication is semi-conservative, meaning each new DNA molecule consists of one parental (old) strand and one newly synthesized (new) strand.

- Steps of DNA Replication:

- Initiation: DNA unwinds at the origin of replication, forming a replication bubble. Enzymes called helicases help in separating the DNA strands.

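- Elongation: DNA polymerase enzymes extend short RNA primers (laid down by primase), adding nucleotides complementary to the template strands. Because DNA polymerase can only synthesize in the 5′ to 3′ direction and the two strands are antiparallel, one strand (the leading strand) is made continuously, while the other (the lagging strand) is made discontinuously as short Okazaki fragments that are later joined by DNA ligase.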

- Termination: DNA replication is terminated when the replication forks meet or reach the end of the DNA molecule.

2. Transcription:

- Definition: Transcription is the process by which a complementary RNA molecule (mRNA) is synthesized from a DNA template. This mRNA carries the genetic information from DNA to the ribosome for protein synthesis.

- Key Features:

- Transcription takes place in the nucleus of eukaryotic cells and in the cytoplasm of prokaryotic cells.

- The enzyme RNA polymerase is responsible for catalyzing the synthesis of mRNA.

- Transcription includes three main stages: initiation, elongation, and termination.

- Steps of Transcription:

- Initiation: RNA polymerase binds to a specific region on DNA called the promoter. This marks the starting point for transcription.

- Elongation: RNA polymerase moves along the DNA template, adding complementary ribonucleotides to the growing mRNA strand.

- Termination: Transcription concludes when RNA polymerase reaches a termination signal in the DNA, leading to the release of the newly synthesized mRNA molecule.

3. Translation:

- Definition: Translation is the process by which the genetic code carried by mRNA is converted into a specific sequence of amino acids, forming a protein. Translation takes place at ribosomes, molecular machines made of ribosomal RNA and proteins.

- Key Features:

- Translation requires transfer RNA (tRNA) molecules, each of which carries a specific amino acid.

- The genetic code is read in groups of three nucleotides called codons; most codons specify an amino acid, while three are stop signals.

- The ribosome acts as the molecular machine that facilitates the coupling of amino acids in the correct order to form a polypeptide chain.

- Steps of Translation:

- Initiation: The small ribosomal subunit binds to the mRNA, and an initiator tRNA carrying methionine pairs with the start codon (AUG). The large ribosomal subunit then joins to form the complete ribosome.

- Elongation: The ribosome moves along the mRNA, matching each codon with the appropriate tRNA, which delivers the corresponding amino acid. Peptide bonds form between adjacent amino acids, creating a growing polypeptide chain.

- Termination: Translation concludes when a stop codon (UAA, UAG, or UGA) is reached. No tRNA molecule matches these codons. Instead, a release factor binds, causing the ribosome to release the completed polypeptide chain.
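The three stages of translation map onto a simple loop over codons. The Python sketch below is a simplification: it assumes the first AUG in the mRNA is the start codon, uses the standard genetic code (with `*` for stop), and expects an uppercase string of A, C, G, and U. The names `CODON_TABLE` and `translate` are illustrative, not a library API.

```python
from itertools import product

# Standard genetic code, first codon position varying slowest ("*" = stop).
CODON_TABLE = dict(zip(
    ("".join(c) for c in product("UCAG", repeat=3)),
    "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG",
))

def translate(mrna):
    """Translate from the first AUG up to (not including) the first stop codon.

    Returns one-letter amino acid codes, or "" if there is no AUG.
    """
    start = mrna.find("AUG")                  # initiation at the start codon
    if start == -1:
        return ""
    protein = []
    for i in range(start, len(mrna) - 2, 3):  # elongation, one codon at a time
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "*":                 # termination at a stop codon
            break
        protein.append(amino_acid)
    return "".join(protein)

print(translate("GGAUGGCCAAAUGAUU"))  # MAK
```

Bases before the start codon are skipped, and the loop stops at UGA, mirroring how release factors end translation at a stop codon rather than at the end of the mRNA.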

Together, DNA replication, transcription, and translation are central processes that link the genetic information encoded in DNA to the synthesis of proteins, which are the primary effectors of cellular function and structure. This flow of genetic information is often referred to as the central dogma of molecular biology.

Central dogma of molecular biology

The central dogma of molecular biology is a fundamental framework that describes the flow of genetic information within a biological system. It outlines the sequential processes through which genetic information stored in DNA is used to produce functional proteins, which are the primary players in the structure and function of living organisms. The central dogma was proposed by Francis Crick in 1958 and represents a foundational concept in molecular biology. It consists of three main steps:

1. DNA Replication:

- DNA replication is the process by which a cell creates an identical copy of its DNA. This occurs during the cell cycle, specifically in the S phase. The key features of DNA replication include:

- The original DNA molecule unwinds and separates into two complementary strands.

- Each separated strand serves as a template for the synthesis of a new complementary strand.

- DNA polymerases, along with other enzymes, facilitate the addition of nucleotide building blocks to create the new strands.

- The result is two identical DNA molecules, each consisting of one original (parental) strand and one newly synthesized (daughter) strand.

2. Transcription:

- Transcription is the process by which an RNA molecule is synthesized using one strand of DNA as a template. For protein-coding genes, the product is messenger RNA (mRNA). The main points regarding transcription are as follows:

- Transcription takes place in the nucleus of eukaryotic cells and in the cytoplasm of prokaryotic cells.

- RNA polymerase is the enzyme responsible for catalyzing the synthesis of mRNA.

- During transcription, the DNA molecule unwinds locally, and the RNA polymerase adds complementary ribonucleotides to create an mRNA molecule.

- mRNA carries the genetic information from DNA to the ribosome for protein synthesis.

3. Translation:

- Translation is the process by which the genetic code carried by mRNA is converted into a specific sequence of amino acids, ultimately forming a protein. The key aspects of translation include:

- Translation occurs on ribosomes.

- Transfer RNA (tRNA) molecules, each carrying a specific amino acid, deliver amino acids to the ribosome.

- The genetic code is read in groups of three nucleotides called codons, with each codon specifying an amino acid or a stop signal.

- The ribosome moves along the mRNA, matching each codon with the appropriate tRNA, which delivers the corresponding amino acid.

- Peptide bonds form between adjacent amino acids, creating a growing polypeptide chain.

- Translation concludes when a stop codon is reached, causing the ribosome to release the completed polypeptide chain.
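Base pairing makes replication and transcription easy to express as string operations. The sketch below assumes sequences are written 5′ to 3′ and contain only A, C, G, T; `replicate` and `transcribe` are hypothetical helper names. Because a new strand is antiparallel to its template, the complement is reversed so that the result also reads 5′ to 3′.

```python
# Watson-Crick pairing for DNA: A-T and C-G.
DNA_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def replicate(template: str) -> str:
    """Return the strand synthesized on a DNA template, written 5'->3'.

    The new strand is complementary and antiparallel to the template,
    so it is the reverse complement of the template.
    """
    return template.upper().translate(DNA_COMPLEMENT)[::-1]


def transcribe(template_strand: str) -> str:
    """Return the mRNA (5'->3') transcribed from a DNA template strand (5'->3')."""
    # RNA polymerase pairs template A with U instead of T, so the mRNA is the
    # reverse complement of the template with T replaced by U.
    return replicate(template_strand).replace("T", "U")


coding = "ATGGCCTTTAAATGA"
template = replicate(coding)
print(template)              # TCATTTAAAGGCCAT
print(transcribe(template))  # AUGGCCUUUAAAUGA
```

Transcribing the template strand yields an mRNA with the same sequence as the coding strand, with U in place of T, which is why the last line reproduces the original coding sequence.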

The central dogma emphasizes the unidirectional flow of genetic information, proceeding from DNA replication to transcription and, finally, translation. It is important to note that while this flow of information is generally linear, there are exceptions and complexities, such as reverse transcription in retroviruses and the role of regulatory elements, non-coding RNAs, and epigenetic modifications in gene expression. Nevertheless, the central dogma provides a fundamental framework for understanding how genetic information is used to build and maintain the structures and functions of living organisms.

4. Reverse Transcription:

While the central dogma typically describes the flow of genetic information from DNA to RNA to protein, some exceptions exist. One of the most notable exceptions is reverse transcription, which occurs in retroviruses like HIV. In reverse transcription, the viral RNA genome is used as a template to synthesize complementary DNA (cDNA) by the enzyme reverse transcriptase. This cDNA can then be integrated into the host cell’s genome.

5. Genetic Regulation:

The central dogma doesn’t fully encompass the complex regulation of gene expression. Gene regulation involves mechanisms that control when and to what extent specific genes are transcribed into mRNA and subsequently translated into proteins. Regulatory DNA elements such as enhancers and silencers, together with regulatory proteins such as transcriptional activators and repressors, play essential roles in gene regulation, allowing cells to respond to environmental cues and maintain homeostasis.

6. Non-Coding RNAs:

While the central dogma focuses on protein-coding genes, a significant portion of the genome does not code for proteins. Non-coding RNAs (ncRNAs), such as microRNAs (miRNAs) and long non-coding RNAs (lncRNAs), have been identified and found to play crucial roles in gene regulation, RNA processing, and various cellular processes. They do not follow the traditional path of mRNA translation to proteins.

7. Epigenetics:

Epigenetic modifications, such as DNA methylation and histone modifications, can influence gene expression without changing the DNA sequence itself. Epigenetic changes can be inherited and can have a profound impact on gene regulation, development, and disease.

8. Post-Translational Modifications:

Proteins synthesized during translation can undergo post-translational modifications (PTMs) that affect their function, localization, and stability. PTMs include phosphorylation, glycosylation, acetylation, and many others, contributing to the complexity of protein regulation.

9. Feedback Loops and Regulatory Networks:

Cells employ complex regulatory networks involving feedback loops, signaling pathways, and interactions between multiple genes and proteins. These networks allow for dynamic responses to environmental changes and coordination of cellular processes.

In summary, while the central dogma provides a foundational framework for understanding the flow of genetic information, the biology of gene expression is far more intricate. Cellular processes are governed by a network of interactions involving DNA, RNA, proteins, and regulatory elements, all of which work together to enable the precise control of gene expression and the maintenance of cellular functions. Advances in molecular biology continue to reveal the depth and complexity of these processes, providing insights into health, disease, and evolution.

10. Genetic Variation:

Genetic variation arises from mutations, which are changes in the DNA sequence. Mutations can result from errors during DNA replication, from exposure to mutagenic agents, or from other natural genetic processes. These variations can lead to diversity among individuals and contribute to evolution.

11. Evolutionary Implications:

The central dogma has significant implications for our understanding of evolutionary biology. The accumulation of genetic changes, including mutations, over time can lead to the evolution of new traits and species. Natural selection acts on variations in genes, and changes in DNA sequences play a critical role in the adaptation and divergence of species.

12. Applications in Biotechnology:

The central dogma has practical applications in biotechnology and genetic engineering. Scientists can manipulate DNA, RNA, and protein molecules to create genetically modified organisms (GMOs), produce recombinant proteins for medical purposes, and design gene therapies to treat genetic diseases.

13. RNA World Hypothesis:

The RNA World hypothesis suggests that RNA, rather than DNA, may have played a central role in early life forms. This theory proposes that RNA molecules could have acted both as genetic information carriers and as catalysts (ribozymes), bridging the gap between genetic information storage and catalysis in the prebiotic world.

14. Synthetic Biology:

Advancements in molecular biology and gene editing technologies have given rise to the field of synthetic biology. Scientists can now design and construct artificial DNA sequences, synthetic genes, and engineered organisms to perform specific tasks, such as producing biofuels or synthesizing pharmaceuticals.

15. Ethical Considerations:

The ability to manipulate genetic information has raised ethical questions about the potential consequences of genetic engineering, gene editing, and cloning. These debates include issues related to genetic privacy, informed consent, and the potential misuse of biotechnology.

16. Medical Implications:

Understanding the central dogma is critical in the context of medical research and healthcare. Genetic information is used in diagnostics, personalized medicine, and the development of treatments for genetic disorders and diseases with a genetic component.

17. Environmental and Ecological Impact:

Genetic information plays a role in understanding the genetics of populations and species. It can be used in conservation efforts, studying the impact of environmental changes on genetic diversity, and assessing the genetic health of populations.
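Point 10 can be made concrete: a single-base change in a coding sequence may leave the amino acid unchanged (synonymous), replace it with another (missense), or create a stop codon (nonsense). This sketch assumes an in-frame DNA coding sequence that starts at its first codon and uses the standard genetic code; `classify_substitution` is an illustrative name, and real variant annotation tools handle many more cases, such as insertions, deletions, and splice-site changes.

```python
# Standard genetic code on the DNA coding strand, T, C, A, G order ("*" = stop).
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = dict(
    zip((a + b + c for a in BASES for b in BASES for c in BASES), AMINO_ACIDS)
)


def classify_substitution(cds: str, position: int, new_base: str) -> str:
    """Classify a single-base substitution at a 0-based position in a coding sequence."""
    codon_start = position - position % 3  # first base of the affected codon
    old_codon = cds[codon_start:codon_start + 3].upper()
    offset = position - codon_start
    new_codon = old_codon[:offset] + new_base.upper() + old_codon[offset + 1:]
    old_aa, new_aa = CODON_TABLE[old_codon], CODON_TABLE[new_codon]
    if old_aa == new_aa:
        return "synonymous"
    if new_aa == "*":
        return "nonsense"
    if old_aa == "*":
        return "stop-loss"
    return "missense"


cds = "ATGGAGAAG"  # Met-Glu-Lys
print(classify_substitution(cds, 5, "A"))  # synonymous (GAG -> GAA, still Glu)
print(classify_substitution(cds, 4, "T"))  # missense (GAG -> GTG, Glu -> Val)
print(classify_substitution(cds, 3, "T"))  # nonsense (GAG -> TAG, stop)
```

The missense example changes GAG (glutamic acid) to GTG (valine), the same codon change that underlies sickle cell anemia.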

In conclusion, the central dogma of molecular biology provides a foundational framework for understanding how genetic information is stored, replicated, transcribed into RNA, and translated into proteins. While it serves as a fundamental model, it is complemented by the recognition of the complexity of gene regulation, epigenetic modifications, and the broader network of molecular interactions that govern biological processes. Advances in molecular biology continue to expand our knowledge and capabilities in understanding and manipulating genetic information.

Genetic code

The genetic code is a set of rules that dictates how the information contained in DNA or RNA is translated into the sequence of amino acids in a protein. It serves as a nearly universal language shared by living organisms on Earth, allowing the genetic instructions encoded in DNA or RNA to be decoded and expressed as functional proteins. Here are the key features and principles of the genetic code:

1. Triplet Codons:

- The genetic code is read in sequences of three nucleotides, known as codons. Each codon corresponds to a specific amino acid or a signal for the start or termination of protein synthesis.

2. Redundancy (Degeneracy):

- There are 4 × 4 × 4 = 64 possible codons (four bases, A, U, G, and C, taken three at a time). Of these, 61 specify the 20 standard amino acids and 3 are stop signals, so most amino acids are represented by more than one codon. For example, the amino acid leucine is encoded by six different codons.

3. Start and Stop Codons:

- AUG (adenine-uracil-guanine) serves as the start codon, indicating the beginning of protein synthesis. It also codes for the amino acid methionine.

- Three codons (UAA, UAG, and UGA) are stop codons or termination codons. When the ribosome encounters one of these codons during translation, protein synthesis ceases.

4. Unambiguity:

- Each codon specifies only one amino acid (or a stop signal), so the code is unambiguous. For example, the codon AUG codes for methionine, and only methionine.

5. Universality:

- The genetic code is nearly universal, meaning that the same codons generally code for the same amino acids in all organisms, from bacteria to humans. This universality is one of the key pieces of evidence for the common ancestry of all life on Earth.

6. Non-Overlapping:

- Codons are read in a non-overlapping manner, meaning that each nucleotide in an mRNA sequence is part of only one codon. This ensures that there is no ambiguity in the reading of the genetic code.

7. Reading Frame:

- The genetic code is read in a specific reading frame, with each codon following the one before it. Shifting the reading frame by adding or deleting nucleotides can completely change the protein sequence.

8. Codon-tRNA Matching:

- Transfer RNA (tRNA) molecules are responsible for bringing the correct amino acid to the ribosome during translation. Each tRNA has an anticodon region that is complementary to a specific codon, ensuring that the correct amino acid is added to the growing polypeptide chain.

9. Wobble Hypothesis:

- The third position (3′ end) of a codon, where most of the code's redundancy lies, often tolerates non-standard base pairing with the first (5′) position of the tRNA anticodon. This is known as the “wobble” position, and it allows some tRNAs to recognize several codons that differ only at the third position.
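Several of these properties can be checked directly by building the standard codon table. The sketch below uses the conventional U, C, A, G ordering with `*` for stop codons; `codons_in_frame` is an illustrative helper. It counts codons per amino acid to show redundancy, shows that the third position of the four GCN codons does not change the amino acid, and splits an mRNA into non-overlapping codons before and after a one-nucleotide insertion.

```python
from collections import Counter

# Standard genetic code in U, C, A, G order: first base slowest, third fastest.
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
CODON_TABLE = dict(zip(CODONS, AMINO_ACIDS))

# Redundancy: number of codons assigned to each amino acid ("*" = stop).
codons_per_aa = Counter(CODON_TABLE.values())
print(len(CODON_TABLE))        # 64 codons
print(codons_per_aa["*"])      # 3 stop codons
print(len(codons_per_aa) - 1)  # 20 amino acids
print(codons_per_aa["L"])      # 6 codons for leucine

# Third-position flexibility: GCU, GCC, GCA and GCG all encode alanine.
print({CODON_TABLE["GC" + b] for b in BASES})  # {'A'}


def codons_in_frame(seq: str, frame: int = 0) -> list[str]:
    """Split seq into non-overlapping triplets, starting at offset 0, 1 or 2."""
    return [seq[i:i + 3] for i in range(frame, len(seq) - 2, 3)]


print(codons_in_frame("AUGGCCUUUAAA"))   # ['AUG', 'GCC', 'UUU', 'AAA']
print(codons_in_frame("AUGAGCCUUUAAA"))  # ['AUG', 'AGC', 'CUU', 'UAA'] after inserting A
```

After the single inserted A, every downstream codon changes and a premature UAA stop appears, which is the frameshift effect described under Reading Frame.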

The genetic code is a remarkable system that allows the information stored in DNA or RNA to be converted into the sequence of amino acids in proteins, which are the workhorses of cellular function. Understanding the genetic code is fundamental to molecular biology, genetics, and biotechnology and has profound implications for our understanding of biology and the design of genetic engineering techniques.

10. Variations in the Genetic Code:

While the genetic code is highly conserved across most organisms, some variations and exceptions exist. For example:

- In the mitochondria of many organisms and in certain microorganisms, some codons are read differently from the standard code. In vertebrate mitochondria, for example, UGA specifies tryptophan instead of acting as a stop codon.

- Selenocysteine and pyrrolysine are amino acids incorporated into proteins by recoding stop codons in specific sequence contexts: UGA for selenocysteine and UAG for pyrrolysine.

11. The Universal Nature of the Code:

The universality of the genetic code suggests a common ancestry of all life forms on Earth. The fact that the same codons are used to code for the same amino acids across species reinforces the idea of a shared evolutionary history.

12. Evolutionary Perspective:

The genetic code itself is believed to have evolved over time. It is thought that early life forms may have used simpler versions of the code, and as life became more complex, the code evolved to accommodate the diverse array of amino acids and functions observed in modern organisms.

13. Codon Bias:

Codon usage bias refers to the preference for certain codons over others when multiple codons code for the same amino acid. Organisms may show variations in their codon usage preferences, which can be influenced by factors such as mutational biases, tRNA availability, and translational efficiency.

14. Genetic Code and Disease:

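Mutations in a gene's DNA sequence, rather than in the genetic code itself, can lead to genetic disorders or diseases. For example, a single-nucleotide substitution in the β-globin gene (HBB) replaces glutamic acid with valine and causes sickle cell anemia. The most common cystic fibrosis mutation, ΔF508, instead deletes three nucleotides, removing a single phenylalanine from the CFTR protein.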

15. Synthetic Biology and Genetic Code Expansion:

In synthetic biology, researchers have expanded the genetic code by engineering organisms to incorporate synthetic amino acids. This has applications in the development of novel proteins with unique properties or functions.

16. Genetic Code and Biotechnology:

The understanding of the genetic code is fundamental to biotechnology applications such as gene cloning, genetic engineering, and recombinant DNA technology. Because the code is nearly universal, a gene from one organism can be expressed in another; human insulin, for example, is produced this way in bacteria.

17. Genetic Code in Drug Development:

Pharmaceutical research often involves understanding the genetic code to identify potential drug targets or to design therapies that target specific genes or proteins involved in disease.
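Variant codes (point 10) are usually handled in software as small edits to the standard table. The sketch assumes the vertebrate mitochondrial code (NCBI translation table 2), which differs from the standard code at four codons: AUA (methionine), UGA (tryptophan), and AGA and AGG (stop). It translates from the first base and ignores alternative start codons; `translate` here is an illustrative helper.

```python
# Standard genetic code in U, C, A, G order ("*" = stop).
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
STANDARD = dict(
    zip((a + b + c for a in BASES for b in BASES for c in BASES), AMINO_ACIDS)
)

# Vertebrate mitochondrial code (NCBI translation table 2): four reassigned codons.
VERT_MITO = {**STANDARD, "AUA": "M", "UGA": "W", "AGA": "*", "AGG": "*"}


def translate(mrna: str, table: dict[str, str]) -> str:
    """Translate from the first base until a stop codon, using the given table."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = table[mrna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


seq = "AUGAUAUGAAAA"
print(translate(seq, STANDARD))   # MI   (UGA read as stop)
print(translate(seq, VERT_MITO))  # MMWK (AUA -> M, UGA -> W)
```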

In summary, the genetic code is a remarkable and highly conserved system that underlies the translation of genetic information into proteins, enabling the functions of all living organisms. Its universality, exceptions, and variations are subjects of ongoing research and have profound implications for fields such as evolutionary biology, biotechnology, medicine, and synthetic biology.
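Codon usage bias (point 13) is measured by counting synonymous codons. This sketch assumes an in-frame DNA coding sequence and the standard code, and reports, for each amino acid, the fraction of its occurrences encoded by each codon. `codon_usage` is an illustrative name; published analyses pool many genes and often use indices such as relative synonymous codon usage (RSCU) or the codon adaptation index (CAI).

```python
from collections import Counter, defaultdict

# Standard genetic code on the DNA coding strand, T, C, A, G order ("*" = stop).
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = dict(
    zip((a + b + c for a in BASES for b in BASES for c in BASES), AMINO_ACIDS)
)


def codon_usage(cds: str) -> dict[str, dict[str, float]]:
    """Fraction of each amino acid's occurrences encoded by each synonymous codon."""
    counts = Counter(cds[i:i + 3] for i in range(0, len(cds) - 2, 3))
    # Total number of codons per amino acid, pooling synonymous codons.
    per_aa = Counter()
    for codon, n in counts.items():
        per_aa[CODON_TABLE[codon]] += n
    usage = defaultdict(dict)
    for codon, n in counts.items():
        aa = CODON_TABLE[codon]
        usage[aa][codon] = n / per_aa[aa]
    return dict(usage)


print(codon_usage("CTGCTGCTGTTAAAAAAG"))
# {'L': {'CTG': 0.75, 'TTA': 0.25}, 'K': {'AAA': 0.5, 'AAG': 0.5}}
```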

Module 2: Basics of Bioinformatics

Lesson 3: What is Bioinformatics?

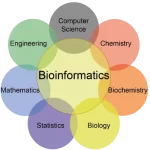

Computers play a crucial and multifaceted role in the field of biology, revolutionizing research, data analysis, modeling, and more. The intersection of computer science and biology, often referred to as computational biology or bioinformatics, has become increasingly important for advancing our understanding of biological processes and solving complex biological problems. Here are some key roles that computers play in biology:

1. Genomic Sequencing and Analysis:

- High-throughput sequencing technologies generate vast amounts of genomic data. Computers are essential for processing and analyzing these data, identifying genes, regulatory elements, and variations in DNA sequences.

2. Protein Structure Prediction:

- Computers are used to predict the three-dimensional structures of proteins, which is crucial for understanding their functions and interactions. This field is known as structural bioinformatics.

3. Drug Discovery and Design:

- Computational methods are employed in virtual screening and molecular docking to identify potential drug candidates and assess their interactions with biological targets.

4. Gene Expression Analysis:

- Computational tools help analyze gene expression data, enabling researchers to understand the role of genes in various biological processes and diseases.

5. Evolutionary Biology:

- Phylogenetic analysis, molecular clock estimation, and comparative genomics rely on computational algorithms to study the evolutionary relationships among species.

6. Systems Biology:

- Computers are used to model and simulate complex biological systems, such as metabolic pathways and regulatory networks, to gain insights into their behavior and responses to perturbations.

7. Molecular Modeling and Simulation:

- Computational methods, including molecular dynamics simulations and bioinformatics tools, are employed to study the structure and function of biomolecules.

8. Next-Generation Sequencing Data Analysis:

- Handling and analyzing data from next-generation sequencing (NGS) platforms require sophisticated computational tools for tasks like variant calling, transcriptomics, and epigenomics.

9. Phylogenetics and Phylogenomics:

- Computational algorithms are used to construct phylogenetic trees and analyze genomic data to understand the evolutionary history of species.

10. Personalized Medicine:

- Computers assist in analyzing patient-specific genomic data to tailor medical treatments, predict disease risks, and identify personalized therapeutic strategies.

11. Metagenomics:

- Metagenomics studies complex microbial communities by analyzing DNA sequences from environmental samples. Computational tools help identify and characterize the organisms present.

12. Big Data Handling:

- Biological research generates enormous amounts of data. Computers are essential for storing, managing, and analyzing this data efficiently.

13. Bioinformatics Databases:

- Numerous bioinformatics databases and resources, such as GenBank and the Protein Data Bank (PDB), are accessible online, facilitating research and data retrieval.

14. Data Visualization:

- Computers are used to create visual representations of biological data, aiding in the interpretation of complex datasets.

15. Artificial Intelligence and Machine Learning:

- AI and machine learning techniques are applied to various biological problems, such as image analysis, predicting protein structures, and identifying patterns in genomics data.

16. Robotics:

- Laboratory robots are controlled by computer systems for tasks like high-throughput screening, sample preparation, and liquid handling.

17. Computational Ecology:

- Computers help model and simulate ecological systems, contributing to the study of biodiversity, population dynamics, and ecosystem behavior.
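As a small example of the sequence analysis in point 1, a classic first step in locating genes is to scan for open reading frames (ORFs): stretches that begin with ATG and run to an in-frame stop codon. This sketch only scans the three forward frames and applies a minimum length; `find_orfs` and `min_codons` are illustrative names, and real gene finders also scan the reverse complement and use statistical models.

```python
STOP_CODONS = {"TAA", "TAG", "TGA"}


def find_orfs(dna: str, min_codons: int = 2) -> list[tuple[int, int]]:
    """Return (start, end) coordinates of forward-strand ORFs.

    Each ORF runs from an ATG to the first in-frame stop codon, inclusive;
    end is exclusive, as in Python slicing. min_codons counts the codons
    before the stop, including the ATG.
    """
    dna = dna.upper()
    orfs = []
    for frame in range(3):
        start = None
        for i in range(frame, len(dna) - 2, 3):
            codon = dna[i:i + 3]
            if start is None and codon == "ATG":
                start = i  # open an ORF at the first ATG in this frame
            elif start is not None and codon in STOP_CODONS:
                if (i - start) // 3 >= min_codons:
                    orfs.append((start, i + 3))
                start = None  # look for the next ATG in this frame
    return sorted(orfs)


dna = "CCATGAAATTTTAGGG"
for start, end in find_orfs(dna):
    print(start, end, dna[start:end])  # 2 14 ATGAAATTTTAG
```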

Computational biology and bioinformatics continue to grow in importance as biological research becomes increasingly data-driven and multidisciplinary. The integration of computational methods with experimental biology accelerates discoveries and has practical applications in medicine, biotechnology, conservation, and beyond.

Data types in bioinformatics

In bioinformatics, a wide range of data types are encountered and analyzed to gain insights into biological processes, genetics, genomics, and more. These data types are diverse and can be complex, often requiring specialized tools and techniques for their management and analysis. Here are some common data types in bioinformatics:

1. Sequence Data:

- DNA sequences: Represent an organism's genetic information.

- RNA sequences: Include mRNA, tRNA, rRNA, and non-coding RNAs.

- Protein sequences: Represent the amino acid sequences of proteins.

2. Genomic Data:

- Whole-genome sequences: The complete set of an organism’s DNA.

- Exome sequences: Sequences of exons, the protein-coding regions of genes.

- Variant data: Information on single nucleotide polymorphisms (SNPs), insertions, deletions, and structural variations.

3. Transcriptomic Data:

- mRNA expression profiles: Measure gene expression levels in different tissues or conditions.

- Alternative splicing data: Identify different mRNA isoforms generated from a single gene.

- Non-coding RNA data: Explore the expression and function of non-coding RNAs, such as microRNAs and long non-coding RNAs (lncRNAs).

4. Proteomic Data:

- Mass spectrometry data: Identify and quantify proteins in biological samples.

- Protein-protein interaction data: Explore the interactions among proteins in cellular networks.

- Structural data: Information on protein structures, including 3D coordinates.

5. Functional Annotation Data:

- Gene Ontology (GO) terms: Describe the functions and processes associated with genes and proteins.

- Pathway data: Represent biological pathways, such as metabolic pathways or signaling cascades.

6. Epigenomic Data:

- DNA methylation data: Reveal patterns of DNA methylation that can influence gene expression.

- Histone modification data: Describe epigenetic modifications on histone proteins.

- Chromatin accessibility data: Indicate regions of the genome that are open or closed for transcription.

7. Metagenomic Data:

- Sequences from mixed microbial communities in environmental samples, such as soil or the human gut.

8. Structural Biology Data:

- X-ray crystallography data: Determine the 3D structures of biological macromolecules.

- NMR spectroscopy data: Obtain structural information about proteins and nucleic acids.

9. Pharmacological and Drug Data:

- Information about chemical compounds, drug targets, drug interactions, and pharmacokinetics.

10. Evolutionary Data:

- Phylogenetic trees and sequence alignments to study evolutionary relationships.

- Homology and orthology data to identify genes with shared ancestry.

11. Next-Generation Sequencing Data:

- Data generated from technologies like RNA-seq, ChIP-seq, and ATAC-seq for various functional genomics studies.

12. Biological Images:

- Microscopy images, including fluorescence microscopy, electron microscopy, and confocal microscopy, for visualizing cellular and subcellular structures.

13. Clinical and Health Data:

- Patient data, electronic health records, and clinical trial data for personalized medicine and epidemiological studies.

14. Literature and Text Data:

- Text-mining tools to extract information from scientific literature and databases.

15. Metabolic Data:

- Metabolomics data, which includes the measurement of metabolites in biological samples.

16. Biological Networks:

- Network data representing interactions among genes, proteins, metabolites, or other biological entities.
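A recurring practical question with sequence data is what kind of sequence a string holds. The sketch below guesses from the alphabet alone, assuming one-letter codes with no gaps or ambiguity symbols; `guess_sequence_type` is an illustrative name.

```python
DNA = set("ACGT")
RNA = set("ACGU")
PROTEIN = set("ACDEFGHIKLMNPQRSTVWY")  # the 20 standard one-letter amino acid codes


def guess_sequence_type(seq: str) -> str:
    """Guess 'DNA', 'RNA', 'protein', or 'unknown' from the letters used."""
    letters = set(seq.upper())
    if not letters:
        return "unknown"
    # Checked in order, so a string valid in several alphabets gets the first match.
    if letters <= DNA:
        return "DNA"
    if letters <= RNA:
        return "RNA"
    if letters <= PROTEIN:
        return "protein"
    return "unknown"


print(guess_sequence_type("ACGTTGCA"))  # DNA
print(guess_sequence_type("acguuagc"))  # RNA
print(guess_sequence_type("MKTAYIAK"))  # protein
```

The check is ambiguous by nature: a short peptide such as ACGT would be reported as DNA, and a DNA read containing the IUPAC ambiguity code N would be reported as protein, because N is also the code for asparagine. Real pipelines usually rely on the file format or metadata instead.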

Handling and analyzing these diverse data types in bioinformatics often require specialized software tools, algorithms, databases, and computational resources. Bioinformaticians and researchers use these resources to process, integrate, visualize, and interpret biological data to answer important biological questions and gain insights into the complexity of living organisms.

Importance in modern science

Bioinformatics plays a pivotal and increasingly essential role in modern science, particularly in biological and biomedical research. Its importance can be summarized in several key areas:

- Genomic and Genetic Research: Bioinformatics tools enable the analysis of massive genomic and genetic datasets, helping researchers identify genes, regulatory elements, variations, and associations with diseases. This information is crucial for understanding the genetic basis of traits, diseases, and evolution.

- Personalized Medicine: Bioinformatics contributes to the development of personalized medicine by analyzing individual genomic data to tailor medical treatments, predict disease risks, and optimize therapeutic strategies based on a patient’s genetic profile.

- Drug Discovery: Bioinformatics aids in drug discovery and design by identifying potential drug candidates, predicting their interactions with biological targets, and optimizing drug properties. This can accelerate the drug development process and reduce costs.

- Functional Genomics: Functional genomics studies, enabled by bioinformatics, help decipher the roles of genes, non-coding RNAs, and regulatory elements in various biological processes, offering insights into disease mechanisms and potential therapeutic targets.

- Proteomics and Structural Biology: Bioinformatics plays a vital role in analyzing protein sequences, predicting their structures, and understanding protein-protein interactions. This knowledge is crucial for drug design and understanding protein function.

- Disease Research and Diagnostics: Bioinformatics tools aid in identifying disease-associated genes, biomarkers, and potential therapeutic targets. They also facilitate the development of diagnostic tests and disease risk assessments.

- Phylogenetics and Evolution: Bioinformatics is indispensable for reconstructing evolutionary relationships, studying biodiversity, and tracing the origins of species. It helps us understand the evolution of life on Earth.

- Biomedical Imaging: Image analysis and processing in bioinformatics contribute to fields such as medical imaging, microscopy, and radiomics. These techniques enhance disease diagnosis and monitoring.

- Metagenomics and Microbiome Research: Bioinformatics is vital in analyzing complex microbial communities, studying the human microbiome, and understanding the roles of microorganisms in health and disease.

- Systems Biology: Bioinformatics enables the modeling and simulation of complex biological systems, allowing researchers to investigate cellular processes, metabolic pathways, and regulatory networks.

- Big Data Management: With the increasing volume of biological data, bioinformatics is essential for managing, storing, and retrieving vast datasets efficiently.

- Environmental and Ecological Studies: Bioinformatics contributes to environmental research by analyzing DNA sequences from environmental samples, tracking changes in ecosystems, and studying the effects of environmental factors on biodiversity.

- Biotechnology and Synthetic Biology: Bioinformatics plays a crucial role in designing and engineering biological systems for biotechnology applications, including the production of biofuels, pharmaceuticals, and bioproducts.

- Ethical and Legal Considerations: Bioinformatics addresses ethical and legal challenges related to data privacy, informed consent, and responsible use of genetic and health data.

- Scientific Collaboration: Bioinformatics promotes collaboration among scientists from diverse disciplines, including biology, computer science, mathematics, and medicine, facilitating a holistic approach to complex biological questions.

In summary, bioinformatics is integral to advancing our understanding of life sciences, accelerating scientific discoveries, improving healthcare, and addressing critical challenges in biology and medicine. It empowers researchers to harness the wealth of biological data available today and use it to unravel the mysteries of life and disease.

Lesson 4: Tools and Databases

Introduction to biological databases

Biological databases are organized collections of biological data that are designed to be easily accessed, searched, and analyzed by researchers, scientists, and the broader scientific community. These databases store a wide range of biological information, including genetic sequences, protein structures, functional annotations, and experimental data. They are essential tools in modern biological research, enabling scientists to retrieve and analyze vast amounts of biological information efficiently. Here is an introduction to biological databases:

Types of Biological Databases:

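- Sequence Databases:

- These databases store nucleotide and protein sequences. GenBank, the European Nucleotide Archive (ENA), and the DNA Data Bank of Japan (DDBJ) are the major nucleotide sequence repositories, and UniProt is a central resource for protein sequences.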

- Structure Databases:

- Structural databases store three-dimensional structures of biological macromolecules, such as proteins and nucleic acids. The Protein Data Bank (PDB) is a prominent example.

- Functional Annotation Databases:

- These databases provide functional information about genes, proteins, and other biomolecules. Examples include Gene Ontology (GO) and Kyoto Encyclopedia of Genes and Genomes (KEGG).

- Expression Databases:

- Expression databases contain data on gene expression levels under different conditions or in various tissues. The Gene Expression Omnibus (GEO) is a widely used resource.

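- Phylogenetic Databases:

- These databases store phylogenetic trees and the data used to infer them. TreeBASE is one example.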

- Metabolic Pathway Databases:

- These databases provide information about metabolic pathways, reactions, and compounds. KEGG and Reactome are examples.

- Genomic Variation Databases:

- Genomic variation databases store information about genetic variations, including single nucleotide polymorphisms (SNPs) and structural variations. The Single Nucleotide Polymorphism Database (dbSNP) is one such resource.

- Proteomics Databases:

- Proteomics databases contain information about proteins, their functions, post-translational modifications, and interactions. UniProt and InterPro are examples.

- Genome Databases:

- These databases store complete genome sequences of various organisms, along with annotations. The Ensembl Genome Browser and NCBI Genome are widely used genome resources.

Features and Functions:

- Data Retrieval: Users can search and retrieve specific data by using keywords, accession numbers, or various search parameters.

- Data Integration: Many databases link related data, allowing users to navigate between different types of biological information.

- Data Visualization: Some databases offer tools for visualizing and interpreting biological data, including sequence alignments, phylogenetic trees, and protein structures.

- Analysis Tools: Many databases provide analysis tools and software to perform various bioinformatics tasks, such as sequence alignment, motif searching, and pathway analysis.

- Data Download: Users can often download datasets for further analysis in their own software environments.

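- Curation: Many databases are curated by teams of experts who check data accuracy, consistency, and relevance; UniProtKB/Swiss-Prot, for example, is manually reviewed. Others, such as GenBank, are archives of records supplied by the submitting researchers.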

- Cross-Referencing: Databases often include cross-references to other related databases, facilitating comprehensive research.

- Community Contributions: Some databases allow researchers to contribute their own data, annotations, or corrections to improve data quality.

Importance:

Biological databases are critical for various aspects of biological and biomedical research, including genetics, genomics, structural biology, drug discovery, and disease research. They enable researchers to:

- Access a vast amount of biological information.

- Conduct comparative genomics and evolutionary studies.

- Predict protein structures and functions.

- Investigate gene expression patterns.

- Identify potential drug targets.

- Understand disease mechanisms.

- Discover and validate biomarkers.

In summary, biological databases are indispensable resources that empower researchers to harness the wealth of biological data available today, facilitating advancements in our understanding of biology and the development of new treatments and therapies.

Accessing and querying databases

Accessing and querying biological databases is a fundamental skill for researchers and scientists in the fields of biology, bioinformatics, and related disciplines. Here are the general steps involved in accessing and querying databases:

1. Identify the Relevant Database:

- Determine which biological database contains the data you need. This will depend on your research question and the type of data you are looking for (e.g., sequences, structures, functional annotations, etc.).

2. Choose the Appropriate Query Interface:

- Most biological databases provide web-based interfaces for querying and retrieving data. Additionally, some databases offer programmatic interfaces (APIs) for advanced users who want to automate queries using scripts or software.

3. Navigate to the Database Website:

- Open a web browser and go to the website of the chosen biological database. Widely used resources, such as NCBI (which hosts GenBank) and UniProt, have easily accessible websites.

4. Perform a Basic Search:

- Use the search box on the database’s homepage to enter your query. This may involve entering keywords, accession numbers, gene names, or other identifiers.

5. Refine Your Query:

- If your initial query returns too many results or is too broad, you can use advanced search options to refine your query. These options often include filters, Boolean operators (AND, OR, NOT), and search parameters.

6. Review the Search Results:

- After submitting your query, the database will return a list of results that match your search criteria. Review the results to identify the specific data entries you need.

7. Access Detailed Information:

- Click on a result to access detailed information about the data entry. This may include sequence data, annotations, references, and links to related data.

8. Download Data:

- If you want to download the data for further analysis, look for options to download the data in various formats (e.g., FASTA format for sequences, tab-delimited files for annotations).

9. Use Advanced Features:

- Explore advanced features offered by the database. This could include sequence alignment tools, BLAST searches, multiple sequence alignment, data visualization, and more.

10. Save Your Search and Results:

- Many databases allow you to save your search queries and results for future reference. This can be particularly useful for large or complex datasets.

11. Querying via APIs (Programmatic Access):

- For more advanced users and those who wish to automate data retrieval, databases often provide Application Programming Interfaces (APIs). APIs allow you to query the database and retrieve data programmatically using scripts or software.

12. Read Documentation and Tutorials:

- To make the most of a database, consult the database’s documentation and tutorials. These resources often provide guidance on effective searching and data retrieval techniques.

13. Keep Up with Updates:

- Biological databases are regularly updated to include new data and features. Stay informed about updates, as they may impact your research.

14. Cite the Database:

- If you use data from a biological database in your research or publications, it is important to properly cite the database according to its citation guidelines.

Remember that different databases may have unique interfaces and search capabilities, so it’s essential to become familiar with the specific database you’re working with. Additionally, as bioinformatics tools and databases continually evolve, staying up-to-date with the latest resources and techniques is crucial for efficient data access and querying in the field of biology.
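Step 11 (programmatic access) can be sketched in Python against NCBI's E-utilities web API. The code builds two kinds of request URL. `esearch` returns the IDs of records that match a Boolean query, as in step 5. `efetch` downloads records in FASTA format, as in step 8. The helper names are hypothetical, and the field tags `[Gene Name]` and `[Organism]` and the example accession are illustrative assumptions. Check the E-utilities documentation before relying on specific parameters.

```python
from urllib.parse import urlencode

# Base URL of NCBI's E-utilities service
EUTILS = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils"


def build_query(*terms, operator="AND"):
    """Join search terms with a Boolean operator (AND, OR or NOT)."""
    return f" {operator} ".join(terms)


def esearch_url(db, term, retmax=20):
    """URL asking esearch for the IDs of records matching `term` (JSON reply)."""
    params = {"db": db, "term": term, "retmax": retmax, "retmode": "json"}
    return f"{EUTILS}/esearch.fcgi?{urlencode(params)}"


def efetch_url(db, ids, rettype="fasta"):
    """URL asking efetch to return the given records as plain text."""
    params = {"db": db, "id": ",".join(ids), "rettype": rettype, "retmode": "text"}
    return f"{EUTILS}/efetch.fcgi?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical example query: human GCK records in the nucleotide database
    term = build_query("GCK[Gene Name]", "Homo sapiens[Organism]")
    print(esearch_url("nucleotide", term))
    print(efetch_url("nucleotide", ["NM_001301717.1"]))
    # Sending a request needs network access, e.g.:
    # from urllib.request import urlopen
    # with urlopen(efetch_url("nucleotide", ["NM_001301717.1"])) as resp:
    #     print(resp.read().decode())
```

The sketch only builds URLs, so it runs without network access. The commented lines show one way to send a request. Without an API key, NCBI limits clients to about three requests per second. Biopython's `Bio.Entrez` module (`Entrez.esearch`, `Entrez.efetch`) wraps these same endpoints if you prefer a library.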

Common bioinformatics software tools

Bioinformatics software tools are essential for analyzing and interpreting biological data, including genomic sequences, protein structures, gene expression profiles, and more. These tools range from sequence analysis software to structural biology programs and statistical packages. Here is a list of some common bioinformatics software tools:

1. Sequence Analysis:

- BLAST (Basic Local Alignment Search Tool): Compares a query sequence against a database of known sequences to find similar sequences. Significant similarity is often used as evidence of homology (shared ancestry).

- EMBOSS (European Molecular Biology Open Software Suite): Provides a collection of command-line tools for sequence analysis, including sequence alignment, motif searching, and format conversion.

- ClustalW and MUSCLE: Tools for multiple sequence alignment, useful for comparing and aligning multiple DNA or protein sequences.

- BEDTools: A set of utilities for working with genomic intervals and sequences, including operations like intersecting, merging, and manipulating data in the BED format.

2. Genome Analysis:

- Genome Workbench (NCBI): A graphical tool for viewing and analyzing genome sequences and annotations.

- Artemis: Used for annotating bacterial genomes, including the visualization of sequence data and gene predictions.

- UCSC Genome Browser: Provides an interactive web-based platform for exploring and visualizing genomic data from various species.

3. Structural Biology:

- PyMOL: A molecular visualization tool for 3D structure analysis and rendering of proteins, nucleic acids, and small molecules.

- Chimera and ChimeraX: Tools for visualizing and analyzing molecular structures, including protein complexes and electron density maps.

- Modeller: Used for homology modeling and predicting the 3D structure of proteins based on known structures.

4. Next-Generation Sequencing (NGS) Data Analysis:

- BEDTools: Useful for operations on genomic intervals, such as overlap, intersection, and manipulation of data in the BED format.

- GATK (Genome Analysis Toolkit): Developed for variant discovery in high-throughput sequencing data, particularly for identifying single nucleotide polymorphisms (SNPs) and insertions/deletions (indels).

- Samtools: A suite of programs for manipulating and working with SAM (Sequence Alignment/Map) and BAM (Binary Alignment/Map) files commonly used in next-generation sequencing data analysis.

5. Transcriptomics and Gene Expression:

- DESeq2 and edgeR: Tools for differential gene expression analysis from RNA-seq data.

- Cufflinks and StringTie: Used for transcript assembly and quantification from RNA-seq data. Differential expression is handled by companion tools (e.g., Cuffdiff, part of the Cufflinks suite).

6. Metagenomics and Microbiome Analysis:

- QIIME (Quantitative Insights Into Microbial Ecology): A popular tool for analyzing and visualizing microbial community data, particularly 16S rRNA sequencing data.

- Kraken and MetaPhlAn: Tools for taxonomic classification and profiling of metagenomic sequencing data.

7. Programming and Statistical Analysis:

- R and Bioconductor: R is a programming language for statistical computing, and Bioconductor is a collection of R packages specifically designed for bioinformatics and genomics analysis.

- BioPython, BioPerl, and BioRuby: Libraries for scripting and automating bioinformatics tasks in Python, Perl, and Ruby, respectively.

8. Functional Annotation and Pathway Analysis:

- DAVID (Database for Annotation, Visualization, and Integrated Discovery): Used for functional annotation and enrichment analysis of gene lists.

- KEGG (Kyoto Encyclopedia of Genes and Genomes) and Reactome: Resources for pathway analysis and visualization of biological pathways.

These are just a few examples of the many bioinformatics software tools available. The choice of tools depends on the specific research needs, the type of biological data being analyzed, and the analysis tasks at hand. Researchers often combine multiple tools and workflows to tackle complex biological questions effectively.
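BEDTools operations such as intersection rest on simple interval arithmetic. The following Python sketch reads the three required BED columns and reports the regions where two sets of intervals overlap. This is roughly what `bedtools intersect` reports by default. The class and function names are hypothetical and this is not BEDTools' own code. BED coordinates are 0-based and half-open, so an interval ending at 500 does not overlap one that starts at 500.

```python
from typing import List, NamedTuple


class Interval(NamedTuple):
    chrom: str
    start: int  # 0-based, inclusive (BED convention)
    end: int    # 0-based, exclusive


def parse_bed_line(line: str) -> Interval:
    """Build an Interval from the three required BED columns (tab-separated)."""
    chrom, start, end = line.rstrip("\n").split("\t")[:3]
    return Interval(chrom, int(start), int(end))


def intersect(a: List[Interval], b: List[Interval]) -> List[Interval]:
    """Return the shared part of every overlapping pair (x from a, y from b).

    Naive all-pairs scan, for illustration only.
    """
    shared = []
    for x in a:
        for y in b:
            # Half-open intervals overlap iff each one starts before the other ends
            if x.chrom == y.chrom and x.start < y.end and y.start < x.end:
                shared.append(Interval(x.chrom, max(x.start, y.start), min(x.end, y.end)))
    return shared


if __name__ == "__main__":
    genes = [parse_bed_line("chr1\t100\t500"), parse_bed_line("chr2\t0\t50")]
    peaks = [parse_bed_line("chr1\t450\t600"), parse_bed_line("chr1\t500\t700")]
    print(intersect(genes, peaks))  # [Interval(chrom='chr1', start=450, end=500)]
```

The nested loop compares every pair, which is fine for a handful of intervals. Real tools sort intervals or build indexes so they can scale to millions of records.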

Module 3: Sequence Analysis

Lesson 5: DNA Sequencing

DNA sequencing technologies have evolved significantly over the years, allowing researchers to read the genetic code with ever-increasing speed, accuracy, and cost-effectiveness. These technologies have transformed genomics, genetics, and various fields of biology and medicine. Here are some key DNA sequencing technologies:

- Sanger Sequencing (First Generation Sequencing):

- Developed by Frederick Sanger in the 1970s, it was the first widely used DNA sequencing method.

- It involves using chain-terminating nucleotide analogs (dideoxynucleotides) to stop DNA synthesis at specific positions, revealing the sequence.

- While accurate, it is relatively slow and costly, limiting its use for large-scale sequencing projects.

- Next-Generation Sequencing (NGS) or Second Generation Sequencing:

- NGS technologies emerged in the mid-2000s, revolutionizing DNA sequencing.

- These methods sequence millions of DNA fragments at the same time (massively parallel sequencing), giving far higher throughput than Sanger sequencing.

- Commonly used NGS platforms include Illumina and Ion Torrent. The earlier 454 (Roche) platform was widely used but has since been discontinued.

- Third Generation Sequencing:

- Third-generation sequencing technologies aim to overcome limitations of NGS, such as short read lengths.

- PacBio Sequencing (Single-Molecule Real-Time, SMRT): It uses single DNA molecules as templates and measures real-time incorporation of nucleotides, resulting in long reads.

- Oxford Nanopore Sequencing: This method passes DNA through nanopores and measures changes in electrical current as bases move through, providing long reads.

- Nanopore Sequencing:

- Nanopore sequencing, exemplified by Oxford Nanopore Technologies, allows direct sequencing of single DNA strands as they pass through a nanopore.

- It offers long read lengths and has applications in various fields, including metagenomics and real-time pathogen detection.

- Single-Cell Sequencing:

- Single-cell sequencing technologies enable the analysis of individual cells, revealing cellular heterogeneity.

- Single-cell RNA-seq (scRNA-seq) and single-cell DNA-seq (scDNA-seq) are examples of this approach.

- Long-Read Sequencing:

- Technologies like PacBio and Oxford Nanopore provide long reads, which are particularly useful for resolving complex regions of genomes, structural variants, and haplotypes.

- RNA Sequencing (RNA-seq):

- RNA-seq sequences the RNA molecules in a sample (usually after conversion to cDNA). It is the main technique of transcriptomics and reveals gene expression levels and alternative splicing events.

- Epigenomic Sequencing:

- Methods such as bisulfite sequencing, which detects DNA methylation, and ChIP-seq (Chromatin Immunoprecipitation Sequencing), which maps histone modifications and protein binding sites on DNA, are used to study the epigenome.

- Metagenomic Sequencing:

- Metagenomic sequencing analyzes genetic material from environmental samples, enabling the study of complex microbial communities.

- Targeted Sequencing:

- Targeted sequencing approaches focus on specific regions of interest, such as exomes (exome sequencing), cancer-related genes (cancer panel sequencing), or custom target regions.

- Whole-Genome Sequencing (WGS):

- WGS involves sequencing the entire genome of an organism and provides a comprehensive view of genetic information.

- Clinical Sequencing:

- DNA sequencing is increasingly used in clinical settings for diagnosing genetic disorders, identifying disease-causing mutations, and guiding personalized medicine.

- Single-Molecule Sequencing:

- These technologies aim to sequence single DNA or RNA molecules directly, without the need for amplification.

DNA sequencing technologies continue to advance rapidly, with ongoing improvements in accuracy, read length, speed, and cost-effectiveness. These advancements are driving discoveries in genomics, genetics, and a wide range of biological and medical fields.
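A toy Python simulation can illustrate the chain-termination principle behind Sanger sequencing. It simplifies the chemistry in three ways, all assumptions of this sketch:

- The template is written 3'→5', so the synthesized strand is its direct base-by-base complement.
- Primers are ignored.
- Every position yields exactly one labelled fragment.

Sorting the fragments by length plays the role of electrophoresis. Reading the terminal base of each fragment in that order then recovers the sequence of the new strand. The function names are hypothetical.

```python
import random

# Base pairing used when the polymerase copies the template
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}


def terminated_fragments(template_3to5):
    """All chain-terminated products of one (idealized) sequencing reaction.

    Assumes the template is written 3'->5', so the new 5'->3' strand is simply
    its complement. A ddNTP can be incorporated at any position, ending the
    chain there; each fragment is recorded as (length, labelled terminal base).
    """
    new_strand = "".join(COMPLEMENT[base] for base in template_3to5)
    return [(i + 1, new_strand[i]) for i in range(len(new_strand))]


def read_sequence(fragments):
    """Mimic electrophoresis: order fragments by length, read terminal bases."""
    return "".join(base for _, base in sorted(fragments))


if __name__ == "__main__":
    frags = terminated_fragments("TACGGT")
    random.shuffle(frags)        # fragments leave the reaction in no particular order
    print(read_sequence(frags))  # ATGCCA
```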

Sequence formats (FASTA, GenBank)

Sequence formats are standardized ways of representing biological sequences, such as DNA, RNA, and protein sequences, in a human-readable and computer-readable format. These formats facilitate the storage, sharing, and analysis of biological data. Two widely used sequence formats are FASTA and GenBank format:

1. FASTA Format:

FASTA (pronounced “fast-A”, from “FAST-All”) is a simple and widely used text-based format for representing biological sequences. It originated with the FASTA sequence-comparison software developed by David J. Lipman and William R. Pearson in the 1980s. FASTA format consists of two main parts:

- Header Line: The header line begins with a “>” (greater-than) symbol followed by a sequence identifier or description. This line provides information about the sequence, such as its name, source organism, or any other relevant details.

Example Header Line:

>NM_001301717.1 Homo sapiens glucokinase (GCK), transcript variant 3, mRNA

- Sequence Data: The sequence data follows the header line and is composed of letters representing the sequence (A for adenine, T for thymine, G for guanine, C for cytosine, U for uracil in RNA, and single-letter amino acid codes for proteins).

Example Sequence Data:

ATGGAGAAGGCAACAGTTTTCATCCTGCTC...

FASTA format is versatile and can be used for nucleotide sequences (DNA or RNA) as well as protein sequences. It is easy to read and edit manually, making it a popular choice for sequence databases, sequence files, and bioinformatics tools.
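A minimal FASTA reader in Python shows how little structure the format needs. A line starting with “>” opens a new record, and all following lines up to the next header are joined into the sequence. This sketch assumes the common convention that the identifier is the header text up to the first whitespace. The function names are hypothetical. For real work, Biopython's `SeqIO.parse(handle, "fasta")` does the same job more robustly.

```python
import io
from typing import Iterator, List, TextIO, Tuple


def _make_record(header: str, chunks: List[str]) -> Tuple[str, str, str]:
    # Identifier = header text up to the first whitespace; the rest is description
    parts = header.split(None, 1)
    ident = parts[0] if parts else ""
    desc = parts[1] if len(parts) > 1 else ""
    return ident, desc, "".join(chunks)


def read_fasta(handle: TextIO) -> Iterator[Tuple[str, str, str]]:
    """Yield (identifier, description, sequence) for each record in a FASTA file.

    Lines appearing before the first header are ignored in this sketch.
    """
    header, chunks = None, []
    for line in handle:
        line = line.strip()
        if not line:
            continue                          # skip blank lines
        if line.startswith(">"):
            if header is not None:            # a new header ends the previous record
                yield _make_record(header, chunks)
            header, chunks = line[1:], []
        elif header is not None:
            chunks.append(line)               # wrapped sequence lines are joined
    if header is not None:
        yield _make_record(header, chunks)


if __name__ == "__main__":
    text = ">seq1 toy DNA record\nATGGAG\nAAGGCA\n\n>seq2\nMKV\n"
    for ident, desc, seq in read_fasta(io.StringIO(text)):
        print(ident, repr(desc), seq)
```

Because the reader is a generator, it handles files with millions of records without loading them all into memory.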

2. GenBank Format:

GenBank format is a more structured and comprehensive format for storing biological sequence data. It is the flat-file format of the GenBank database, which is maintained by the National Center for Biotechnology Information (NCBI), and it is commonly used for sharing and archiving genetic information. GenBank format files typically have the “.gb” or “.gbk” file extension. GenBank format includes the following components:

- LOCUS Line: The LOCUS line provides metadata about the sequence: its name, length, molecule type (e.g., DNA or mRNA), topology (linear or circular), GenBank division (e.g., PRI for primate sequences), and modification date.

Example LOCUS Line:

LOCUS NM_001301717 2596 bp mRNA linear PRI 04-MAR-2023

- FEATURES Section: This section describes various features of the sequence, such as coding regions, genes, exons, and other annotations. Each feature is specified with its location on the sequence and additional details.

Example FEATURES Section (excerpt):

FEATURES             Location/Qualifiers
     CDS             23..1413
                     /product="glucokinase"
                     /gene="GCK"

- ORIGIN Section: The ORIGIN section contains the sequence itself. Each line starts with the position of its first base, followed by up to 60 bases written in lowercase blocks of 10. The record ends with a “//” line.

Example ORIGIN Section (excerpt):

1 ttatcctttt ttcttcacac tccagcagga tgctggctct gtaaggcagt ggaaga...
361 ctgcaattta ttttatttta ttttatttta ttttatttta ttttatttta ttttta...

GenBank format files also include additional information, such as references, comments, and source organism data. The format is used for archiving and sharing sequences in GenBank and DDBJ. The third partner database, the European Nucleotide Archive (ENA) at EMBL-EBI, distributes the same records in its own EMBL flat-file format.
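To make the GenBank layout concrete, here is a minimal Python sketch. It pulls the name, length, and molecule type from the LOCUS line, simple CDS locations from FEATURES, and the sequence from ORIGIN. The record is a made-up toy, not a real database entry, and the function names are hypothetical. The parser only handles plain `start..end` locations; it does not handle complement(), join(), or partial locations. Biopython's `SeqIO.read(handle, "genbank")` handles the full format.

```python
import re

# A made-up toy record in GenBank layout (not a real database entry)
SAMPLE = """\
LOCUS       TOY0001                   24 bp    DNA     linear   SYN 01-JAN-2000
FEATURES             Location/Qualifiers
     CDS             4..12
                     /gene="toy"
ORIGIN
        1 ggcatgaaat aaccgtatcg ttag
//
"""

# Matches only simple "CDS  start..end" feature lines
CDS_RE = re.compile(r"\s+CDS\s+(\d+)\.\.(\d+)\s*$")


def parse_genbank(text):
    """Return name, length, molecule type, simple CDS spans and sequence."""
    record = {"cds": []}
    seq_parts = []
    in_origin = False
    for line in text.splitlines():
        if line.startswith("LOCUS"):
            # LOCUS <name> <length> bp <molecule type> <topology> <division> <date>
            fields = line.split()
            record["name"], record["length"] = fields[1], int(fields[2])
            record["mol_type"] = fields[4]
        elif line.startswith("ORIGIN"):
            in_origin = True
        elif line.startswith("//"):
            break                                   # end of the record
        elif in_origin:
            # Drop the position number and spaces, keep only the bases
            seq_parts.append(re.sub(r"[^A-Za-z]", "", line))
        else:
            match = CDS_RE.match(line)
            if match:
                record["cds"].append((int(match.group(1)), int(match.group(2))))
    record["sequence"] = "".join(seq_parts).upper()
    return record


def extract(sequence, start, end):
    """GenBank locations are 1-based and inclusive; convert to a Python slice."""
    return sequence[start - 1:end]


if __name__ == "__main__":
    rec = parse_genbank(SAMPLE)
    for start, end in rec["cds"]:
        print(rec["name"], start, end, extract(rec["sequence"], start, end))
```

Because GenBank locations are 1-based and inclusive, `4..12` covers nine bases and corresponds to the Python slice `[3:12]`. In the toy record that slice is `ATGAAATAA`, a start codon, one codon, and a stop codon.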